Walking up stairs

The BRAWL² Tournament Challenge has been announced!

It starts May 12, and ends Oct 17. Let's see what you got!

https://polycount.com/discussion/237047/the-brawl²-tournament

Best Of

Re: The Bi-Monthly Environment Art Challenge | January - February (100)

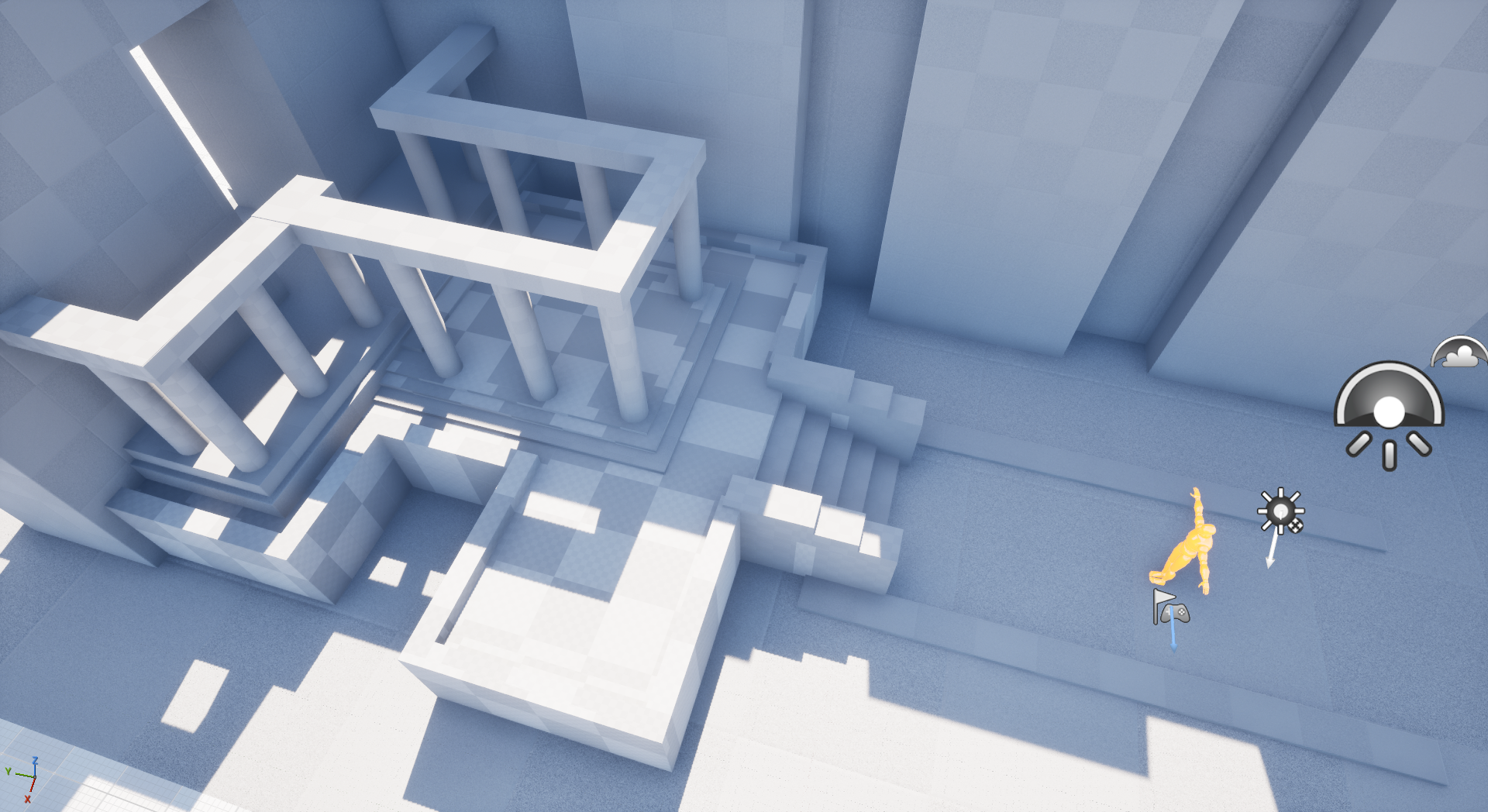

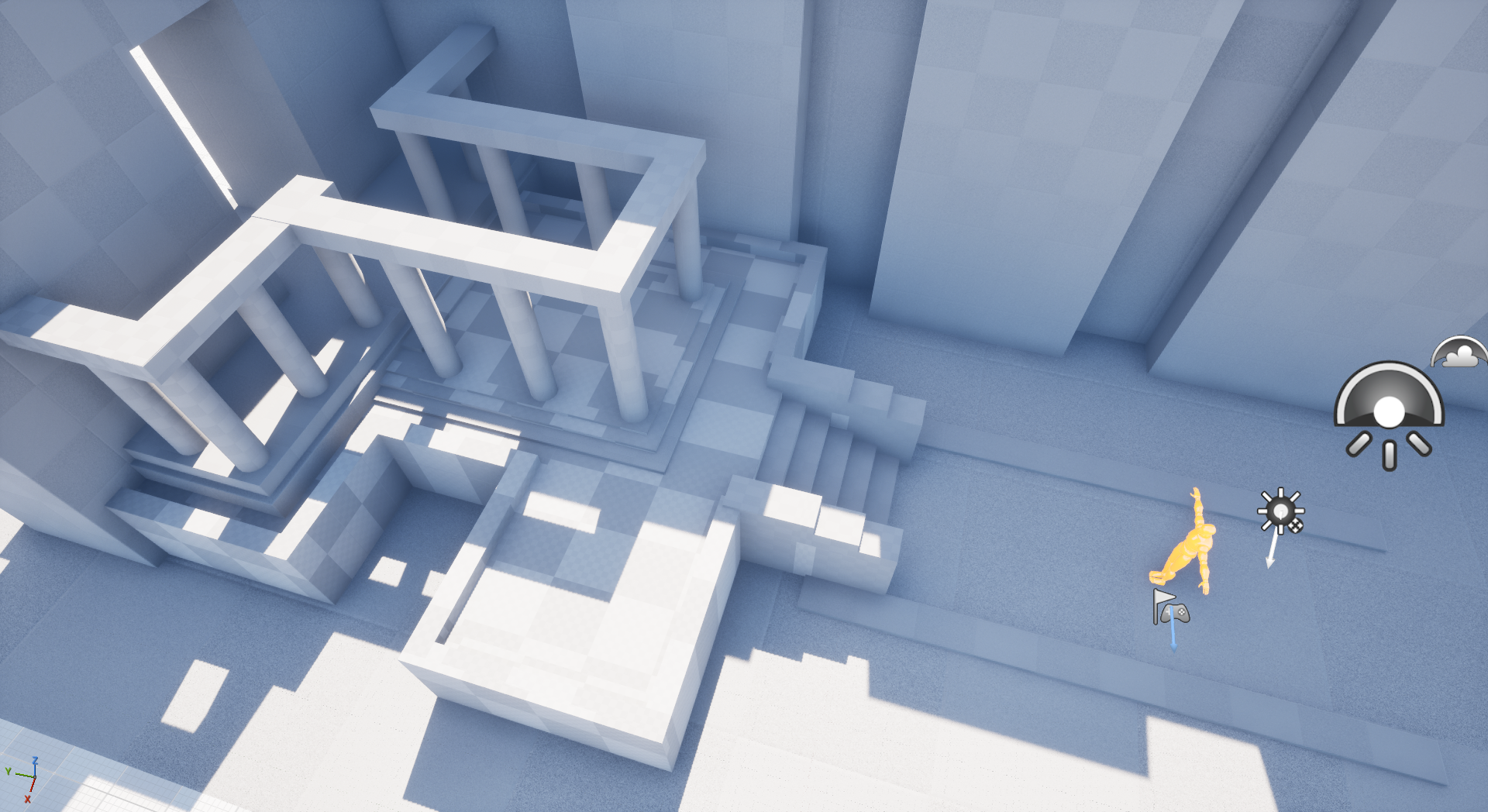

Ey, this is my first time entering these challenges. I'll look for any feedback during my progress (●'◡'●). This is my first pass at the blockout. It's not done yet since some smaller elements are still missing, and I wanted to get a feel for the scale first.

As an extra idea, I want to add some vivid element involving the pond. For now, I've sketched a little cascade path. I will explore a couple of ideas that aren't too flashy, since I don't want the pond element to compete with the visual pull of the main exit structure. ヾ(@⌒ー⌒@)ノ

Rudy_Yair

4 ·

Live 3d embeds!

This is pretty neat, you can embed a live real-time 3D viewer here on Polycount, using Sketchfab!

model

Instructions and details here:

https://polycount.com/discussion/160022/sketchfab-integration

Looking forward to seeing your models in some live embeds!

Eric Chadwick

3 ·

Re: Don't Use AI for Replies

zetheros said:

> yea, disclosure that AI was used sounds good. As for the rest, I can really only speak to what I see and do on a daily basis. After a day's work of making 3d assets for my job, I spend an additional hour or more making art on the side, and this is what I've done consistently for the past 3 years. During that time I have yet to find a genuinely compelling reason to use generative AI.

It blows my mind that no tool exists for AI-automated retopology.

People have completely jumped ahead into making shitty full AI-generated models which are ugly and completely unusable for anything, but nothing for retopology

3 ·

Re: Sketchbook: Fabi_G

Urgh, Long time no post

Here is a quick door I created today, using a photo I took on a walk some time ago

Sketchfab:

model

I think this is it for the year - all the best, until next year :-*

Fabi_G

3 ·

Transformers - Soundwave

A passion project for me as a Transformers fan, so here is my interpretation/reimagining of the classic Decepticon Soundwave.

Made in Maya and rendered with Arnold

Main Renders:

Grey

Main Shots and Asset Breakdown:

Turntables:

Main

Grey

7 ·

Re: Supporting Edges vs Bevels vs Creasing for 3d game art and cinematics?

You personally can mix and match whatever methods you wanna mix and match.

In production, usually a common ground, a ruleset is defined, to make things interchangeable between artists.

You can use creases on anything you would use support geo for. It's very similar, you will usually just end up with denser base meshes to control edge quality across your asset.

In the case of sculpting, I'd argue that bases made with creases are preferable because they give a more even mesh density, while support geo tends to bunch up geometry around hard edges, which usually makes sculpting over it a pain.

As for your pictures, I'd assume the high poly to be baked down was done with creases, not the lowpoly itself. Possibly the lowpoly was made first. Then creases applied to generate a highpoly. Very possible to walk from low to high and bake it back down, rather than building a highpoly then retopo or optimize to make the low.

As for your workflow, again it is very similar. In modifier-based software (Blender, Max) it's very much the same. Select edges to mark as sharp, then either apply a quad chamfer or a smooth based on creases.

Same same, but different

Can show some when on a PC

3 ·

Re: The Bi-Monthly Environment Art Challenge | January - February (100)

I tried to do the stylized prop!

Everything was done inside Blender and it was my first time with texture painting. I kept the polycount low (even if it could go lower).

I'm quite happy with the end result, even if it has some flaws here and there. Maybe I'll rework it later.

8 ·

Re: Sketchbook: Celosia

I had my computer go down for maintenance nearly every other month last year. Turns out a RAM stick was bad. And the GPU VRAM was also glitching. And an HD started to fail while another imploded. The mobo is also suspect. Wonderful times. After RMAs and tweaking to stabilize it so it could limp along for a little longer, I finally replaced it!

All that to say I progressed on the character above but the files are exiled somewhere else and I don't have the time to hunt them down and pick up from where I stopped just yet. But I have something else to show.

I joined the polycount challenge #100 and I'll be posting a collection of a few mini-breakdowns here so they don't get lost in time when the challenge thread closes, starting with the stylization of the locking mechanism.

The original concept is by Georgi Simeonov.

It's made from mirrored curves, using geometry nodes to create non-destructive randomization, with manual guidance from a couple of attributes. Using a faceted profile alone already creates instant stylization, and I'm pretty happy with the chiseled look without the need to break out the sculpting tools. It was faster than sculpting too, and those nodes are something I can reuse again and again in the future.

I'm going for a variable chiseled look for the lid and glass too, and will probably use the toned-down version of the lock in the final prop. I want it to look more geometric than wavy and broken, and the edge treatment shouldn't deviate too much from the rest of the jar, which won't be as chipped.

I had this kind of geometric edge treatment in mind for the wires.

And I lifted the locking mechanism from a real jar.

It works like this:

I also did an initial unwrap with geonodes and resampled the curves using a tree I created for the hair cards project that decimates splines automatically based on angle/tilt/radius. I don't know yet if I'll use a simple quad profile with baked normals or actual geo for the low poly lock. These wires are pretty but they're hands down the densest part of the prop. I don't know if they're worth all these tris.
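
For anyone curious what angle-based spline decimation can look like outside of nodes, here is a minimal Python sketch. It is not the geometry nodes tree mentioned above, just an illustration of the general idea under simple assumptions: the spline is treated as a plain list of 3D points, only the turning angle is considered (tilt and radius are ignored), and the names `turning_angle`, `decimate_by_angle` and `min_angle_deg` are hypothetical.

```python
import math


def turning_angle(a, b, c):
    """Return the angle in radians between segments a->b and b->c."""
    v1 = tuple(q - p for p, q in zip(a, b))
    v2 = tuple(q - p for p, q in zip(b, c))
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0.0 or n2 == 0.0:
        # Coincident points carry no direction, so treat them as straight.
        return 0.0
    cos_t = sum(x * y for x, y in zip(v1, v2)) / (n1 * n2)
    # Clamp to guard against floating point drift outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_t)))


def decimate_by_angle(points, min_angle_deg=5.0):
    """Drop interior points where the curve turns less than min_angle_deg.

    The angle is measured from the last kept point, so gentle curvature
    accumulates until it crosses the threshold. Endpoints are always kept.
    """
    if len(points) <= 2:
        return list(points)
    threshold = math.radians(min_angle_deg)
    kept = [points[0]]
    for i in range(1, len(points) - 1):
        if turning_angle(kept[-1], points[i], points[i + 1]) >= threshold:
            kept.append(points[i])
    kept.append(points[-1])
    return kept


# An L-shaped wire: only the corner and the two endpoints survive.
wire = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (2, 1, 0), (2, 2, 0)]
print(decimate_by_angle(wire))
```

A lower `min_angle_deg` keeps more points on gentle bends, which is the same trade-off as density versus silhouette on wires like these.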

Celosia

7 ·

Re: What Are You Working On? (3D) 2026

Marvel Rivals Nightcrawler fan art based on a concept by @ishaliart. The original thread is linked below. Right now I've finished the model/textures and am getting into polish.

You can check out the original post to keep up with the progress and give feedback:

https://polycount.com/discussion/238067/marvel-rivals-nightcrawler-fanart/p1?new=1

JGavinFischer

5 ·