The BRAWL² Tournament Challenge has been announced!

It starts May 12, and ends Oct 17. Let's see what you got!

https://polycount.com/discussion/237047/the-brawl²-tournament

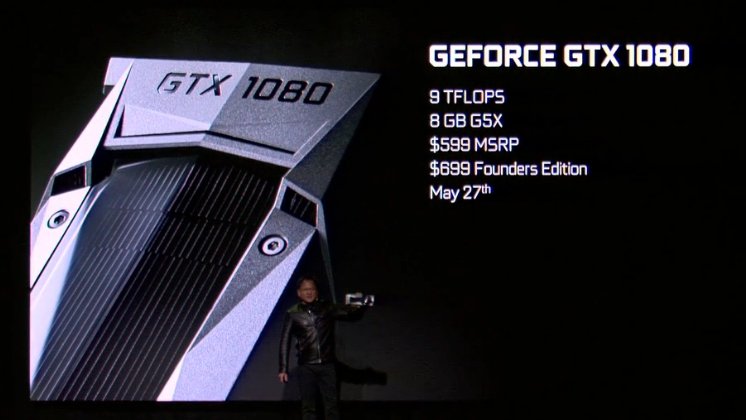

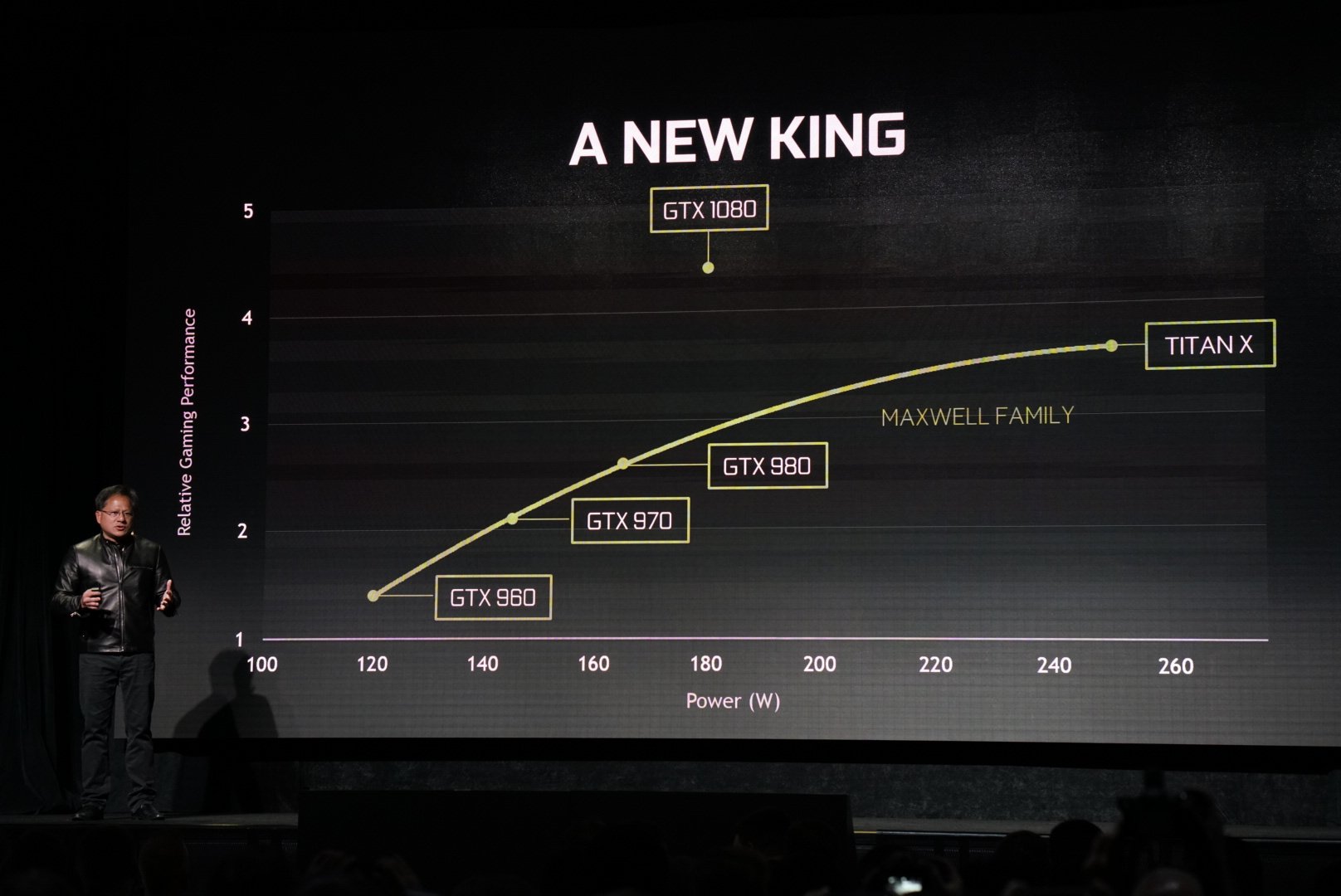

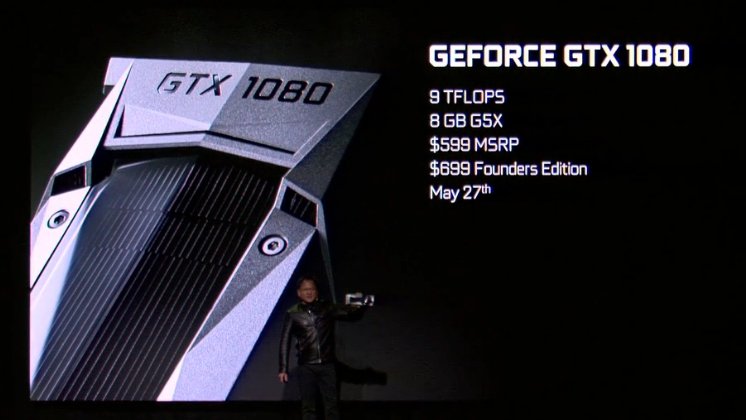

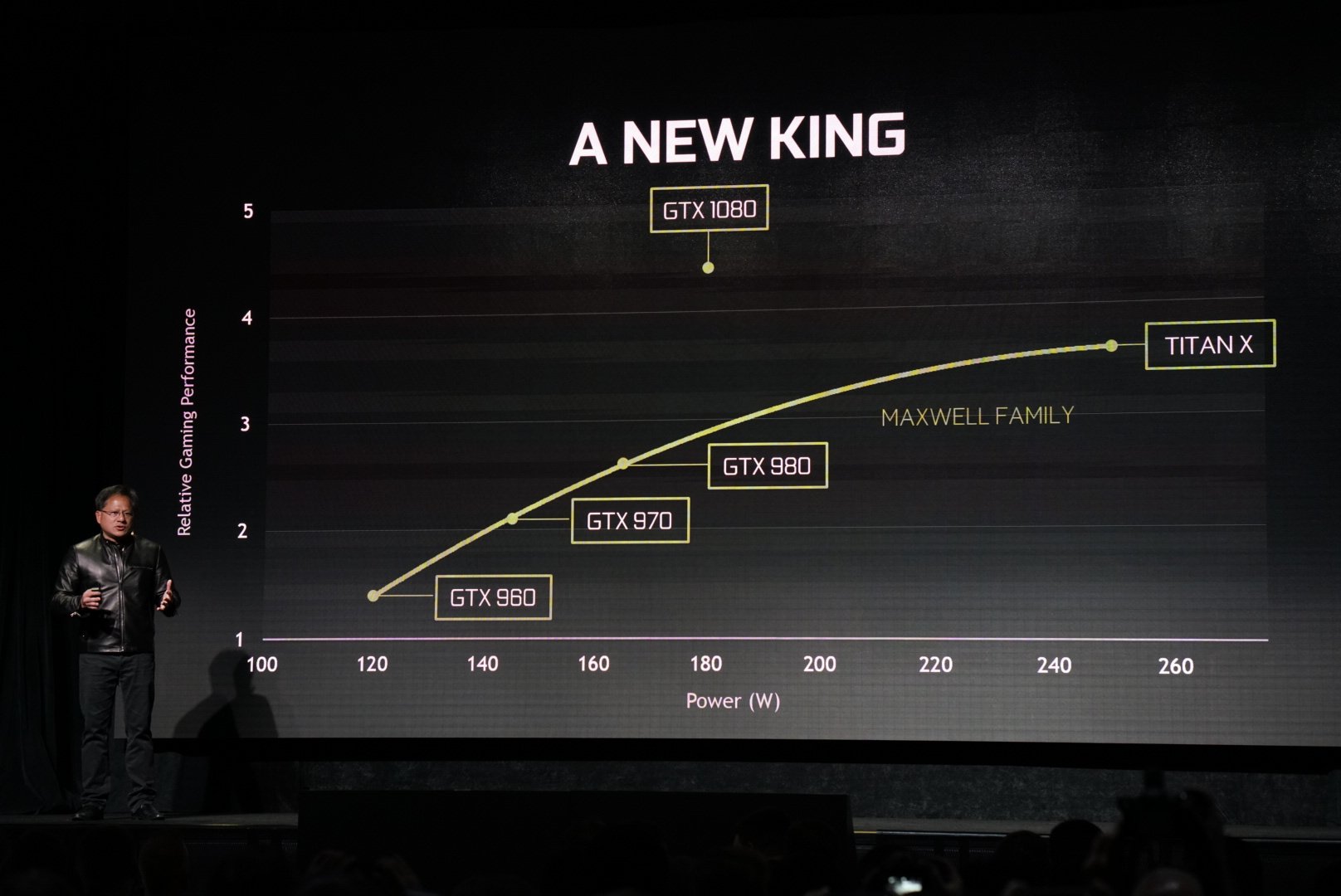

GPUs. Nvidia's Pascal: GTX 1070 and 1080 and what this means for the future.

http://www.geforce.com/hardware/10series/geforce-gtx-1080

With Nvidia's latest announcement, their new 1070 alone can outperform a Titan X while only costing $379. Pictures say much more than words, so I'll throw these around.

The bar is WAY lower for baseline VR, and what used to be higher end CG work. We haven't had a jump in graphics capability in YEARS with this kind of power, performance and efficiency.

Below is their performance chart released for the 1080 ($600)

Replies

The announcement of 8 GB of faster (GDDR5X) VRAM is amazing. I think the 970/980 were a bit held back by their amount of VRAM, especially in Substance Painter/Mari.

Definitely interested in these; however, I am also curious how much longer we will have to wait for HBM 2.0 cards.

http://videocardz.com/59725/nvidia-gtx-1080-polaris-10-11-directx12-benchmarks

And of course, the benchmarks are so far off from being reliable, but just seeing the consistent flood of information of the ballpark frame rates we should expect is exciting. Id showed off Doom, supposedly at maxed out settings (guessing on a 1080p projector), at 120-200FPS. Those are some solid numbers, yet again "how reliable?", but the ballpark is exciting.

This tends to be driver issues and not hardware issues; the Nvidia reference cards (blowers) have been solid for quite a few generations.

This time around they seem to be calling the reference card "Founders Edition" and charging $699. It now has some vapour chamber cooling, and certain power chips are above what is needed, I assume for overclocking. The versions starting at $599 will be the brand-specific versions (ACX, STRIX, Windforce, etc.) and won't necessarily have the better power delivery chips, but it isn't certain when these cards will release, so Nvidia is basically gouging $100 out of people who can't wait for the brand-specific cards. Keep in mind that each brand will also sell Founders Edition cards; they are all the same thing, just with different warranties.

But also, if you are just modeling/texturing and want to put together a solid portfolio, a low end gaming GPU like the 750 Ti is fine.

I know Substance Painter in particular can be demanding when working with 4k textures. Unreal Engine 4 can be very demanding depending on the scene, especially if you like trying new and experimental features. A lot of applications are slower when working at 4k resolution. There are some bakers that are GPU accelerated, and things like Iray.

Also if you want to make 60 fps videos at 1440p of projects that you are working on, like I am, a GTX 760 isn't cutting it.

But maybe in 4 years with the 3rd gen of VR hardware there will be an actual need for 4k textures.

4k-16k textures are used all the time for movies though.

People around here are more excited for the latest GPUs though because it'll allow them to do more in game engines (like UE4) and 3D asset creation software (Max, Maya, Modo, Blender, Substance Painter, etc.).

What you are probably thinking of is final frame rendering for film and movies, special FX... a good deal of that is probably still done on the CPU, but we are not talking about a single PC here, but entire render farms. The reason is that the frames for Pixar and whatnot are very complex and need tons and tons of memory. However, even for film there is a trend to use more GPU in the pipeline, especially to give the individual artist an image that is closer to the final shot and to allow quicker edits and previews.

http://on-demand.gputechconf.com/gtc/2016/video/S6454.html (Pixar presentation at GTC 2016 on their animation tool)

http://on-demand.gputechconf.com/gtc/2016/video/S6844.html (Pixar presentation of their preview lighting/material editor)

As for final frame rendering, NVIDIA presented a fast interconnect called NVLink for their server cards (Tesla), which allows a much faster connection between chips and therefore also allows increasing the total fast memory in the future.

Blizzard switched to Redshift and there are several others doing FX with GPU-based farms...

https://www.redshift3d.com/blog/blizzards-overwatch-animated-shorts-rendered-with-redshift

can't wait

Also in real world examples, it is very noticeable if you use a 2k texture on a two handed first person weapon instead of a 4k one.

@ThreadTopic, just switched to an octa core i7 5960X Extreme Edition @ 4.3 GHz overclocked, which costs 1000 retail and is twice as fast as my old one for 3D rendering, and still the CPU is so much slower than the GPU in rendering tasks, it's unreal. I'm now switching to Octane, which is a GPU based pathtracer, and rendering the same sprite animation took 17 minutes on the i7 5960X and like 50 seconds on the 980 Ti. The difference is unreal. With 2x the newly released 22 core server CPU from Intel I could get this down to like 8 minutes, and a single 660 dollar 980 Ti would still be 10 times as fast.

So I'm getting myself some nice double 1080s I think for that sweet offline rendering power. Real time viewport, hell yeah.
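To put the numbers from that post side by side, here is a minimal sketch of the arithmetic. It only uses the timings quoted above (17 minutes, 50 seconds, and the estimated 8 minutes for the dual 22 core setup); the function and variable names are made up for illustration.

```python
def speedup(slow_seconds: float, fast_seconds: float) -> float:
    """How many times faster the second timing is than the first."""
    return slow_seconds / fast_seconds

# Timings as quoted in the post (rough, "like 50 seconds").
cpu_seconds = 17 * 60       # i7 5960X: 17 minutes
gpu_seconds = 50            # 980 Ti: about 50 seconds
dual_xeon_seconds = 8 * 60  # poster's estimate for 2x 22 core CPUs

gpu_vs_cpu = speedup(cpu_seconds, gpu_seconds)
gpu_vs_dual_xeon = speedup(dual_xeon_seconds, gpu_seconds)

print(f"980 Ti vs i7 5960X: {gpu_vs_cpu:.1f}x")
print(f"980 Ti vs estimated dual 22 core: {gpu_vs_dual_xeon:.1f}x")
```

So the quoted timings work out to roughly 20x against the single CPU and a bit under 10x against the estimated dual-CPU time, which lines up with the "10 times as fast" figure.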

Dude, unless the coin is a level, it would in no way need a multi 4k, and even in that case you would treat it like terrain or use detail textures like @ZacD mentioned. Assuming each side is a 4k map, you don't begin to see fidelity break down until the coin is wider than a 4k screen.
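The coin argument boils down to texels per screen pixel. A rough sketch, assuming the 4k map stretches across the coin's full width and ignoring UV padding, mipmapping and filtering (the helper name is hypothetical):

```python
def texels_per_pixel(texture_size: int, on_screen_pixels: int) -> float:
    """Texels available for each screen pixel the object covers along one axis."""
    return texture_size / on_screen_pixels

# A 4096 texel map on a coin that fills the whole height of a 1080p screen.
coin_on_1080p = texels_per_pixel(4096, 1080)

# The same map on a coin that is 4096 pixels wide on screen.
coin_at_4096px = texels_per_pixel(4096, 4096)

print(f"1080 px tall coin: {coin_on_1080p:.2f} texels per pixel")
print(f"4096 px wide coin: {coin_at_4096px:.2f} texels per pixel")
```

Anything above 1.0 means the texture holds more detail than the screen can show for that object, so under these assumptions the map only starts to run out once the coin is drawn wider than about 4096 pixels.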

Sure we as game artists might notice these things because we go hunting for that shit when we play games, but at this point where the majority of people still game on 1080p a jump from 2K to 4K isn't that noticeable.

I never said they don't have a benefit, but my point is that with the resolution most people are using right now, and texel density having been tuned to that resolution (be it by actual unique textures, tiling or detail textures), the jump from doubling texture size isn't that apparent. It is even less apparent due to the fact that games tend to be in motion a lot of the time.

When 60%+ of gamers are on 4k screens (and the right distance from said screens to even notice the difference from 1080p) then you can call such a statement foolish.

And there are unique situations like space games that can take advantage of there not being a lot besides a skybox and ships. But if I remember correctly Star Citizen uses mostly trim and detail textures and not one big sheet.

Probably with 16 gigs of VRAM, although I don't know if you truthfully will need anything more than 16.

Anyone watch the Nvidia presentation, with the girl screaming "I can afford that"?

I'm excited about this. I'm curious what it means for the Max viewport, ZBrush, and V-Ray rendering. CUDA cores? And of course VR. But dev performance is what I care about most if I'm going to upgrade. I know V-Ray is mostly CPU, but I'm curious if going from a top end 7 series card to a 1080 would help with just about everything.

But I drool at the thought of Substance performance on a 12GB Pascal.

Yeah, tons of people have been really hating on her and wishing she died, but I loved her comments. I really enjoyed myself listening to the various screams from the crowd

Honestly, people hating on this anonymous woman!

I found her funny, I was anticipating more of her screaming comments in the presentation. If she didn't get a 1080 at the presentation, she should have gotten one on the spot. For all we know she could have been, I dunno, Natalie Portman.

It was exaggerated but it clearly depends on what you are planning to do.

Also my examples were not the point. Generalizing things like "Nobody needs 4k textures" and "We may need 4k textures in 4 years with VR" is nonsense, as they have been used for years, and you can clearly see the difference between a 4k and a 4x lower density 2k texture. Also the logic behind it is flawed, as you can never know what you are allowed to use unless you take the whole scene into account.

I could be using one 128k texture for a full game, or 100,000 256 textures. How can one draw a line there somewhere? It makes no sense.

I see tons of people whinging about texture resolution when they don't realize actual usage / don't lay out UVs well / don't even have sharp textures in the first place. They need to sort that stuff out before wanting to bump up to another resolution level.

As far as games go the line is the resolution of the screen and how large objects will typically be on screen. For movies it is different, especially these days with new display technologies like HDR and 8k resolutions, so these things are authored for the future. Games are forced to make do with the technology that is generally available, so if there isn't that much of a difference with increased texture resolution they will put the resources to better use. It's like having a DSLR with a 100 megapixel sensor but some shitty lens that isn't able to resolve all that detail. Until you get a better lens you might as well shoot 25 megapixels.

How can anyone say 2k is fine and 4k is not without knowing how it's used?

By this logic, using 4 x 2k textures on a character is fine, but one 4k is too much, because the name has a 4 in it?

You can pack only one piece of an object or millions of objects into one texture. Rage used one single 32x32k. You just cannot judge this.

It's like saying "Don't ever spend more than $1500 on any object in your life". It's just nonsense.

I'm repeating myself here, but this cannot be generalized at all and it makes no logical sense to try.
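The "4 x 2k versus one 4k" point can be checked with a quick texel count. This is only a sketch: it assumes square textures and, for the memory figure, uncompressed RGBA at 4 bytes per texel with no mipmaps, which real games rarely ship. The function names are hypothetical.

```python
def texel_count(size: int, count: int = 1) -> int:
    """Total texels in `count` square textures of `size` x `size`."""
    return count * size * size

def uncompressed_mib(size: int, count: int = 1, bytes_per_texel: int = 4) -> float:
    """Memory in MiB, assuming uncompressed RGBA8 and no mip chain."""
    return texel_count(size, count) * bytes_per_texel / (1024 * 1024)

four_2k = texel_count(2048, count=4)
one_4k = texel_count(4096)

print(f"4 x 2k: {four_2k:,} texels, {uncompressed_mib(2048, 4):.0f} MiB")
print(f"1 x 4k: {one_4k:,} texels, {uncompressed_mib(4096):.0f} MiB")
```

Both come out to the same number of texels and the same memory under these assumptions, so the label on the texture says nothing by itself about cost.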

Rage never has a 32k texture loaded into memory; it streams smaller chunks of that texture in as you move through the world. You may argue that the engine treats it as 1 big texture, but most engines these days do texture atlasing and see our many textures as one big texture too.
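The streaming idea described here can be sketched as a page lookup: split the huge texture into small fixed-size pages and load only the ones the view touches. This is not Rage's actual code; the 128 texel page size, the 32k figure taken from the post above, and the function name are all assumptions for illustration.

```python
def pages_for_region(x0: int, y0: int, x1: int, y1: int, page_size: int = 128) -> set:
    """Page indices (col, row) overlapping the texel rectangle [x0, x1) x [y0, y1)."""
    first_col, first_row = x0 // page_size, y0 // page_size
    last_col, last_row = (x1 - 1) // page_size, (y1 - 1) // page_size
    return {
        (col, row)
        for col in range(first_col, last_col + 1)
        for row in range(first_row, last_row + 1)
    }

VIRTUAL_SIZE = 32 * 1024  # texels per side, using the figure quoted in the thread
PAGE_SIZE = 128           # hypothetical page size

total_pages = (VIRTUAL_SIZE // PAGE_SIZE) ** 2

# A 512 x 512 texel patch that happens to be visible right now.
visible = pages_for_region(1000, 2000, 1512, 2512, PAGE_SIZE)

print(f"{len(visible)} of {total_pages} pages needed for this patch")
```

With these made-up numbers only a couple of dozen pages out of tens of thousands have to be resident at once, which is the sense in which the full texture is never in memory.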

When people ask how many polygons should X be, the answers tend to be "Enough". Paying $1500 for an object that is worth $750 is nonsense, just like modeling something that is 100,000 polygons that only needs to be 10,000 is nonsense and finally like using a 4k texture on an object that only needs a 1k is NONSENSE.