[Blender 2.8] Bevel shader normal map baking artifacts on flat surfaces

Hello, everyone.

I decided to try out the Blender 2.8 bevel shader for Low-poly normal baking. While the results seem quite impressive, I get some artifacts, mostly on flat surfaces, which are seemingly caused by the High-poly topology.

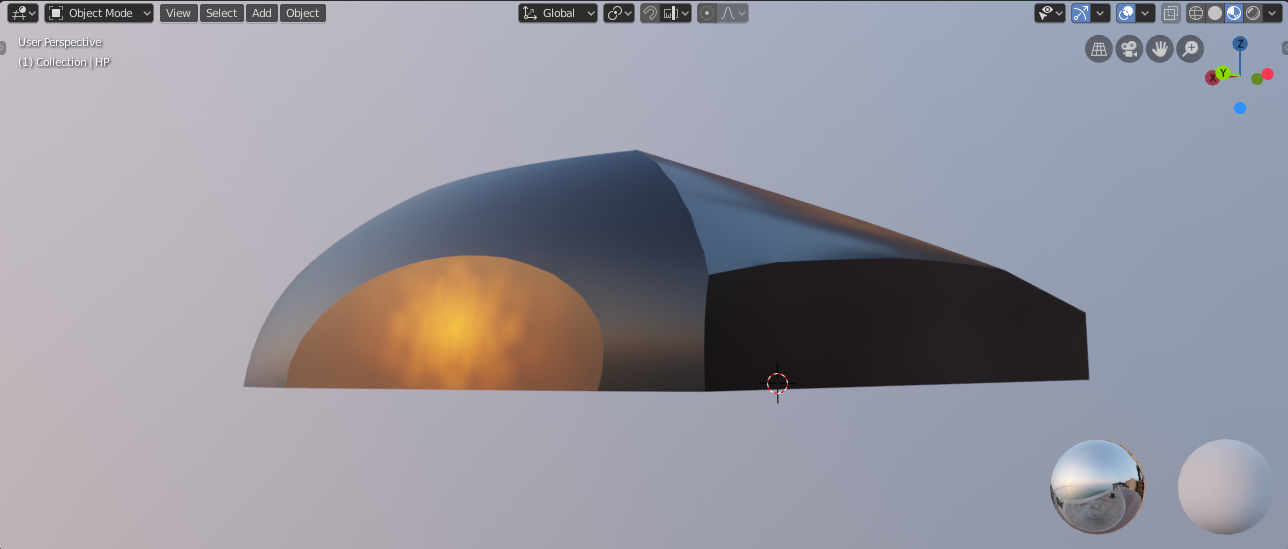

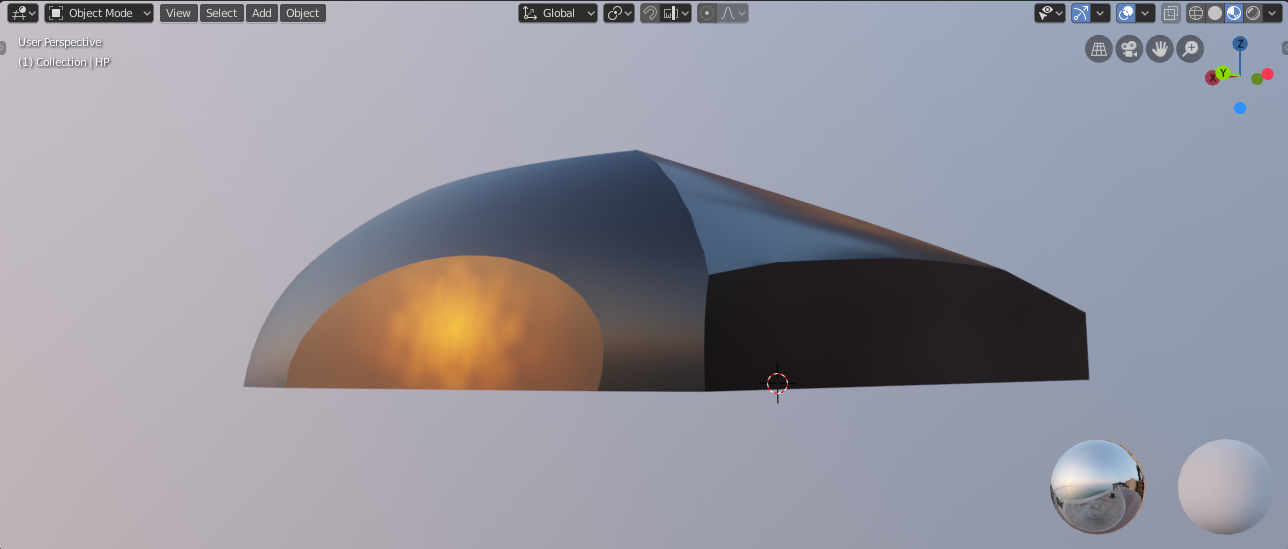

Here is how High-poly looks without bevel shader applied:

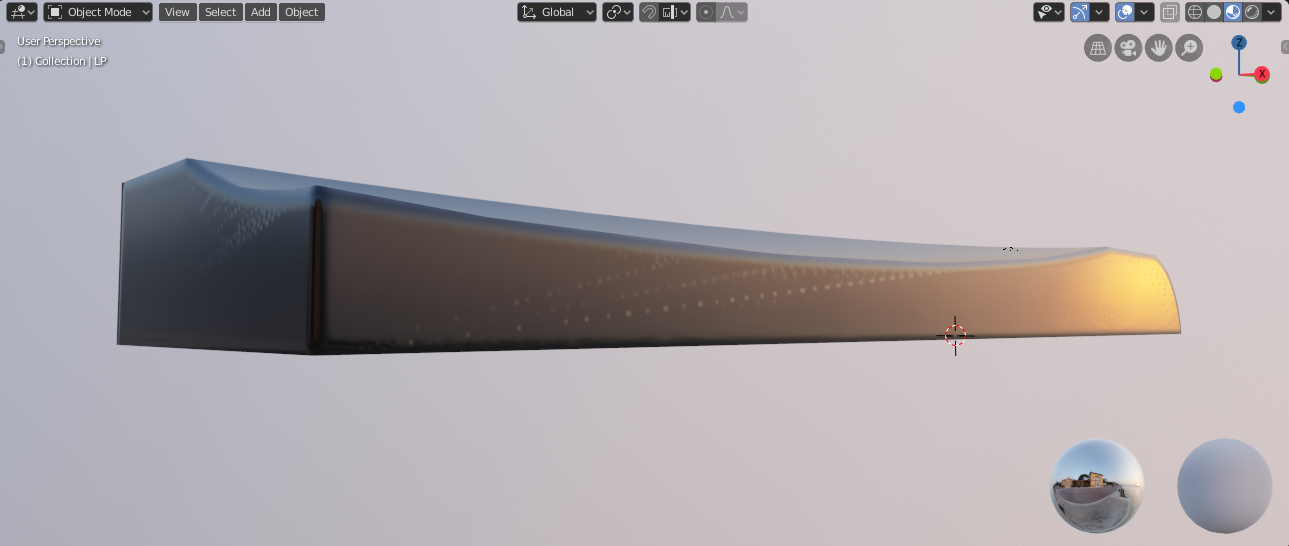

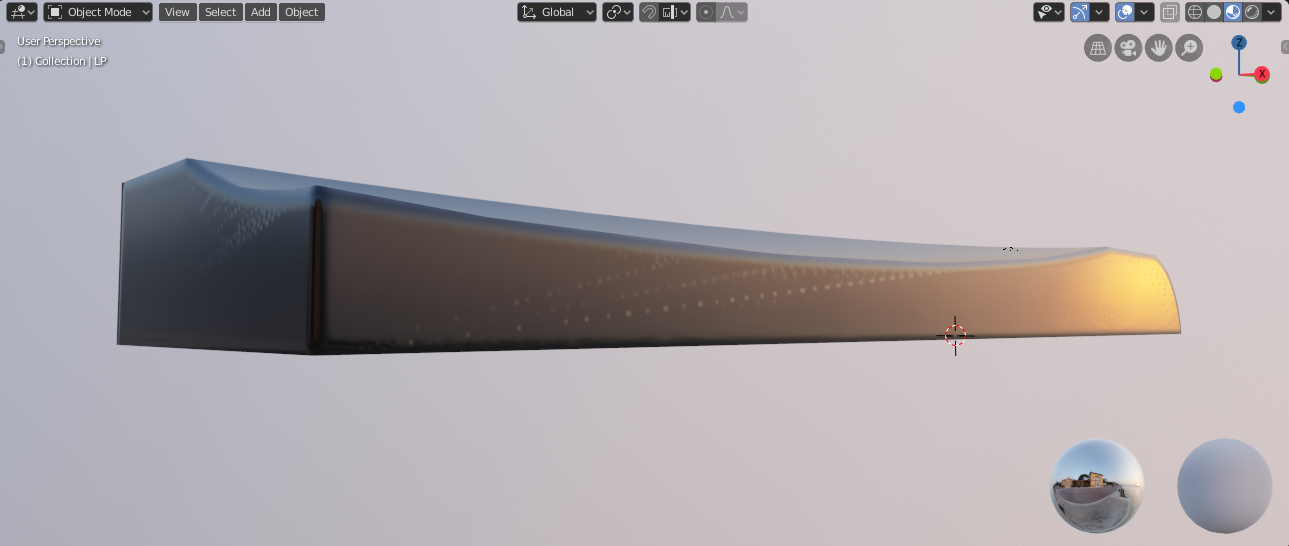

Here is the Low-poly:

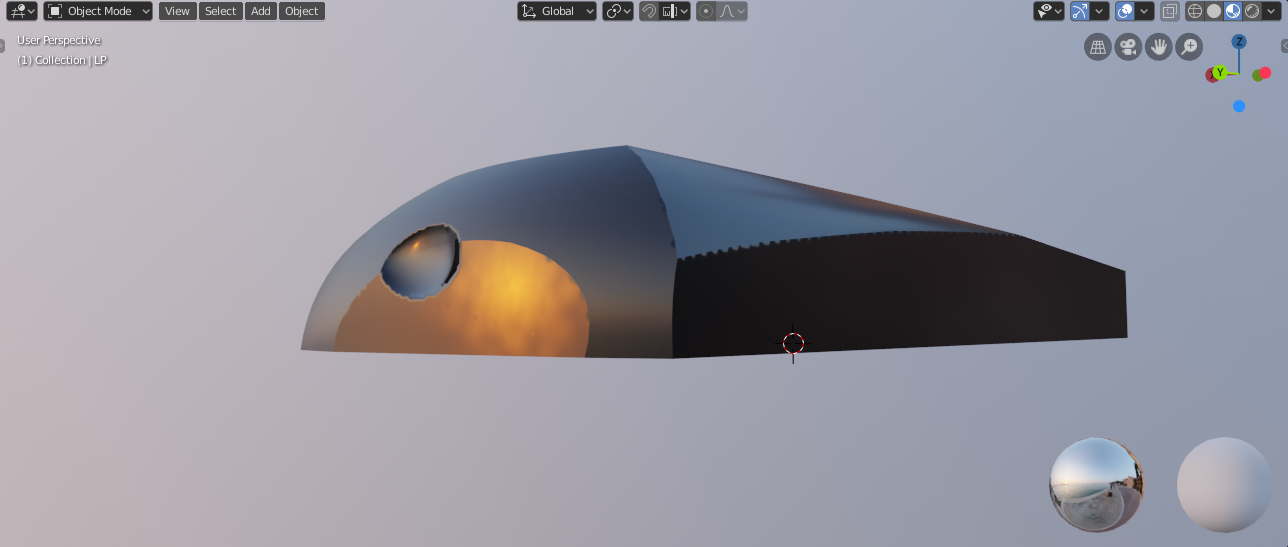

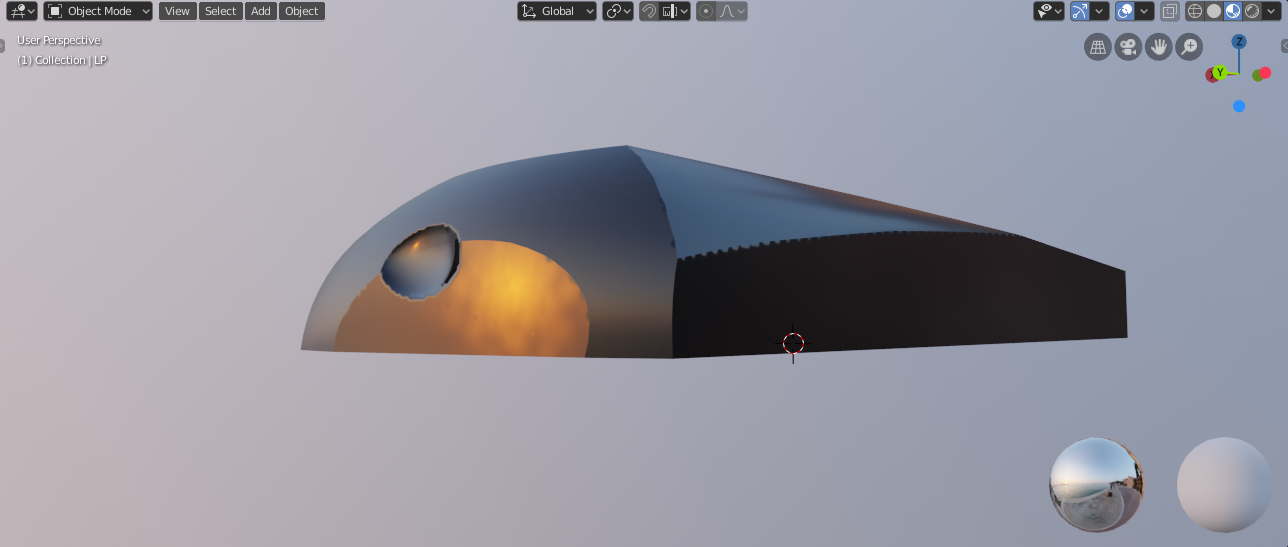

Here is the Low-poly with baked normal map (Baked from HP with bevel shader) applied:

As you can see, there are these random lines showing up for some reason.

Is this a known bug with the bevel shader? Or is there something else that I'm missing?

Also, baking a normal map using the same models but without the bevel shader applied does not produce the aforementioned artifacts.

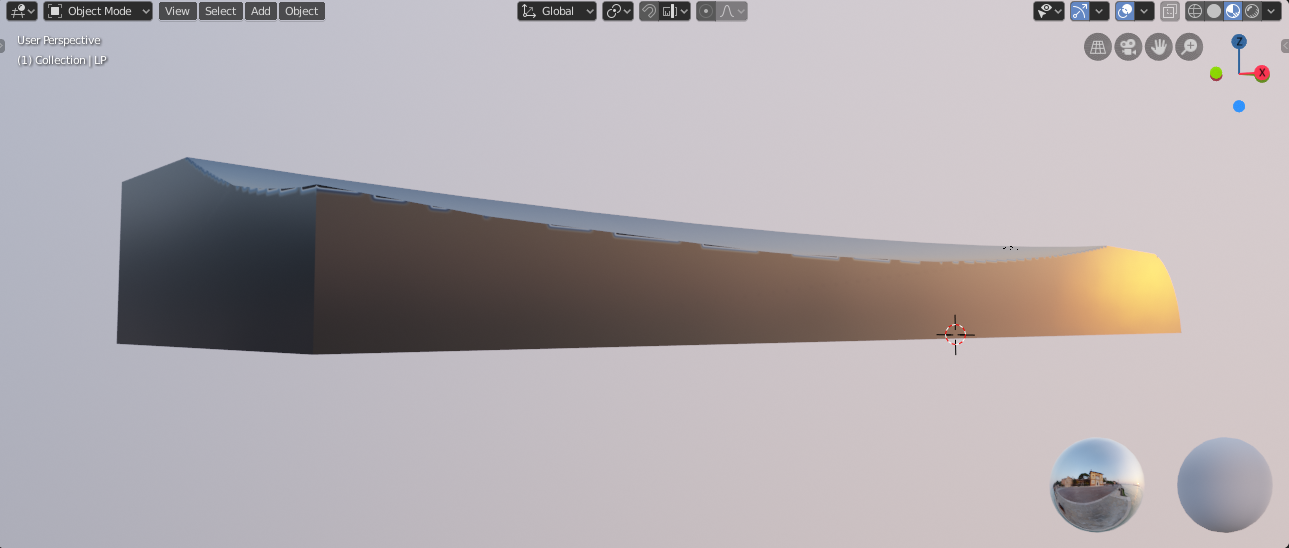

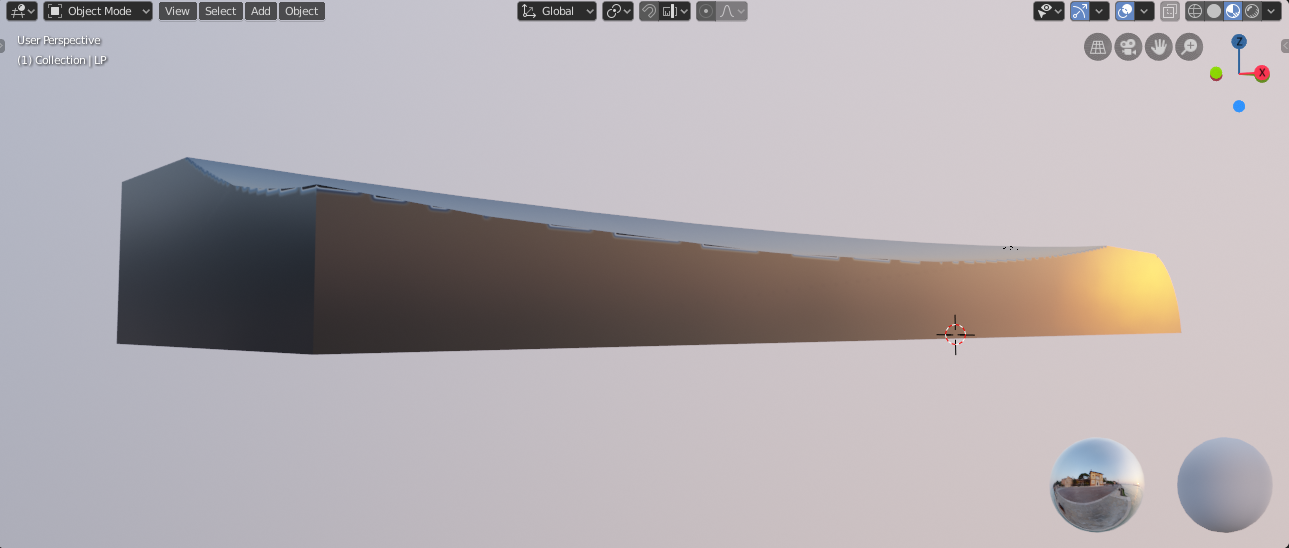

Here is the Low-poly with normal map baked from High-poly without bevel shader applied:

Any help is greatly appreciated.

Replies

In fact, Cycles render does not produce these artifacts when rendering just the High-poly. It seems that only baking is affected.

Here is the Cycles render of the High-poly with bevel shader applied:

P.S. The initial screenshots are just a test bake with the normal map resolution set to 1024x1024px, but for reference I also tried baking a 4k 32-bit float map and the issue was still there.

Seems like a bug to me honestly, or maybe some precision issue?

Still, I'd want to know if anyone found a way to avoid these issues without fiddling with High-poly topology.

It is said weird people from Ireland might know of this

And the low is no different :

I understand the sentiment of "if it looks good in a render, then it should bake okay" but here this is so extreme that it makes the test basically pointless imho.

The artefacts you are getting have basically nothing to do with the round edge shader, and everything to do with the models themselves.

So, viewport renderer as well as Cycles are both able to render individual models without an issue but fail when trying to bake? I don't know, but to me it doesn't sound right...

Another thing, I forgot to mention that I needed the normals to be constructed from smoothing groups, so what I did is right after importing I clicked "Clear Custom Split Normals Data" (under Object Data -> Geometry Data) both for HP and LP models. I'm unsure whether it is the correct way to do so in Blender, but without this it seemed like the whole model was smoothed which obviously resulted in horrible shading. Anything wrong with these steps maybe?

model

Here is what it says:

Note that this is a very expensive shader, and may slow down renders by 20% even if there is a lot of other complexity in the scene. For that reason, we suggest to mainly use this for baking or still frame renders where render time is not as much of an issue. The bevel modifier is a faster option when it works, but sometimes fails on complex or messy geometry.

And even if you *do* plan to bake from such highs to such lows for a pipeline reason (CAD/Nurbs to game engine), going bruteforce into it at this time won't give you the full picture - hence this thread.

It would make much more sense to first try to bake from this messy high down to a clean, manually crafted low. It may not give you the whole be-all-end-all solution to your inquiry but at the very least by doing so you'd be manipulating only one variable at a time (messy high + clean low), as opposed to two variables like you are doing now (messy high + messy low). One variable at a time is the only way to debug an issue.

Without doing this test (clean low) for now you'll have no way to know if the artefacts are caused by either the low or the high since you can't isolate either. Which is precisely why you are not getting anywhere near an answer at this time.

On top of it all, clean lows will end up being a requirement for performance anyways if your goal is an interactive realtime presentation. So by *not* doing a clean low test, you might be setting yourself up for later problems too.

To make myself a bit more clear, yes, these artifacts seem to be related to the High-poly geo. I tried cleaning it up a bit, and while it helps in some areas, it introduces the same artifacts in others. Since the root cause is unclear to me, cleaning up the High-poly seems unreliable if anything. I mean, what changes exactly should I make? Not to mention that extensive cleanup somewhat defeats the purpose of this workflow.

I really appreciate your input, but a "Your geo is bad, hence the problems" kind of response doesn't help much. If you are sure that this particular geo is the problem, could you please elaborate on how exactly it affects the baking process in a way that causes these artifacts?

To sum it up one more time, the Low-poly renders without artifacts on completely flat surfaces, same as the High-poly with the bevel shader applied. If my understanding of general raytraced baking is correct, the expected result here is a flat-colored normal map in these areas. So, to me it seems like either I'm doing something wrong when setting up a bake in Blender or there is something wrong with Blender's baking process itself.
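To make "flat colored" concrete, here is a minimal sketch of the usual tangent-space encoding, where each normal component is remapped from [-1, 1] to an 8-bit channel. The function name is made up for illustration and this is not Blender's baking code, just the common convention:

```python
import math


def encode_tangent_normal(normal):
    """Map a tangent-space normal (x, y, z) to an 8-bit RGB triple."""
    x, y, z = normal
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("normal must be non-zero")
    unit = (x / length, y / length, z / length)
    # Remap each component from [-1, 1] to [0, 255], rounding to nearest.
    return tuple(int((c * 0.5 + 0.5) * 255.0 + 0.5) for c in unit)


# A surface whose baked normal matches the low-poly normal exactly.
flat = encode_tangent_normal((0.0, 0.0, 1.0))

# A slightly tilted normal, like the ones that show up as lines in the bake.
tilted = encode_tangent_normal((0.05, 0.0, 1.0))

print(flat)    # (128, 128, 255)
print(tilted)
```

So on a flat face every texel should come out as the same (128, 128, 255) blue, and any texel that deviates from it means the baked normal is tilted away from the low-poly surface normal.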

Also, I updated Sketchfab scene with a comparison between different bakes, as well as cleaning up low-poly (it makes no difference tho as expected):

For your high : you need to find a way to trick your CAD program so that it doesn't output such extreme triangle fans. The fact that the model renders fine without the bevel shader is *irrelevant* since you know already you want to use it. So you need to either tweak your exporter settings, or maybe even simply slice the object a few times along its length to provide more edges. Or who knows - maybe the round edge shader will get an update addressing this extreme case. But betting on the future is always risky.

For your low : regardless of the results you manage to get out of this specific test, I can guarantee you that relying on this kind of extreme fan geometry will cause issues at some point way beyond the scope of this test. This is the cornerstone of realtime art : clean, carefully crafted geometry is always the winner. I understand the sentiment of wanting to cover all your bases with this test and figuring everything out from it, questioning everything. But at some point it's also important to rely on the good practices developed by veteran artists over years (decades) of working with this kind of stuff. While it wouldn't really matter for still renders, it matters a whole bunch for realtime content.

Also, while admittedly being a good worst case scenario in the case of this simplistic shape, this test is far from covering every possible shape you'll likely end up building and processing - so who knows what other surprises you'll run into with that CAD exporter. Better playing safe imho by at the very least spending the few minutes needed to craft the low carefully.

Thanks for trying this out!

Well, full disclosure, I'm actually using Ben's workflow with ZBrush step replaced with Blender bevel shader approach here

Well, I don't expect it to "just work". As you have noticed yourself, it handles this geometry without any troubles when rendering just High-poly with bevel shader applied. I would not have any questions otherwise.

P.S. Now that you mention it, I'm curious to see MODO results with this same geo...

Let's get this out of the way

As for the High-poly I think you are missing the point here, it renders perfectly with bevel shader applied outside of baking scenario. Also I already mentioned that I tried cleaning it up and while it helps, it does not eliminate the problem completely, at least in my case.

It sure is

My question can be brought down to "Is this behavior of baking in Blender expected? And if not, what can possibly be done to avoid the issues without modification of High-poly geo". I hope this makes it more clear.

Well, I'd consider automatic optimizations like that to be acceptable here since they do not involve extensive manual cleanup, but yeah, the results still have issues. And I believe the reason is that these triangles initially come from the triangulation that is performed before exporting the mesh, so the only optimization here comes from collapsing vertices. Even at an angle setting of 0.1 I can spot a difference in shading, making this solution far from ideal.

When using Max ProBoolean there is an option to "Make quadrilaterals" which significantly reduces these long thin triangles, but that unfortunately still does not eliminate the artifacts completely.
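For anyone who wants to check how many of these long thin triangles an export contains, here is a rough sketch that flags them by aspect ratio (longest edge divided by the height onto that edge). The function names and the threshold of 50 are arbitrary choices for illustration, not something taken from Blender or Max:

```python
import math


def _sub(p, q):
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])


def _cross(u, v):
    return (
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    )


def _norm(u):
    return math.sqrt(u[0] * u[0] + u[1] * u[1] + u[2] * u[2])


def triangle_aspect_ratio(a, b, c):
    """Longest edge divided by the triangle height onto that edge."""
    longest = max(_norm(_sub(b, a)), _norm(_sub(c, b)), _norm(_sub(a, c)))
    area = 0.5 * _norm(_cross(_sub(b, a), _sub(c, a)))
    if area == 0.0:
        return math.inf  # degenerate triangle
    height = 2.0 * area / longest
    return longest / height


def find_slivers(vertices, triangles, threshold=50.0):
    """Return indices of triangles whose aspect ratio exceeds the threshold."""
    return [
        index
        for index, (i, j, k) in enumerate(triangles)
        if triangle_aspect_ratio(vertices[i], vertices[j], vertices[k]) > threshold
    ]


# One well-shaped triangle and one sliver of the kind a CAD fan produces.
vertices = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (0.5, math.sqrt(3.0) / 2.0, 0.0),
    (10.0, 0.0, 0.0),
    (5.0, 0.01, 0.0),
]
triangles = [(0, 1, 2), (0, 3, 4)]
sliver_indices = find_slivers(vertices, triangles)
```

A count like this at least gives a number to compare between export settings, for example before and after enabling "Make quadrilaterals".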

Unfortunately I don't have those anymore

I think I'll try filing a bug report at this point and see where that goes.

Back to the artifacting:

Fun fact, found this statement in docs regarding baking (https://docs.blender.org/manual/en/latest/render/cycles/baking.html):

Regarding the issue, after trying different render settings it seems that lowering global render samples to 1 removes the artifacts completely while also making the result very noisy. The noise can be reduced somewhat by cranking Bevel node samples to 16 and rendering at high resolutions, then downsizing manually. The bevel effect itself still looks a bit noisier up close than what is achieved with default settings, so this solution is still not ideal. Here is what it looks like with normal maps applied (Samples here are Bevel node "samples" property):

Now, considering this, I think cranking Bevel shader samples higher than 16 could potentially be a solution here? However, it is currently capped at 16. I tried using the console to raise it beyond this value, but without success.
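For reference, the settings described above can also be applied from a script. This is a minimal sketch, assuming a node-based material with one or more Bevel nodes; the two function names are made up, while `scene.cycles.samples`, `bl_idname` and the Bevel node's `samples` property are the regular Python properties. The functions only touch the objects passed in, so nothing here imports `bpy` directly:

```python
def find_bevel_nodes(material):
    """Return the Bevel nodes of a node-based material (empty list if none)."""
    if material is None or not material.use_nodes:
        return []
    return [
        node
        for node in material.node_tree.nodes
        if node.bl_idname == "ShaderNodeBevel"
    ]


def apply_low_sample_bake_settings(scene, material,
                                   render_samples=1, bevel_samples=16):
    """Lower the global render samples and raise the Bevel node samples.

    Returns the number of Bevel nodes that were changed.
    """
    scene.cycles.samples = render_samples
    nodes = find_bevel_nodes(material)
    for node in nodes:
        # Blender clamps this to whatever range the node allows.
        node.samples = bevel_samples
    return len(nodes)
```

Inside Blender this would be called as `apply_low_sample_bake_settings(bpy.context.scene, bpy.context.object.active_material)` with the High-poly selected, before starting the bake.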

https://developer.blender.org/diffusion/B/change/master/intern/cycles/kernel/shaders/node_bevel.osl;26f39e6359d1db85509a0ee1077b6d0af122a456

This seems to leverage the actual bevel shader via some weird hack. There is no checking of the input values like in the actual Bevel node, so it is possible to raise the sample count higher than 16.

So, I used this code via a Script node in place of the Bevel node, and it looks like my assumption about Bevel samples was correct: the resulting normal map is perfect, without any artifacts so far, at 1024 Bevel samples. Here is an example (Baked at 1024px, 1 Render sample, 1024 Bevel samples):

This also bakes roughly 2 times faster at 1024px on my machine (vs 128 Render samples, 16 Bevel samples)
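One possible back-of-the-envelope reading of that speedup, assuming each render sample evaluates the Bevel node once and the bake time is dominated by the bevel rays (both are assumptions, not something measured or confirmed from the Cycles source):

```python
def bevel_rays_per_texel(render_samples, bevel_samples):
    """Rough count of bevel rays traced for a single texel of the bake."""
    return render_samples * bevel_samples


# Default-style setup: 128 render samples, Bevel node at its cap of 16.
default_budget = bevel_rays_per_texel(128, 16)

# Workaround: 1 render sample, 1024 bevel samples via the OSL script node.
workaround_budget = bevel_rays_per_texel(1, 1024)

ratio = default_budget / workaround_budget
print(default_budget, workaround_budget, ratio)  # 2048 1024 2.0
```

Under those assumptions the workaround traces about half as many bevel rays per texel, which would be in line with the roughly 2x difference in bake time.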

Another observation is that there is seemingly no loss of quality when lowering bevel samples while baking at higher resolutions (Baking 2048px map with 512 bevel samples produces perfect result) so it should potentially scale well to such use cases. So far this approach looks superior over the default one in every way.

Attaching the .blend if anyone wants to try this out.

@Justo

I hope this post covers your questions

Now ... at the risk of sounding like a broken record, I would still encourage you to test things further on something that looks more like an *actual* prop for your project. The fact that you managed to get rid of these artefacts on this test shape is fantastic, but we are still talking about a 1024+ texture used to capture the surface of an extremely simplistic shape which really doesn't warrant it resource-wise.

Note that I am very interested in this kind of stuff, as I am a strong believer in approaches not relying on manual handling of rounded edges and the likes. But performance as well as practicality could very well dictate that you use a different approach altogether (weighted normals without normalmaps *at all*, for instance).

I guess what I am trying to get at is : while it is great that you worked around the issue revealed by these CAD models, you might want to still keep your guards up (and an open mind) in relation to what comes next for your project. I hope this makes sense !

(IIRC this OSL version of the rounded edge shader has been around for a while, at least .... 2 years or so ? I remember experimenting with it quite a bit, fun stuff.)

Well, I can't say for sure, but from a quick look at the code it might have something to do with the Render samples parameter being used for different purposes when baking compared to usual renders (it is mostly referred to as "AA" in a baking context). In any case, I reported this as a bug, so maybe the devs will see what is really going on there.

Thanks

The soft limit is still 16 samples, but if you manually type the value you can use up to 128 samples. No need to use OSL shaders so it is possible to render on the GPU.