The BRAWL² Tournament Challenge has been announced!

It starts May 12, and ends Oct 17. Let's see what you got!

https://polycount.com/discussion/237047/the-brawl²-tournament

Re-normalisation - 1 map, 4 applications and 4 results. Which is correct?

Hey all,

I was re-normalising a normal map today and noticed that you can get different results depending on which program you use to normalise with.

I rendered the base map in Blender 2.57b and then re-normalised copies of it in Photoshop with the xNormal and Nvidia plugins. I also loaded the map in CrazyBump and exported it without making any changes. All the results are different.

It was my understanding that a map should be normalised automatically when rendered, so any re-normalisation shouldn't change anything, because all the normals already have a uniform length of 1.

If re-normalisation is just a mathematical calculation that gives every normal a length of 1, then they can't all be correct, can they?
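To make the question concrete, here is a minimal Python sketch of what re-normalising a single pixel involves. It assumes the common 8-bit encoding where a channel value c maps to c / 255 * 2 - 1; the helper names are hypothetical and not taken from Blender, xNormal, CrazyBump or the Nvidia plugin. Because the result has to be rounded back to 8 bits, even a freshly re-normalised pixel is usually only approximately unit length, so tools that decode or round slightly differently can disagree by a step.

```python
import math

def decode(rgb):
    """Map 8-bit channels [0, 255] to components in [-1, 1] (assumed c / 255 * 2 - 1 convention)."""
    return tuple(c / 255.0 * 2.0 - 1.0 for c in rgb)

def encode(n):
    """Map components in [-1, 1] back to 8-bit channels, rounding to the nearest integer."""
    return tuple(max(0, min(255, round((c + 1.0) * 0.5 * 255.0))) for c in n)

def normalize(v):
    """Scale a 3-component vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def renormalize_pixel(rgb):
    """Decode, normalise and re-encode one pixel (hypothetical helper)."""
    return encode(normalize(decode(rgb)))

def decoded_length(rgb):
    """Length of the vector a pixel decodes to; ideally 1.0 for a normal map."""
    return math.sqrt(sum(c * c for c in decode(rgb)))

# An arbitrary, deliberately non-unit pixel.
fixed = renormalize_pixel((200, 100, 180))
print(fixed, decoded_length(fixed))
```

For this made-up input the re-encoded pixel decodes to a length close to, but not exactly, 1.0, which is the 8-bit rounding error showing through.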

If they all give different results, then surely people can't trust them until they know that "Program X" is the most accurate?

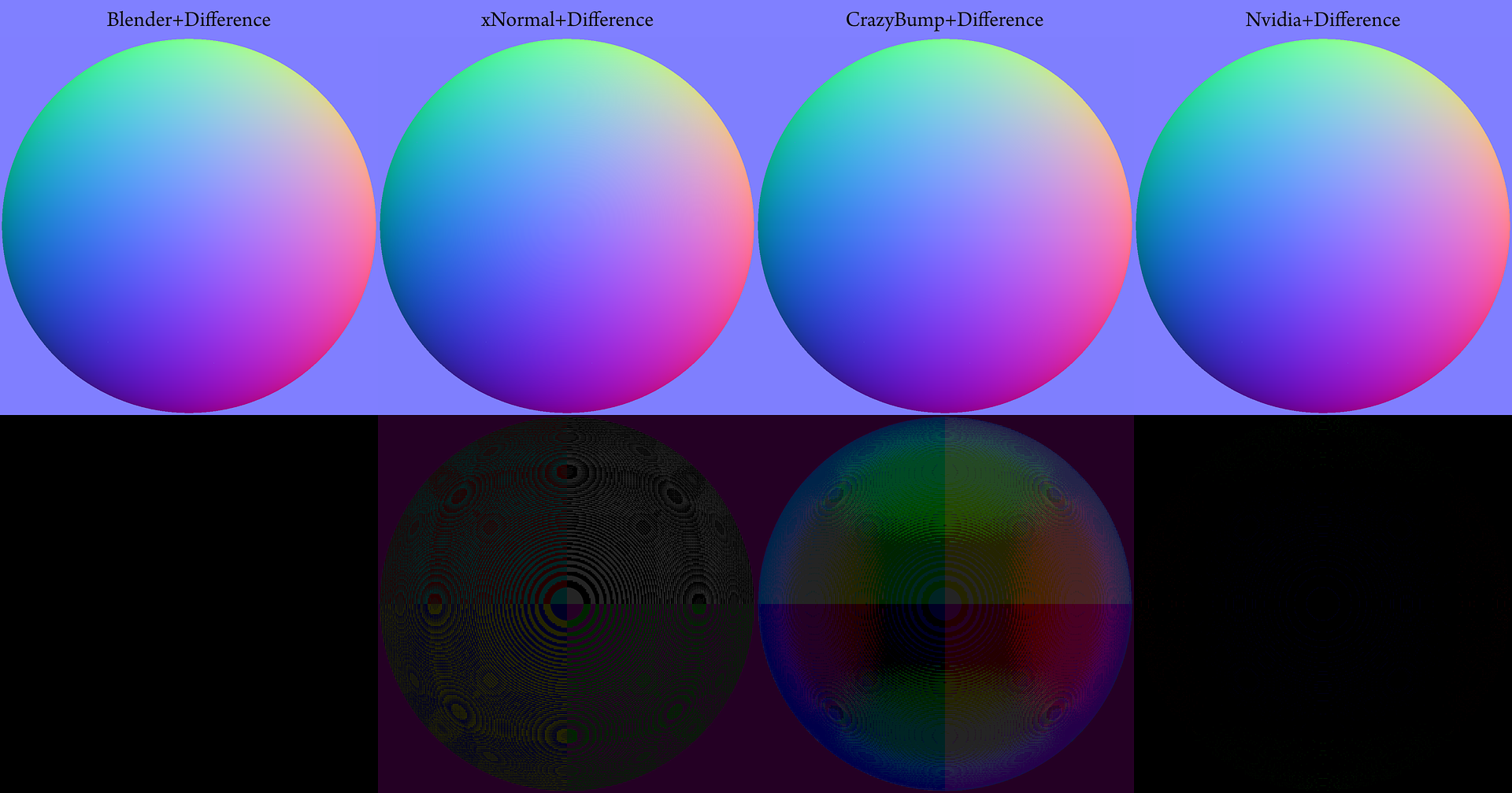

Here is an image I made to show the differences between Blender, the xNormal PS plugin, CrazyBump and the Nvidia PS plugin. I used a Difference blend to overlay each normalised map on a copy of the original Blender bake, then applied a Levels adjustment to make the differences easier to see.
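The comparison described above boils down to a per-channel absolute difference followed by a brightness boost. A rough Python sketch, assuming each image is a list of (R, G, B) tuples of 8-bit values; `levels` here only moves the input white point, which is a simplification of what Photoshop's Levels dialog can do, and the pixel values are made up.

```python
def difference(image_a, image_b):
    """Per-channel |a - b|, like a Difference blend of two same-sized images."""
    if len(image_a) != len(image_b):
        raise ValueError("images must have the same number of pixels")
    return [tuple(abs(x - y) for x, y in zip(pa, pb)) for pa, pb in zip(image_a, image_b)]

def levels(image, white_point):
    """Stretch [0, white_point] to [0, 255] and clamp, so small differences become visible."""
    return [tuple(min(255, round(c * 255 / white_point)) for c in p) for p in image]

# Hypothetical pixels: an original bake and a re-normalised copy.
original = [(128, 128, 255), (200, 100, 180)]
renormalised = [(128, 128, 255), (226, 90, 199)]
print(levels(difference(original, renormalised), white_point=8))
```

A low white point is what turns a 1-step difference into something the eye can actually see.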

I know we can all use our judgement to choose the most pleasing result, but doesn't that defeat the point of re-normalisation somewhat?

Any ideas?

Replies

What is also a little curious is that the tangent basis of Blender 2.57b is now identical to the tangent basis of xNormal 3.17.5, yet xNormal's plugin still changes the map's values when re-normalising.

http://wiki.blender.org/index.php/Dev:Shading/Tangent_Space_Normal_Maps

If both programs are using an identical tangent basis, then they should have identical results, even when re-normalised.

I'm wondering if Jogshy updated the xNormal plugins to reflect the new tangent space (if it needed updating, that is :P)

On the other hand, I use single-precision floating-point numbers, but other programs may use double precision (I don't like double precision because it wastes memory, but it may be useful if you need extra precision).
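To get a feel for how small the single- vs double-precision gap is, the sketch below normalises the same vector in double precision (Python's native float) and in emulated single precision, rounding through `struct`'s 32-bit "f" format after every operation. The emulation only approximates real float32 hardware arithmetic, and the test vector is arbitrary.

```python
import math
import struct

def f32(x):
    """Round a Python float (IEEE 754 double) to the nearest single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]

def normalize_f64(v):
    """Normalise in double precision."""
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]

def normalize_f32(v):
    """Normalise while rounding every intermediate result to single precision."""
    v = [f32(c) for c in v]
    squared = 0.0
    for c in v:
        squared = f32(squared + f32(c * c))
    length = f32(math.sqrt(squared))
    return [f32(c / length) for c in v]

v = [0.3, -0.2, 0.9]
error = max(abs(a - b) for a, b in zip(normalize_f32(v), normalize_f64(v)))
print(error)
```

The error stays well under 1e-6, far below the 1/255 step of an 8-bit channel, so on its own it only changes the stored pixel when a value sits right on a rounding boundary.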

Another possible cause for the differences is the "flat normal" value: some say it's [127,127,255], while others prefer [128,128,255].
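The 127 vs 128 question comes from the fact that 8 bits give 256 values, so no integer sits exactly halfway between 0 and 255. This Python sketch compares two decoding conventions (both assumptions for illustration, not confirmed to be what any particular tool uses) and measures how far each "flat" colour tilts away from straight up (+Z).

```python
import math

def decode_unsigned(rgb):
    """Convention A (assumed): c / 255 * 2 - 1, so no integer maps to exactly 0."""
    return tuple(c / 255 * 2 - 1 for c in rgb)

def decode_offset128(rgb):
    """Convention B (assumed): (c - 128) / 127, so 128 maps to exactly 0 and 255 to +1."""
    return tuple((c - 128) / 127 for c in rgb)

def tilt_degrees(n):
    """Angle between the decoded vector and the +Z axis."""
    x, y, z = n
    return math.degrees(math.atan2(math.hypot(x, y), z))

for flat in ((127, 127, 255), (128, 128, 255)):
    print(flat, tilt_degrees(decode_unsigned(flat)), tilt_degrees(decode_offset128(flat)))
```

With the /255 convention both candidates tilt by roughly 0.32 degrees, in opposite directions, which is the kind of small, consistent offset that could show up in a difference overlay.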

But the story doesn't stop there: the pixel shader used to paint that sphere matters too. It can use an HLSL/GLSL fast_normalize()/half instruction or, alternatively, the normalize()/float function. It gets even more complicated: old cards (ps<2.0) used fixed-point math to compute the colors, so a normalize() instruction on them won't return the same result as on a ps>=2.0 card.
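The half vs float point can be illustrated the same way. This sketch emulates half-precision arithmetic by rounding through `struct`'s 16-bit "e" format after each step. It models only the reduced precision, not any approximate reciprocal-square-root trick a real fast normalise instruction might use, and the vector is arbitrary.

```python
import math
import struct

def f16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]

def normalize_f16(v):
    """Normalise while rounding every intermediate result to half precision."""
    v = [f16(c) for c in v]
    squared = 0.0
    for c in v:
        squared = f16(squared + f16(c * c))
    length = f16(math.sqrt(squared))
    return [f16(c / length) for c in v]

def normalize_f64(v):
    """Reference normalisation in double precision."""
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]

v = [0.3, -0.2, 0.9]
error = max(abs(a - b) for a, b in zip(normalize_f16(v), normalize_f64(v)))
print(error, "vs one 8-bit step of", 2 / 255)
```

Here the half-precision error is a few orders of magnitude larger than in single precision, though still smaller than one 8-bit step.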

Graphics driver settings (program profiles, or the texture mipmap quality/filtering/AA sampling/compression settings) may alter the data too.

The framebuffer's format may also affect the result: some GPUs use X8R8G8B8, while others use X2R10G10B10.
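The effect of framebuffer depth can be sketched by storing a component as an unsigned normalised integer with 8 or 10 bits and reading it back. This ignores the X/alpha bits and any gamma handling, and `quantize_unorm` is a hypothetical helper.

```python
def quantize_unorm(x, bits):
    """Store a component in [-1, 1] as a `bits`-bit unsigned normalised integer, then decode it."""
    max_code = (1 << bits) - 1
    code = round((x + 1.0) * 0.5 * max_code)
    return code / max_code * 2.0 - 1.0

for bits in (8, 10):
    stored = quantize_unorm(0.3, bits)
    print(bits, stored, abs(stored - 0.3))
```

The worst-case round-trip error is half a step: 1/255 for 8 bits versus 1/1023 for 10 bits, so the same shader output can land on different stored values depending on the format.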

Conclusion: each GPU and program works differently (even with exactly the same input tangent basis), but it shouldn't really matter: the differences are too small to cause a real headache. Just assume there's no equality in the floating-point world, and always allow for a small error (aka epsilon).
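In practice, "allow for a small error" means comparing with a tolerance instead of `==`. A minimal Python sketch, with tolerances picked arbitrarily for illustration: one helper compares float vectors with `math.isclose`, the other treats two 8-bit maps as matching if no channel differs by more than one step.

```python
import math

def vectors_close(a, b, eps=1e-4):
    """Component-wise comparison with an absolute tolerance instead of exact equality."""
    return len(a) == len(b) and all(
        math.isclose(x, y, rel_tol=0.0, abs_tol=eps) for x, y in zip(a, b)
    )

def maps_match(map_a, map_b, tolerance=1):
    """True if two 8-bit maps (lists of RGB tuples) differ by at most `tolerance` per channel."""
    if len(map_a) != len(map_b):
        return False
    return all(abs(x - y) <= tolerance for pa, pb in zip(map_a, map_b) for x, y in zip(pa, pb))

print(0.1 + 0.2 == 0.3)                   # exact comparison fails
print(vectors_close([0.1 + 0.2], [0.3]))  # tolerant comparison succeeds
print(maps_match([(128, 128, 255)], [(127, 128, 255)]))
```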

Lordy, aren't those a nightmare...

@Jogshy,

That makes sense...Thanks for clearing everything up.

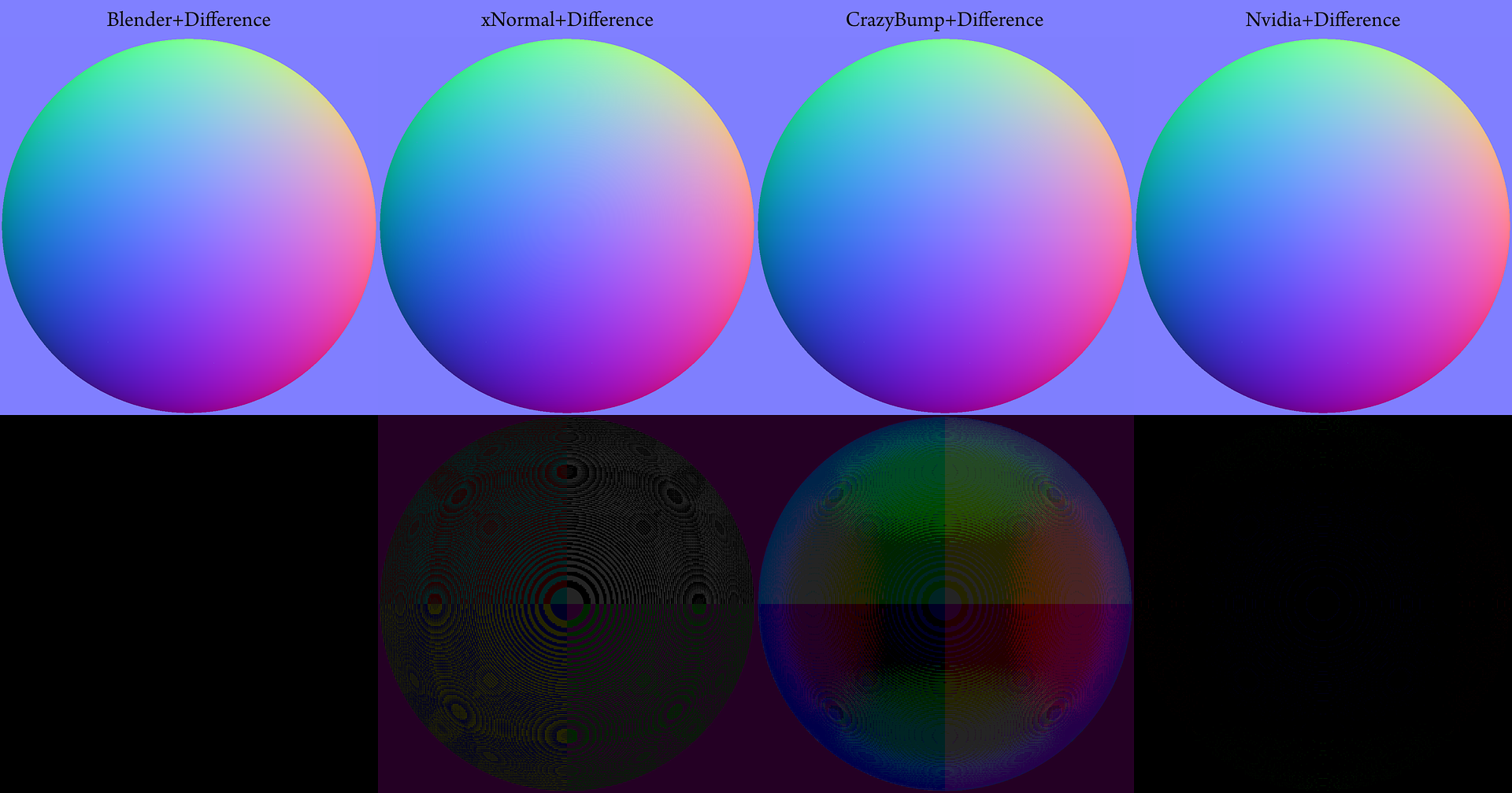

The re-normalisation differences are actually visible when I use the image above in Marmoset, but I guess there isn't much that can be done about that (unless you can think of a solution?).

See the pic below for what I mean.

(Ignore the odd Y-shaped artifacts on the top right of each sphere... it looks like it's a problem with Marmoset, which I'm about to report.)

Thanks again!

Thanks for the replies, guys.

The maps are applied to a flat plane with planar UVs, so I think Eric must be spot on in thinking that it's something to do with the cubemaps (they also move when the sky is rotated).

I posted about it on the Marmoset thread, so hopefully the 8ml guys will have a concrete idea.