Free Maya Shader: Easy normal map edition

Hi everyone

Here's a little something I've been working on. As usual, I'd like you polycounters to test it out.

There are numerous tools out there, in various packages, that let you transfer maps from one UV set to another. Unfortunately, it never works with normal maps, and you end up rebaking most of the time.

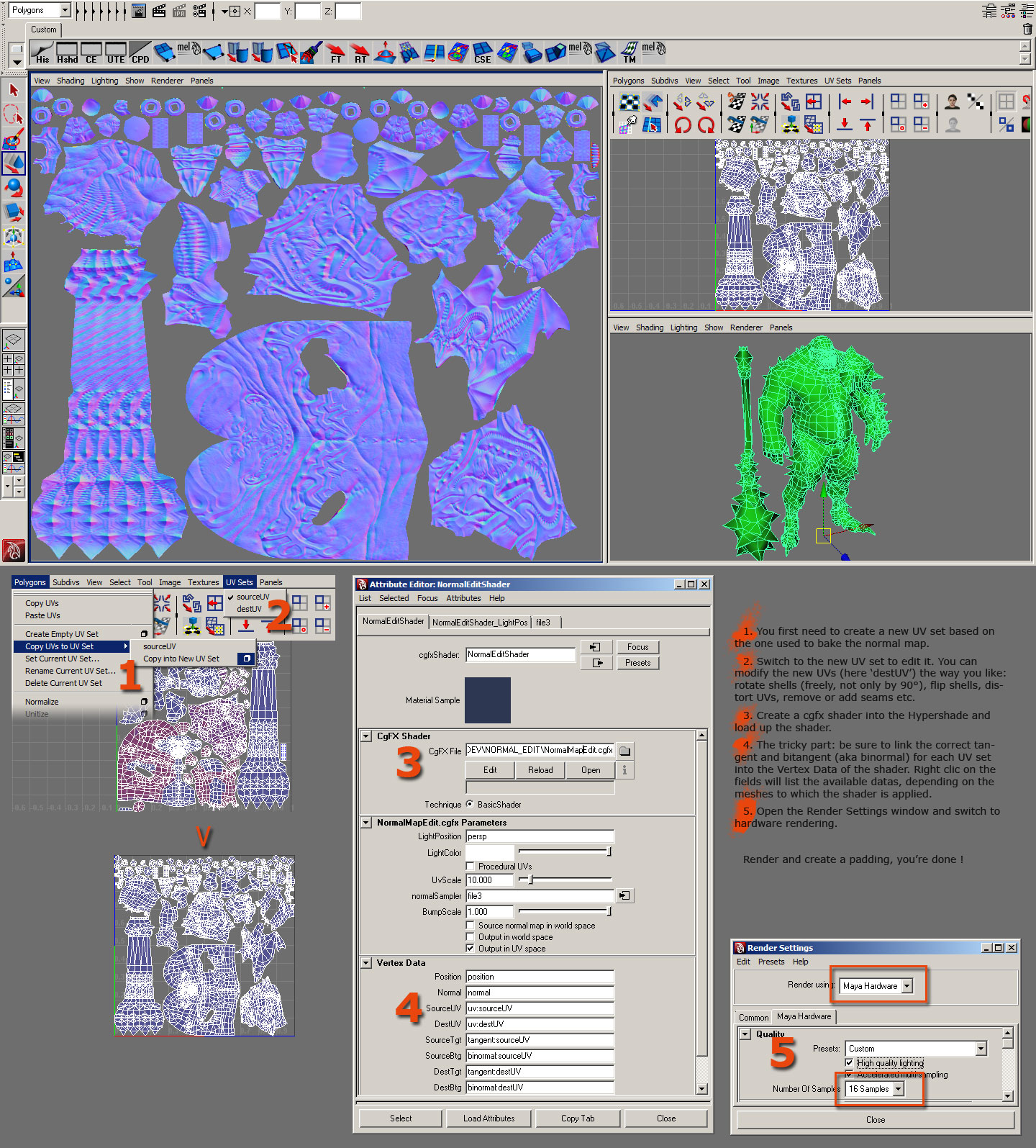

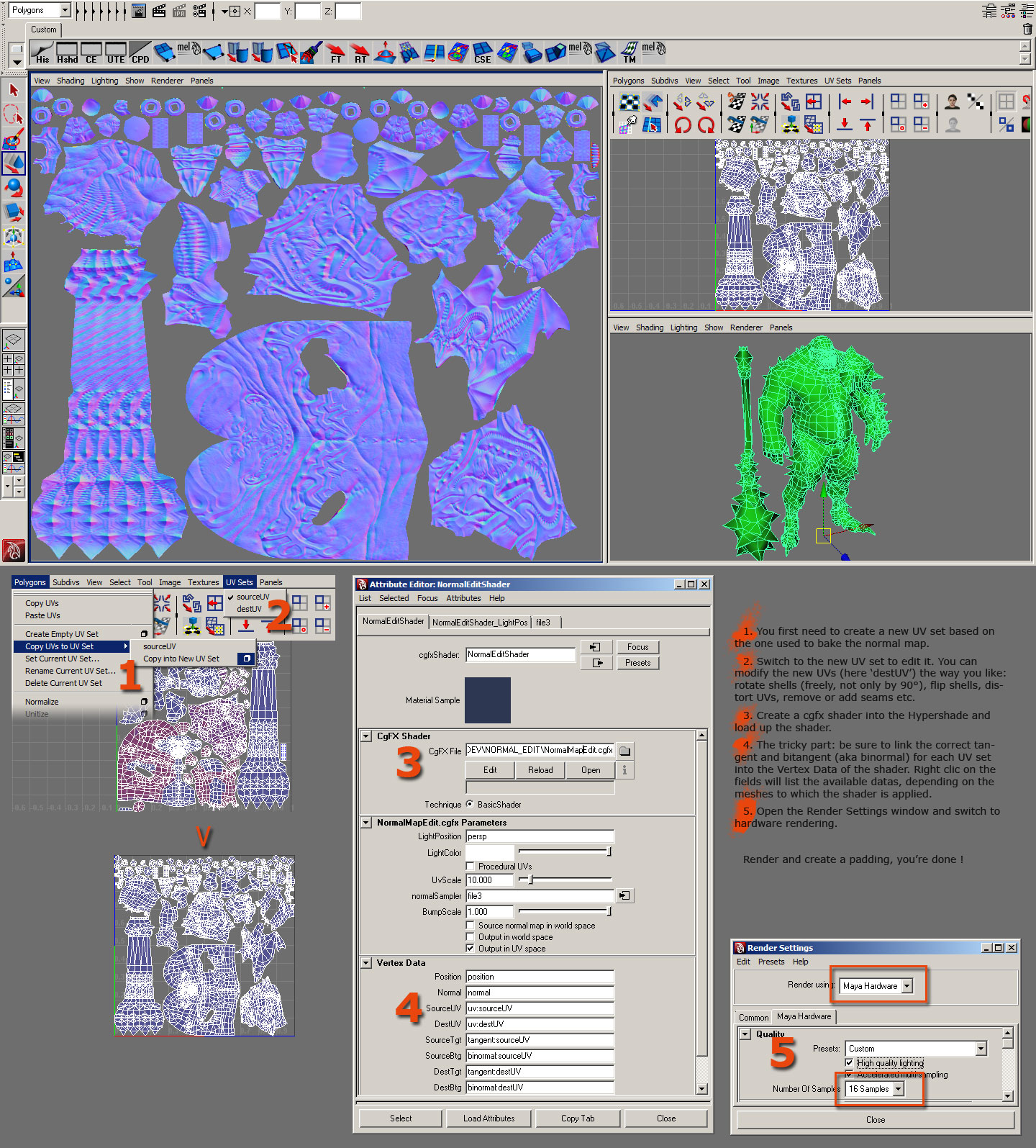

I developed a simple technique that lets you, using just your lowpoly and a normal map, convert that normal map to or from world space, and rotate and distort its UVs, all in realtime.
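The shader code isn't reproduced here, but the per-texel idea can be sketched on the CPU. You decode a texel, expand it from the source tangent frame into world space, project it into the destination frame, and re-encode it. The Python below is a minimal sketch that assumes orthonormal frames (T, B, N) and channels already in [0, 1]. The function names are hypothetical, and the real shader presumably also renders in destination UV space while sampling at the source UVs, which this does not show.

```python
import math

# Hypothetical CPU-side sketch of the per-texel math, not the shader itself.


def decode(rgb):
    """Map texel channels in [0, 1] to a vector in [-1, 1]."""
    return tuple(2.0 * c - 1.0 for c in rgb)


def encode(v):
    """Map a vector in [-1, 1] back to texel channels in [0, 1]."""
    return tuple(0.5 * c + 0.5 for c in v)


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def tangent_to_world(n_ts, t, b, n):
    """Expand tangent-space components along the frame (T, B, N)."""
    return normalize(tuple(n_ts[0] * t[i] + n_ts[1] * b[i] + n_ts[2] * n[i]
                           for i in range(3)))


def world_to_tangent(n_ws, t, b, n):
    """Project a world-space normal onto an orthonormal frame (T, B, N)."""
    return normalize((dot(n_ws, t), dot(n_ws, b), dot(n_ws, n)))


def transfer_texel(rgb, src_frame, dst_frame):
    """Re-express one texel baked against src_frame in dst_frame."""
    n_ws = tangent_to_world(decode(rgb), *src_frame)
    return encode(world_to_tangent(n_ws, *dst_frame))


# Destination frame: the shell's tangent now points along the old binormal.
src = ((1, 0, 0), (0, 1, 0), (0, 0, 1))
dst = ((0, 1, 0), (-1, 0, 0), (0, 0, 1))
print(transfer_texel((1.0, 0.5, 0.5), src, dst))  # (0.5, 0.0, 0.5)
```

The texel's colour changes even though the world-space normal stays the same. This is why a plain colour-map transfer breaks normal maps.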

You can download the shader here: http://www.mentalwarp.com/~brice/download/NormalMapEdit.cgfx

And have a look at this short tutorial:

The main problem is that the output has no padding, but you can easily recreate it using common Photoshop scripts (xNormal has one, right?) or even the warp image itself in Maya.
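Edge padding is commonly done by dilation: each empty texel next to a baked one is filled from its covered neighbours, and this repeats once per pass. Here is a minimal Python sketch of a 4-neighbour dilation. `dilate` and its arguments are hypothetical, not what the Photoshop scripts or Maya do internally. It also averages encoded colours without renormalising, which is a simplification for normal maps.

```python
def dilate(pixels, mask, iterations=1):
    """Grow covered texels into empty 4-neighbours, one ring per iteration.

    pixels: 2D list of RGB tuples; mask: 2D list of bools, True where the
    bake wrote a texel. Returns new (pixels, mask); the inputs are not modified.
    """
    h, w = len(pixels), len(pixels[0])
    pixels = [row[:] for row in pixels]
    mask = [row[:] for row in mask]
    for _ in range(iterations):
        new_pixels = [row[:] for row in pixels]
        new_mask = [row[:] for row in mask]
        for y in range(h):
            for x in range(w):
                if mask[y][x]:
                    continue
                # Collect covered neighbours from the previous pass only.
                neighbours = [pixels[ny][nx]
                              for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                              if 0 <= ny < h and 0 <= nx < w and mask[ny][nx]]
                if neighbours:
                    # Average each channel of the covered neighbours.
                    new_pixels[y][x] = tuple(sum(ch) / len(neighbours)
                                             for ch in zip(*neighbours))
                    new_mask[y][x] = True
        pixels, mask = new_pixels, new_mask
    return pixels, mask
```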

If you only need to convert a normal map from world space to tangent space, or the opposite, you just need to link the same vertex data twice.
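When the same vertex data is linked twice, the source and destination frames are identical. All that remains is the change of space within one frame. This minimal Python sketch assumes a per-vertex tangent and normal and the common convention B = handedness × cross(N, T), as in MikkTSpace. Maya's own binormal convention may differ, and all names here are hypothetical.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def make_frame(tangent, normal, handedness=1.0):
    """Orthonormal (T, B, N): Gram-Schmidt the tangent against the normal,
    then B = handedness * cross(N, T) (an assumed convention)."""
    n = normalize(normal)
    d = dot(tangent, n)
    t = normalize(tuple(tangent[i] - d * n[i] for i in range(3)))
    b = tuple(handedness * c for c in cross(n, t))
    return t, b, n


def world_to_tangent(n_ws, frame):
    t, b, n = frame
    return (dot(n_ws, t), dot(n_ws, b), dot(n_ws, n))


def tangent_to_world(n_ts, frame):
    t, b, n = frame
    return tuple(n_ts[0] * t[i] + n_ts[1] * b[i] + n_ts[2] * n[i] for i in range(3))


# Round trip through one frame: back equals n_ws up to rounding.
frame = make_frame((1.0, 0.2, 0.0), (0.0, 0.0, 1.0))
n_ws = normalize((0.3, -0.4, 0.8))
n_ts = world_to_tangent(n_ws, frame)
back = tangent_to_world(n_ts, frame)
```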

Alternatively, the shader lets you use the normal map without any initial UVs and generate them on the fly with a tri-planar projection. This is especially useful for baking a tiling pattern all over a complex shape.
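A tri-planar projection generates UVs from world position. Each axis-aligned plane (YZ, XZ, XY) gives a planar UV, and the surface normal decides how much each projection contributes. The sketch below shows only that UV generation and weighting for a scalar lookup. The `sharpness` exponent and the function names are hypothetical. Blending actual normal-map vectors would also require reorienting each projection's normal into world space, which is not shown.

```python
def triplanar_weights(normal, sharpness=4.0):
    """Blend weights for the X, Y and Z projections from a world-space normal."""
    w = [abs(c) ** sharpness for c in normal]
    total = sum(w)
    return tuple(c / total for c in w)


def triplanar_uvs(position, scale=1.0):
    """Planar UVs for the projections along X (YZ plane), Y (XZ) and Z (XY)."""
    x, y, z = (c * scale for c in position)
    return (y, z), (x, z), (x, y)


def triplanar_sample(sample, position, normal, scale=1.0, sharpness=4.0):
    """Blend three planar lookups of `sample`, any function uv -> value."""
    weights = triplanar_weights(normal, sharpness)
    uvs = triplanar_uvs(position, scale)
    return sum(w * sample(uv) for w, uv in zip(weights, uvs))
```

A higher `sharpness` narrows the transition zones between projections, which reduces the smearing where two planes overlap.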

I used Dmitriy Parkin's awesome model for DWIII (thanks to him!). You can download it from his website:

http://www.parkparkin.com/personal_art/imrod.htm

Enjoy! And feedback, please (only tested on Maya 2008, 9800 GTX+).

Replies

- ...and would this .cgfx work in Max?

So yes, basically. I could transfer regular color maps as well, but there are a bunch of tools that do that pretty well already. The trick with normal maps is that, unlike color maps, they depend on the orientation of the UV shell layout, and that layout is something we'd often like to change without going through the whole baking process again.
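Here is how the orientation dependence works. If a UV shell is rotated counterclockwise by an angle θ, its tangent and binormal rotate with it. The stored X/Y components must then be rotated by the same θ for the world-space normal to stay unchanged. This minimal Python sketch assumes an orthonormal tangent frame and a green channel pointing along +V. With a flipped green channel the sign of the rotation changes. The texel also has to be resampled at its new UV position. The function names are hypothetical.

```python
import math


def rotate_uv(uv, angle, pivot=(0.5, 0.5)):
    """Rotate a UV coordinate counterclockwise by `angle` radians about `pivot`."""
    c, s = math.cos(angle), math.sin(angle)
    du, dv = uv[0] - pivot[0], uv[1] - pivot[1]
    return (pivot[0] + c * du - s * dv, pivot[1] + s * du + c * dv)


def rotate_normal_xy(n_ts, angle):
    """Rotate tangent-space X/Y by the same angle as the UV shell so the
    world-space normal is unchanged (orthonormal frame, +V green assumed)."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = n_ts
    return (c * x - s * y, s * x + c * y, z)
```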

You might not always have that luxury either, like when porting old assets or when an outsourcing job goes horribly wrong ^^

The trick with Maya is that hardware rendering is done on the GPU. It lets you render super-sampled CgFX exactly as it would look in the viewport, at a fixed size (1024, etc.). If Max can render HLSL offline, or precisely fix the size of the viewport, then it should not be a problem. I think you could easily create the same shader in ShaderFX: v3 lets you write vertex shaders, which is all you need to project the mesh into UV space.
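To project the mesh into UV space, the vertex shader outputs each vertex at its UV coordinate instead of its transformed position, something like `float4(uv * 2 - 1, 0, 1)` in Cg. Below is the same arithmetic in Python. It is a sketch that assumes OpenGL-style clip space ([-1, 1] on both axes) and V = 1 at the top row of the rendered image. `uv_to_clip` and `clip_to_pixel` are hypothetical names.

```python
def uv_to_clip(uv):
    """Place a vertex at its UV coordinate in clip space: [0, 1]^2 -> [-1, 1]^2."""
    u, v = uv
    return (2.0 * u - 1.0, 2.0 * v - 1.0, 0.0, 1.0)


def clip_to_pixel(clip, size):
    """Viewport transform for a square `size` x `size` target, rows counted from the top."""
    x, y = clip[0] / clip[3], clip[1] / clip[3]
    return ((x * 0.5 + 0.5) * size, (0.5 - y * 0.5) * size)
```

Rasterising the triangles at these positions covers exactly the UV layout, so each pixel of a 1024 render corresponds to one texel of a 1024 map.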

cheers

I was just looking into baking a map from a real-time shader.

I wanted to blend multiple normal maps with vertex colors and bake the final result. How exactly are you rendering the UV space with hardware rendering?

http://img.photobucket.com/albums/v242/pior_ubb/normalsedit-shader_error.jpg

Will try it tomorrow on more up-to-date hardware and software!

@arshlevon

Funny, you had the same idea! I initially wrote the shader for the auto unwrap, on a job with variations of rocks. I used the vertex color to control the bump scale and the blend between two tiles. Would that be interesting to publish? Maybe with more maps available it would be nicer.
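The post doesn't say which vertex-color channels drive what, so the mapping here is an assumption: red blends tile A into tile B, and green scales the bump. The sketch uses a simple linear blend followed by renormalisation. That is one common choice, not necessarily the author's; other normal-blending methods exist. Function names are hypothetical.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def lerp(a, b, t):
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def scale_bump(n_ts, strength):
    """Scale tangent-space X/Y: < 1 flattens the bump, > 1 exaggerates it."""
    x, y, z = n_ts
    return normalize((x * strength, y * strength, z))


def blend_tiles(n_a, n_b, vertex_color):
    """Hypothetical channel mapping: red blends tile A -> B, green scales the bump."""
    red, green = vertex_color[0], vertex_color[1]
    mixed = normalize(lerp(n_a, n_b, red))
    return scale_bump(mixed, green)
```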

@pior same problem as here:

http://boards.polycount.net/showthread.php?p=898414#post898414

with:

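```hlsl
// Vertex input semantics (the struct name is illustrative)
struct VertexInput
{
    float4 Position  : POSITION;
    float3 Normal    : NORMAL;
    float2 SourceUV  : TEXCOORD0;  // UV set the normal map was baked with
    float2 DestUV    : TEXCOORD1;  // UV set to transfer into
    float3 SourceTgt : TEXCOORD2;  // source tangent
    float3 SourceBtg : TEXCOORD3;  // source binormal
    float3 DestTgt   : TEXCOORD4;  // destination tangent
    float3 DestBtg   : TEXCOORD5;  // destination binormal
};
```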