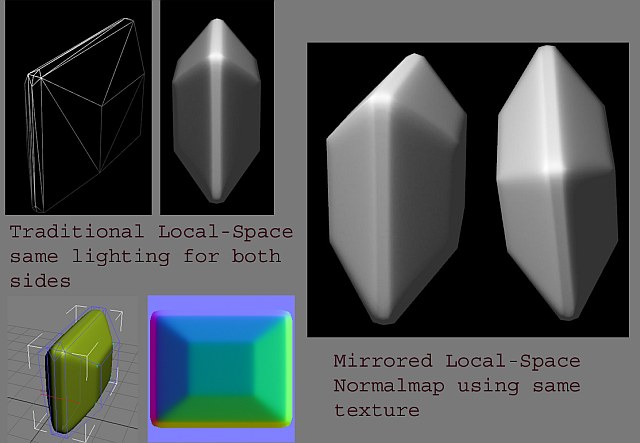

Mirrored local-space normal maps

Just a little proof that it works.

The 3ds Max exporter (as well as the converter from other formats) in the next Luxinia release will support generating the necessary per-vertex attributes.

I also intend to write a short tutorial about it, showing that it works with deforming meshes too, though that will take a while.

The mirroring works on any arbitrary axis, i.e. you don't have to use a major axis. The only restriction I'm currently aware of is that two-sided geometry is not allowed (I need a bit of volume between the two sides to detect the mirror plane).

Mirrored parts must lie outside the 0-1 UV range and must be shifted by whole multiples of 1, so that the fractional UV part (what comes after the decimal point) is still the same as on the reference side. This restriction exists only because I wanted it to work with any input format. If the technique were wired directly into the DCC exporter, where more information about triangle connectivity and so on exists, another method for marking "outsiders" could be used, but I think this works fine. Afterwards, the outsiders are moved back into 0-1.
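A minimal sketch of the outsider convention described above, assuming UVs come as plain (u, v) tuples and that every outsider has a reference vertex inside 0-1 with the same fractional UV. The helper names (`split_uv`, `is_outsider`, `match_and_wrap`) and the tolerance `eps` are hypothetical, not the Luxinia tool's actual code:

```python
import math

def split_uv(u, v):
    """Split a UV into its integer tile offset and its fractional part."""
    tile_u, tile_v = math.floor(u), math.floor(v)
    return (tile_u, tile_v), (u - tile_u, v - tile_v)

def is_outsider(u, v):
    """Treat anything outside the 0-1 tile as a mirrored "outsider".

    Note: a reference UV exactly on the 1.0 border would be misclassified;
    a real tool would need a tolerance or a half-open convention here.
    """
    tile, _ = split_uv(u, v)
    return tile != (0, 0)

def match_and_wrap(uvs, eps=1e-5):
    """For each UV, return (uv moved back into 0-1, reference index or None).

    Outsiders are matched to the reference vertex (inside 0-1) whose UV equals
    the outsider's fractional part; None means "not mirrored".
    """
    refs = [(i, uv) for i, uv in enumerate(uvs) if not is_outsider(*uv)]
    result = []
    for u, v in uvs:
        if not is_outsider(u, v):
            result.append(((u, v), None))
            continue
        _, (fu, fv) = split_uv(u, v)
        match = next((j for j, (ru, rv) in refs
                      if abs(ru - fu) < eps and abs(rv - fv) < eps), None)
        result.append(((fu, fv), match))
    return result
```

For example, `match_and_wrap([(0.25, 0.5), (1.25, 0.5)])` moves the second UV back to `(0.25, 0.5)` and pairs it with vertex 0. The linear search is only for clarity; a real tool would bucket reference UVs.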

Runtime performance cost is slightly higher than for plain local-space maps, but lower than for tangent-space maps.

As for which format compresses better or shows fewer artefacts after compression (tangent vs. local), I don't have proper source art at hand to test it (and I have too little experience with the whole baking process). In theory, however, I think there are more texture formats suited to tangent-space compression.

I'll bump this thread again once the release and more info are out.

edit:

http://developer.nvidia.com/object/real-time-normal-map-dxt-compression.html

Probably the best article about compressing normal maps; it also hints at better possibilities with tangent space.

Replies

The way I understand the compression issues...

1. With a tangent-space map you can drop the blue channel (reconstructing it in the pixel shader), so you only have to store red and green. If you want lossless 8 bits per channel, that's only two channels vs. the three for object space.

2. DXT compression of a tangent-space map without the blue channel yields fewer artifacts, because it takes advantage of the higher precision of the green and alpha channels. The compressors also do better if red and blue are the same uniform value (per 4x4 block, a separate oddity that could be exploited!). So basically, for the best results you want to store only two channels of data, and thus tangent space wins over object space if you're using DXT.
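The two-channel idea in points 1 and 2 can be sketched like this, assuming 8-bit storage and tangent-space normals with non-negative Z. The DXT5nm-style swizzle (X in alpha, Y in green, R and B set to a constant) is a common convention, but the function names and the filler value here are hypothetical:

```python
import math

def encode_xy(nx, ny):
    """Quantize the X and Y of a unit tangent-space normal to 8 bits each.

    Z (blue) is not stored; it is assumed to be >= 0, which holds for
    tangent-space normals pointing out of the surface.
    """
    def to_byte(c):
        return round((c * 0.5 + 0.5) * 255)
    return to_byte(nx), to_byte(ny)

def swizzle_dxt5nm(x_byte, y_byte, filler=0):
    """DXT5nm-style layout: X in alpha, Y in green, R and B a constant.

    The filler value is arbitrary; keeping R and B uniform follows the
    observation that DXT compressors do better in that case.
    """
    return (filler, y_byte, filler, x_byte)  # (R, G, B, A)

def decode_xyz(x_byte, y_byte):
    """Rebuild the normal the way a pixel shader would: z = sqrt(1 - x^2 - y^2)."""
    x = x_byte / 255 * 2 - 1
    y = y_byte / 255 * 2 - 1
    # Quantization can push x^2 + y^2 slightly above 1, so clamp before sqrt.
    z = math.sqrt(max(0.0, 1.0 - x * x - y * y))
    return x, y, z
```

Round-tripping `(0.6, 0.0, 0.8)` through `encode_xy` and `decode_xyz` gives back roughly the same vector; only the 8-bit quantization error remains. An object-space map can't drop Z like this, because its Z can be negative.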

Is the mirroring the only reason they don't use it, or is there other stuff?

In those charts the average signal-to-noise ratio is better for tangent space (though there are a few cases where it's worse than object/local space).
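Assuming the charts report something like peak signal-to-noise ratio (PSNR) over the 8-bit channel values (a common choice, but not confirmed here), a minimal sketch of how such a number is computed. Higher is better, and identical data gives infinity:

```python
import math

def psnr(original, compressed, peak=255):
    """Peak signal-to-noise ratio in dB between two equal-length value sequences."""
    if len(original) != len(compressed) or not original:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((a - b) ** 2 for a, b in zip(original, compressed)) / len(original)
    if mse == 0:
        return math.inf
    return 10 * math.log10(peak * peak / mse)
```

Because it averages the error over all texels, a format can win on average while still losing badly on a few specific maps, which matches the mixed results in the charts.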

What benchmark are you guys using for performance, PC or console?

Earth: Is the tool you're referring to a method to layer or combine tangent-space detail maps on object-space normal maps? Or do you just use it in your 3D package to make sure nothing is upside down, and then bake it across so it's unique rather than tiling in the in-game material?

What game context are you working in, FPS? (Not that it matters much; I'm just curious.)

Of course, when shading is more complex and more resources are spent, the performance difference between the two might be very small.

AFAIK the game EQ works on has supported mirroring for a while. I've now found time to update the tools for Luxinia as well (which isn't used commercially for games at the moment); I think I mentioned the idea of mirroring them in this forum last year, too. The math involved is really simple, so I'm surprised it's used so rarely, but I would blame the better compression support for tangent space.

edit:

I should add that compression doesn't only have the benefit of using less memory; more importantly, it means faster texture sampling, which speaks for tangent space and 3Dc texture compression.

Got some time to work on viewport shaders for Max again, and also worked on a script to generate the needed per-vertex data. Yeah, I know last time I teased a tutorial and didn't deliver, but this time I will provide the shader and tools, integrated into 3ds Max.

Also, I was curious whether you had any info about using a local-space map with deforming meshes.

The algorithm is really straightforward:

- You compare UV chunks (inside and outside the 0-1 box) and compute the mirror normal for corresponding UV vertices. This is done in a preparation step and stored per vertex.

- At runtime, at the vertex level, you pass that mirror normal n (0,0,0 for anything that isn't mirrored), and good old reflection math will then either reflect the texture normal v or not: reflect = v - 2(v·n)n, with n of unit length for mirrored vertices. No special care is needed, since passing 0,0,0 simply leaves the input vector unchanged.
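A minimal sketch of both steps, assuming the corresponding reference and mirrored vertex positions are already known (e.g. from the UV matching). Deriving the normal from the position difference is one plausible approach, not necessarily what the Luxinia tools do; the helper names are hypothetical. A point and its mirror image differ only along the plane normal, which is also why the normal can't be recovered for vertices lying on the plane (the two-sided geometry restriction):

```python
import math

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)

def mirror_normal(p_ref, p_mirrored, eps=1e-6):
    """Preparation step: unit normal of the mirror plane for one vertex pair."""
    d = sub(p_ref, p_mirrored)
    length = math.sqrt(dot(d, d))
    if length < eps:
        # Vertex lies on the mirror plane: no volume between the sides.
        raise ValueError("cannot derive mirror normal from coincident points")
    return scale(d, 1.0 / length)

def reflect(v, n):
    """Runtime step: reflect = v - 2 (v . n) n; n = (0, 0, 0) leaves v unchanged."""
    return sub(v, scale(n, 2.0 * dot(v, n)))
```

The sign of n doesn't matter (n and -n give the same reflection), and any plane orientation works, not just the major axes.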

Assuming world-space lighting, deformation works analogously to tangent space (in TS you deform the TS vectors before passing them to the pixel stage). Here, instead, you pass the deformation matrix (without translation) directly and apply it to the flipped normal.
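A sketch of that order of operations (flip first, then deform), assuming a row-major 3x3 matrix holding the deformation without translation, e.g. the upper-left part of a hypothetical skinning matrix. It assumes rotation and uniform scale only; with non-uniform scale, normals would need the inverse transpose instead:

```python
import math

def mat3_mul_vec(m, v):
    """Multiply a row-major 3x3 matrix (tuple of rows) by a 3-vector."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))

def deformed_normal(texture_normal, mirror_n, deform3x3):
    """Flip the sampled local-space normal, then apply the deformation.

    mirror_n is the per-vertex mirror normal ((0, 0, 0) if not mirrored).
    """
    d = sum(texture_normal[i] * mirror_n[i] for i in range(3))
    flipped = tuple(texture_normal[i] - 2.0 * d * mirror_n[i] for i in range(3))
    out = mat3_mul_vec(deform3x3, flipped)
    length = math.sqrt(sum(c * c for c in out))
    return tuple(c / length for c in out)  # renormalize in case of uniform scale
```

In a real shader the texture normal is sampled per pixel, while the mirror normal and matrix arrive as vertex data; the sketch just evaluates one sample.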

I'll pass this along to our rendering programmer. We're doing normal mapping on the Wii; it's pretty amazing that it can actually do it, since it doesn't use pixel shaders at all.

We're leaning towards a mix of dot3 normal mapping and EMBM, since EMBM can support both object and tangent maps. Of course tangent maps compress better, can tile, are easier to edit, etc. But... EMBM only uses an environment map for lighting, so you either accept static lighting or you have to render the env map dynamically. We'll see...

BTW, I found the Nintendo patent for EMBM, an interesting read.