[Pipeline] Substance Designer cliffs and rocks to in-game assets.

Hi there, this is more of an open topic, and I hope to get some insight from people with experience in this area.

The question is what pipeline you would use to turn a cliff made in Substance Designer into an optimized asset for a real game production environment.

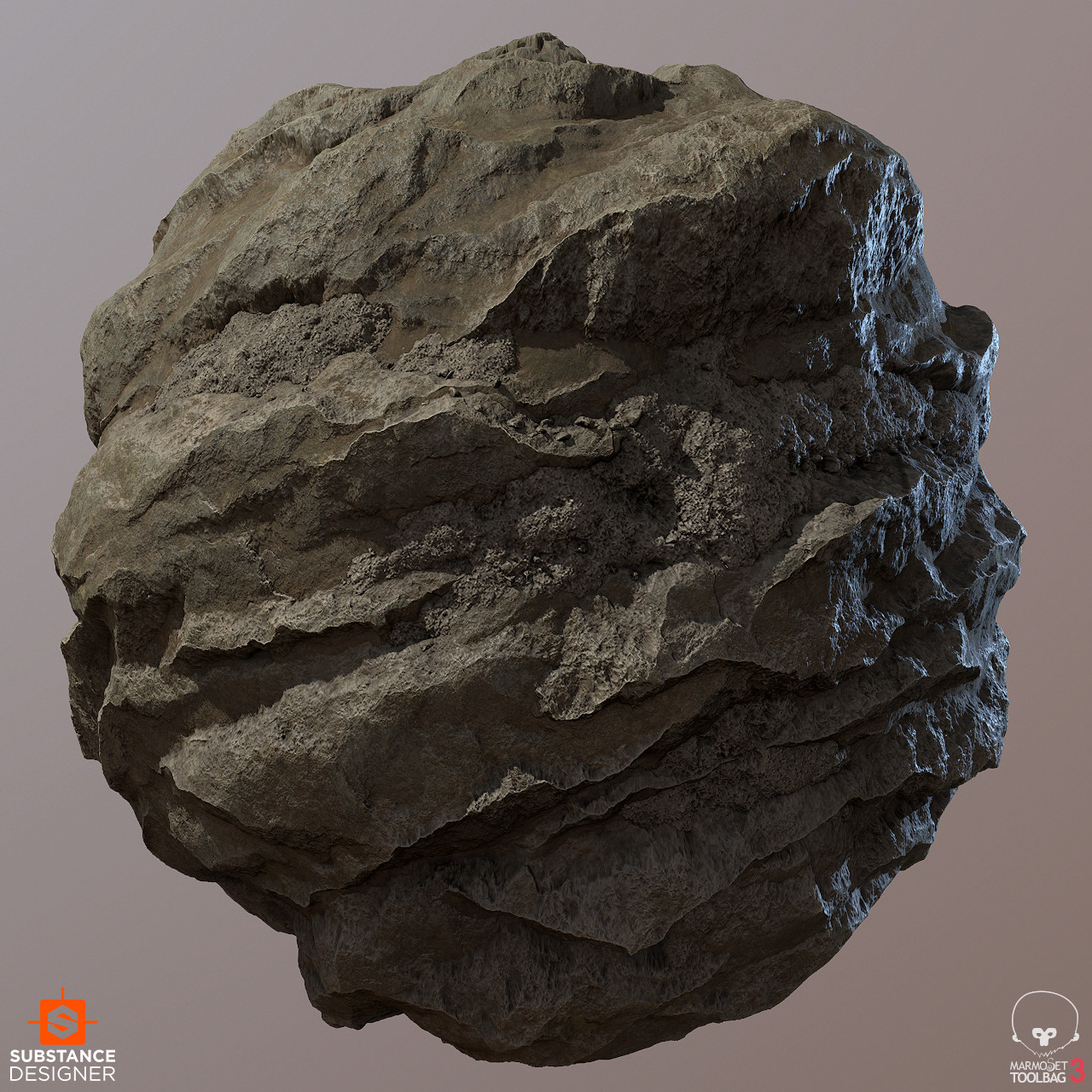

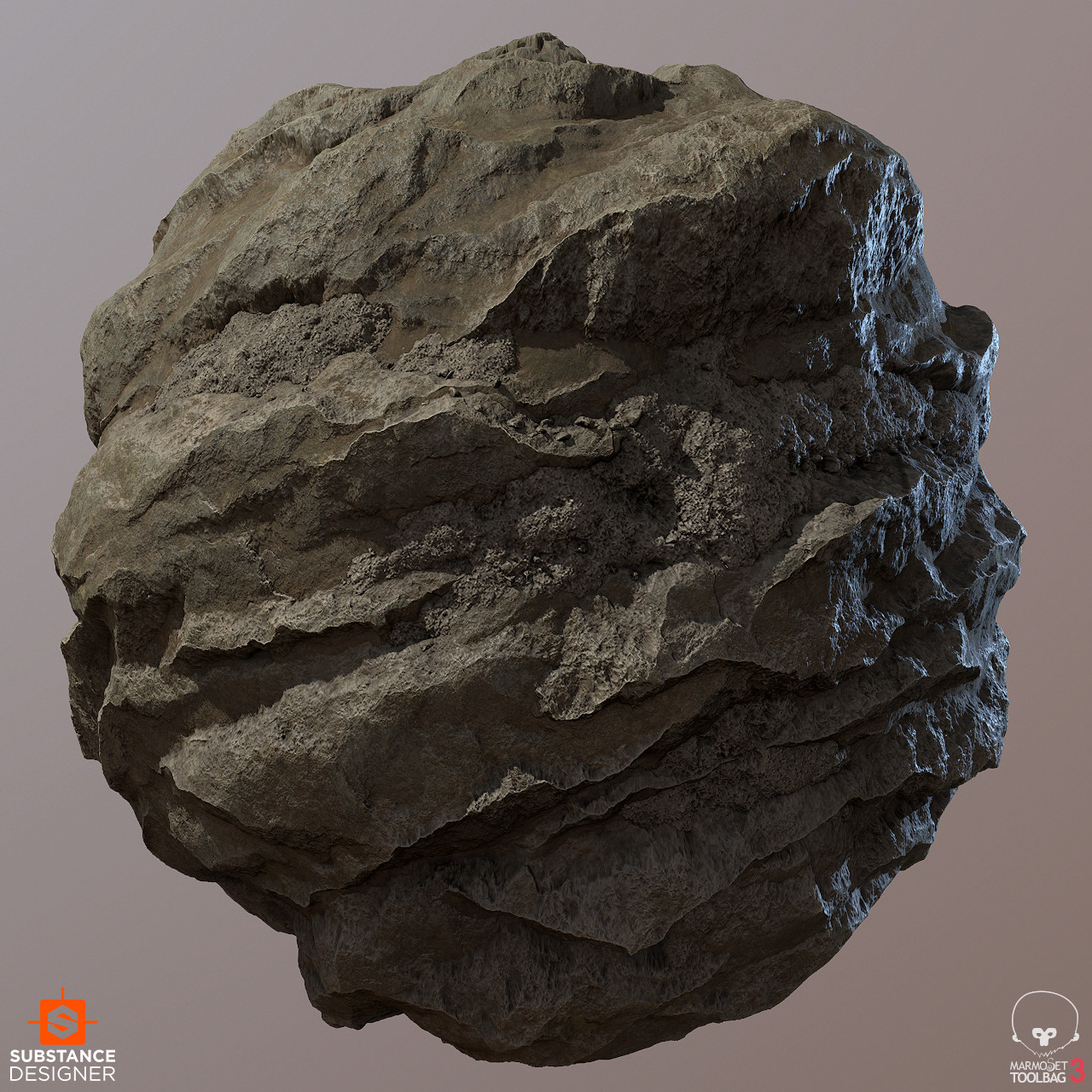

Here is the thing: I keep seeing those great Substance Designer screenshots of procedural cliffs and rock surfaces:

(The last 4 are all from Daniel Thiger - https://www.artstation.com/dete)

What those Substances have in common is that they rely on heavy tessellation to look good, which is where the problem comes in:

While they are great for offline rendering and still pictures, I can't imagine using them in a game engine, applied to simple geometry with a real-time tessellation shader:

* Just like Substance tessellation, any real-time tessellation will be highly unoptimized and will need to be pushed to a really high density to produce a satisfying render.

* Since it has a really strong effect on the silhouette, this tessellation will need to be set to fade out at quite a long distance, making it even worse.
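To put rough numbers on the two points above, here is a minimal sketch. It assumes a linear distance fade and that triangle count grows roughly with the square of the tessellation factor; the function names and all values are hypothetical and not taken from any engine.

```python
def tessellation_factor(distance, fade_start, fade_end, max_factor):
    """Linearly fade the tessellation factor from max_factor down to 1."""
    if distance <= fade_start:
        return float(max_factor)
    if distance >= fade_end:
        return 1.0
    t = (distance - fade_start) / (fade_end - fade_start)
    return max_factor + (1.0 - max_factor) * t


def estimated_triangles(base_triangles, factor):
    """Rough estimate: each base triangle splits into about factor^2 triangles."""
    return base_triangles * factor * factor


# Hypothetical cliff: 200 base triangles, factor 16 up close, fading out at 50 m.
for distance in (0, 30, 50):
    f = tessellation_factor(distance, fade_start=10, fade_end=50, max_factor=16)
    print(distance, f, estimated_triangles(200, f))
```

Pushing `fade_end` further out to protect the silhouette keeps more instances at a high factor at the same time, which is exactly the cost concern here.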

A single one of those cliff assets would generate really dense geometry, and I would not consider it safe to duplicate it many times in a scene.

So my question is: has anyone figured out a smart pipeline for using similar substances in a game engine without relying too heavily on tessellation for the silhouette of the asset (so that tessellation can be faded out at a reasonable distance)? Preferably in a way that avoids the need for a unique, modified texture set for each variation of the cliff asset.

Maybe by applying the heightmap as a vertex offset on the source geometry, but then you probably couldn't use the substance normal map anymore, as it would be added on top of the vertex normals of the displaced geometry. Maybe then project the source mesh normals onto the modified geometry to cancel the vertex normal offset? A process like this could be automated through Houdini.
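As a minimal sketch of that idea (plain Python rather than Houdini, with hypothetical function names and a nearest-texel lookup as simplifying assumptions): offset each vertex along its source normal by the sampled height, and keep the source normals as they were so the tiling normal map is still applied on top of the undisplaced shading.

```python
def sample_height(heightmap, u, v):
    """Nearest-texel lookup of a tiling heightmap stored as a list of rows."""
    rows, cols = len(heightmap), len(heightmap[0])
    x = int(u * cols) % cols  # wrap so the map tiles
    y = int(v * rows) % rows
    return heightmap[y][x]


def displace(positions, normals, uvs, heightmap, scale):
    """Offset each vertex along its source normal by the sampled height."""
    displaced = []
    for (px, py, pz), (nx, ny, nz), (u, v) in zip(positions, normals, uvs):
        h = sample_height(heightmap, u, v) * scale
        displaced.append((px + nx * h, py + ny * h, pz + nz * h))
    # The source normals are returned unchanged on purpose: they are not
    # recomputed from the displaced surface.
    return displaced, list(normals)


heightmap = [[0.0, 1.0], [0.5, 0.25]]
positions = [(0, 0, 0), (1, 0, 0)]
normals = [(0, 0, 1), (0, 0, 1)]
uvs = [(0.0, 0.0), (0.75, 0.0)]
new_positions, kept_normals = displace(positions, normals, uvs, heightmap, 2.0)
```

This only holds as long as the displaced mesh keeps the same vertices as the source. Once the mesh is decimated, the normals have to be looked up from the source again.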

What are your thoughts? Have you experimented with solutions to this problem?

Replies

Seems like it'd be similar to the workflow some hand painted artists use when they paint first and then model second.

Realistically for most games on the current generation you simply can't do it and these fancy portfolio shots bear little to fuck all resemblance to in-game textures.

Joshua Lynch:

DThiger :

Yes, but if you do that, you don't have generic/tiling textures anymore, which is one of the biggest strengths of substances. With that, every cliff asset has its own texture set, and if you have 5 of them, that's 5x the whole texture set in memory. A good pipeline for using them would mean: zero bakes, no new textures, just the tiling substance-generated textures, working across many sets of geometry.
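For a rough sense of scale, here is a back-of-the-envelope sketch. The resolution, map count, and 8 bits per texel are assumptions picked for illustration, not figures from any project in this thread; a full mip chain adds about one third on top of the base level.

```python
def texture_set_bytes(resolution, map_count, bytes_per_texel=1.0, mips=True):
    """Approximate memory for one texture set of square maps."""
    base = resolution * resolution * bytes_per_texel * map_count
    return base * 4 / 3 if mips else base


MIB = 1024 * 1024

# Assumed: 2048x2048, 4 maps (base color, normal, roughness, height),
# block-compressed at 8 bits per texel.
one_tiling_set = texture_set_bytes(2048, 4)
five_unique_sets = 5 * one_tiling_set

print(round(one_tiling_set / MIB, 1), "MiB for one shared tiling set")
print(round(five_unique_sets / MIB, 1), "MiB for five unique baked sets")
```

With one shared tiling set the cost stays flat no matter how many cliff meshes use it, which is the whole appeal.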

Yes, I agree...

I was thinking of a similar - Houdini based - solution. I would love to give it a try.

If we were to make a similar tool that decimates the mesh based on the displacement maps and generates LODs and so on, the normal map would not be usable anymore, as it would be added on top of the decimated mesh and its vertex normals. So my guess is that, after the displacement and decimation are applied, we would need to transfer the vertex normals from the source geometry onto the generated geometry.

I am wondering if this would be sufficient. What do you think?

If it is, a tool that does that and also supports blending 3-4 different textures could be really great.
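The normal transfer step could look something like this in its simplest form: a brute-force nearest-vertex lookup in plain Python. The function name is hypothetical, and a real tool would more likely use a spatial structure and interpolate across the closest source face (in Houdini, this is roughly the job of an attribute transfer).

```python
def transfer_normals(source_positions, source_normals, target_positions):
    """For each target vertex, copy the normal of the closest source vertex."""
    result = []
    for target in target_positions:
        closest = min(
            range(len(source_positions)),
            key=lambda i: sum(
                (a - b) ** 2 for a, b in zip(source_positions[i], target)
            ),
        )
        result.append(source_normals[closest])
    return result


# Source: undisplaced mesh with its smooth normals.
source_positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
source_normals = [(0.0, 0.0, 1.0), (0.0, 1.0, 0.0)]

# Target: displaced and decimated vertices that no longer match the source.
target_positions = [(0.1, 0.0, 0.3), (0.9, 0.2, 0.0)]
transferred = transfer_normals(source_positions, source_normals, target_positions)
```

One caveat with this sketch: with large displacements the closest source vertex may not be the one the target vertex came from, so looking up by UV instead of by position might be more robust.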

- One high-frequency layer of detail, used to generate the normal map (and possibly the real-time displacement map used with tessellation).

- One low-frequency layer, covering the large shapes of the rock/cliff, used only for the offline displacement.
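A minimal sketch of that split, assuming a simple wrap-around box blur as the low-pass filter and a single row of height values to keep it short (the same idea extends to 2D; the function name and values are made up):

```python
def split_frequencies(heights, radius):
    """Split a tiling row of heights into low- and high-frequency parts."""
    n = len(heights)
    window = 2 * radius + 1
    # Low frequency: box blur with wrap-around so the result still tiles.
    low = [
        sum(heights[(i + k) % n] for k in range(-radius, radius + 1)) / window
        for i in range(n)
    ]
    # High frequency: whatever the blur removed.
    high = [h - l for h, l in zip(heights, low)]
    return low, high


heights = [0.0, 1.0, 0.0, 1.0, 4.0, 5.0, 4.0, 5.0]
low, high = split_frequencies(heights, radius=1)
```

`low` would drive the offline displacement of the mesh, and `high` would feed the normal map (and the optional real-time displacement). Adding the two back together gives the original height.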

You don't need to worry about normals etc.

What I mean is to displace geometry using a heightmap to form the big shapes and then apply what is effectively an unrelated tiling material to it.

This is I think also what DThiger is saying he does.

As a rule of thumb, the heavily displaced material ball stuff you see all over ArtStation is utterly useless in production (it also won't get anyone a job unless it does something super clever).

and the rest:

I've used texture derived geometry for all sorts of things - as have many people I've encountered in many places. It's almost always cheaper to render than displacement/POM so it's quite a common thing to need to do.

You can dig into it deeper (deal with funny shaped meshes, automate LOD chain creation etc.) but fundamentally it all boils down to what you've already done in Blender.

The problem I've found with man-made surfaces is that you tend to need a disproportionate amount of geometry to feed your decimation algorithm in order to get a good shape at the end so it can get pretty clunky to work with.

To the best of my knowledge this isn't a problem that's really been tackled academically - I've got a couple of ideas on how to generate a semi efficient mesh in one pass but haven't dug into actually doing it yet.

Layer the information at different frequencies (tile rates/whatever you want to call it) and you break repeating patterns.
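A toy illustration of why this works, using integer periods in texels as a simplifying assumption (real tile rates are UV multipliers, and the patterns here are made up): each layer repeats on its own, but the sum only repeats at the least common multiple of the two periods.

```python
from math import gcd


def combined_period(period_a, period_b):
    """Distance after which two layered tiling patterns line up again."""
    return period_a * period_b // gcd(period_a, period_b)


def layered(x, pattern_a, pattern_b):
    """Sum two tiling patterns sampled at the same position."""
    return pattern_a[x % len(pattern_a)] + pattern_b[x % len(pattern_b)]


pattern_a = [0, 1, 2, 3]              # repeats every 4 texels
pattern_b = [0, 10, 20, 30, 40, 50]   # repeats every 6 texels

period = combined_period(len(pattern_a), len(pattern_b))
row = [layered(x, pattern_a, pattern_b) for x in range(2 * period)]
```

Picking tile rates that don't divide evenly into each other pushes the visible repeat much further out than either layer alone.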

So first you create "base" materials in SD. Then apply them in SP to your model and randomize & blend them as needed.

Finally, export the displaced mesh from SP. For now, we use the UE4 LOD tools to get the resulting mesh down to an acceptable polycount.

The hope is that UE5 + Nanite will just automatically scale the resulting mesh to the required polycount.

IMHO displacement based modelling is the future for creating UE5 ready models efficiently.

So I would be very interested in continuing this discussion!

I would definitely also be open to developing some tools together for such a workflow, like some people mentioned earlier in this thread.

That hunger of Nanite for high res geometry has to be fed somehow.

And manually sculpting 100 rock variations is just too slow and tedious at that detail level.

(Although we still sculpt the rough base shapes of the rocks in ZBrush, but that can be done in a few minutes per rock.)