Help needed with Perceived Pixel Density shader

Hey guys!

I am probably in the wrong forum for this, but I have no idea where else to look for an answer. I'm trying to visualise the perceivable deficiency or flooding (excess) of pixels in a 3D scene. In layman's terms: I want to see where I've used too few or too many pixels within a 3D scene.

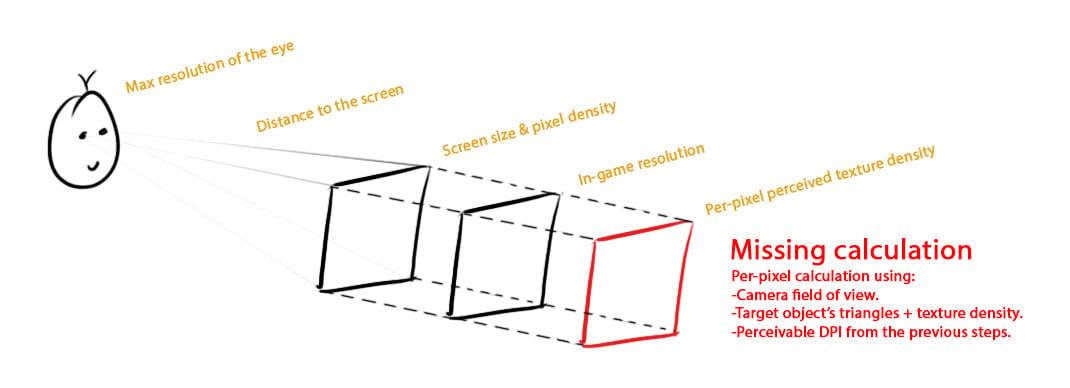

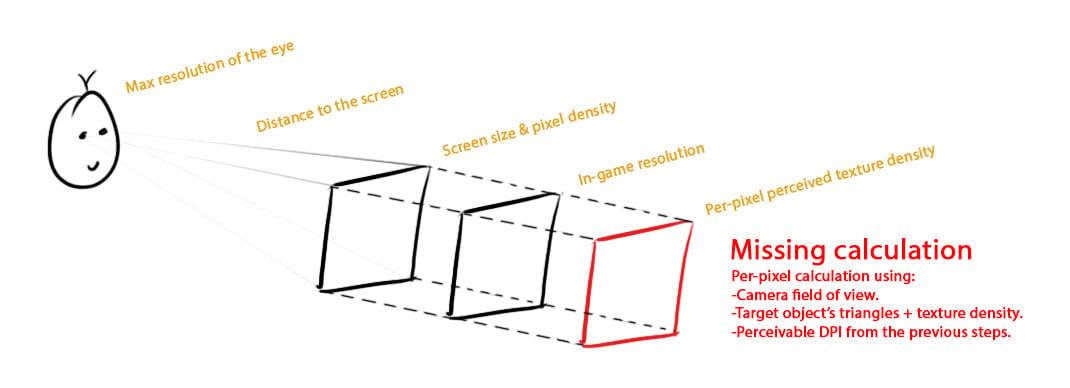

I created the following drawing to illustrate the many factors involved:

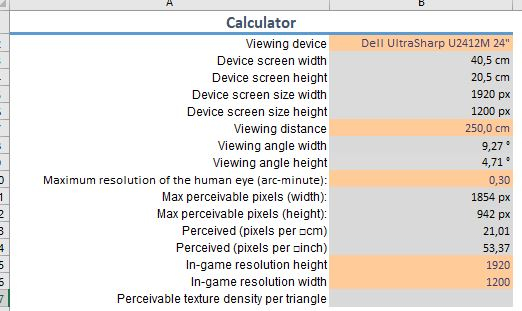

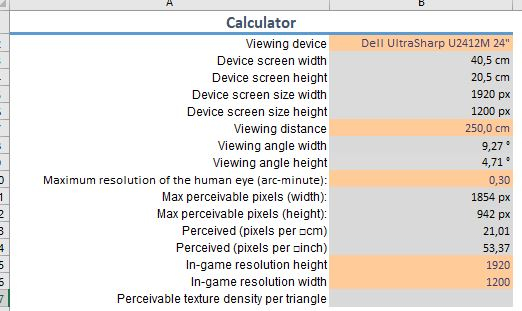

The calculator:

To get past the hurdles illustrated above, I've created a calculator. It takes all the non-game factors into account and calculates every variable I need for the final step. This is where I'm stuck: I need to do a per-pixel calculation and shading, and I have done neither before.
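In a shader, the per-pixel part usually comes down to the screen-space derivatives of the UVs (`ddx`/`ddy` in HLSL, `dFdx`/`dFdy` in GLSL). That is the same information the GPU uses to pick a mip level, and it tells you how many texels land in one screen pixel. Below is a minimal sketch of that maths in Python, assuming the derivatives are passed in rather than computed by the hardware. The helper names, the colour scheme and the tolerance are hypothetical choices for a debug view, not an existing tool.

```python
import math

# Hypothetical helpers for illustration; not part of any engine API.


def texels_per_pixel(du_dx, dv_dx, du_dy, dv_dy, tex_width, tex_height):
    """Approximate how many texels one screen pixel covers.

    Mirrors the usual mip-level selection: convert the UV derivatives
    (UV units per pixel along screen x and y) into texel units and take
    the larger of the two footprints.
    """
    footprint_x = math.hypot(du_dx * tex_width, dv_dx * tex_height)
    footprint_y = math.hypot(du_dy * tex_width, dv_dy * tex_height)
    return max(footprint_x, footprint_y)


def density_color(ratio, tolerance=2.0):
    """Hypothetical debug colouring for the ratio above (RGB in 0..1).

    Red: more texels than pixels (detail is wasted).
    Blue: fewer texels than pixels (texture is magnified and looks blurry).
    Green: roughly one texel per pixel.
    """
    if ratio > tolerance:
        return (1.0, 0.0, 0.0)
    if ratio < 1.0 / tolerance:
        return (0.0, 0.0, 1.0)
    return (0.0, 1.0, 0.0)


# A 1024x1024 texture whose UVs advance 1/512 per pixel on both screen axes:
ratio = texels_per_pixel(1 / 512, 0.0, 0.0, 1 / 512, 1024, 1024)
mip_level = math.log2(ratio)  # roughly the mip level the GPU would sample
print(ratio, mip_level, density_color(ratio))  # 2.0 1.0 (0.0, 1.0, 0.0)
```

In an actual fragment shader the same few lines would run per pixel and write the colour out directly, which gives the "too many / too few pixels" overlay.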

Can anyone point me in the right direction for this last part?

Or has someone already made a tool/shader that does this?

Replies

https://docs.unity3d.com/Manual/OptimizingGraphicsPerformance.html

Are you writing your own renderer?

Edit: your 3D scene doesn't know about pixels; only the rasteriser does. As I understand it, changing the camera FOV does not change the "pixel density" of the screen because, during rendering, after all meshes are projected onto the screen, they are mapped from normalised device coordinates (NDC) to screen-pixel units. This is called the "viewport transform":

- https://msdn.microsoft.com/en-us/library/windows/desktop/ee418867(v=vs.85).aspx#overview

- https://fgiesen.wordpress.com/2011/07/05/a-trip-through-the-graphics-pipeline-2011-part-5/ (search for "viewport transform")

- https://www.scratchapixel.com/lessons/3d-basic-rendering/computing-pixel-coordinates-of-3d-point/mathematics-computing-2d-coordinates-of-3d-points (search for "NDC")
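To make that concrete: the screen always has the same pixel grid, and the viewport transform just maps NDC onto it. What FOV and distance change is how many of those pixels a given object covers. A small sketch in Python, using the Direct3D viewport convention (y pointing down) and a symmetric perspective camera; the helper names are hypothetical.

```python
import math

# Hypothetical helpers for illustration; not part of any engine API.


def ndc_to_pixels(x_ndc, y_ndc, width, height, top_left_x=0.0, top_left_y=0.0):
    """Viewport transform in the Direct3D convention: NDC x, y in [-1, 1]
    to pixel coordinates, with +y pointing down the screen."""
    x = (x_ndc + 1.0) * 0.5 * width + top_left_x
    y = (1.0 - y_ndc) * 0.5 * height + top_left_y
    return x, y


def projected_height_px(object_height, distance, vfov_deg, screen_height_px):
    """On-screen height in pixels of a camera-facing object at `distance`,
    for a symmetric perspective camera with vertical FOV `vfov_deg`."""
    # Height of the visible slice of the view frustum at that distance.
    frustum_height = 2.0 * distance * math.tan(math.radians(vfov_deg) / 2.0)
    return object_height / frustum_height * screen_height_px


print(ndc_to_pixels(0.0, 0.0, 1920, 1080))  # (960.0, 540.0), centre of a 1080p screen
# Same 1 m object, same 5 m distance, two FOVs: the screen keeps 1080 rows,
# but the narrower FOV spreads the object over more of them.
print(projected_height_px(1.0, 5.0, 90.0, 1080))
print(projected_height_px(1.0, 5.0, 45.0, 1080))
```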

Sorry if I use the wrong terminology. I'm still figuring this out so I might be off on things.

@Eric Chadwick Well, I'm not writing for a particular engine, since I haven't started building yet, but it will most likely be Unity since that's the one I'm most familiar with. Unfortunately, the link you shared doesn't solve my problem.

@RN The image you showed me is close to what I'm going for, but it tackles a different problem.

Let me briefly introduce myself so you know how and why I'm approaching the problem this way. I'm an Asset Store publisher, so I create assets for various purposes and devices, and I'm building an asset-production pipeline that targets all possible platforms and all possible usages. To do this, I need to define the highest possible detail so that I can work my way down to the lowest.

Factors

The 2 factors that mainly define detail in-game are mesh density and texture resolution.

Mesh density

Highest point: Where adding more triangles does not alter the perceived internal and external silhouette of the object.
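For a curved outline that "highest point" can actually be computed. The largest gap between a circle of radius r and an inscribed polygon with N sides is the sagitta r(1 − cos(π/N)); once that gap projects to less than about a pixel, more segments no longer change the outline. A sketch in Python, assuming a circular profile seen face-on (e.g. a cylinder seen end-on) and a hypothetical 1-pixel threshold; it only covers the outline, not shading, which can still reveal facets.

```python
import math

# Hypothetical helpers for illustration; the 1 px threshold is an assumption.


def silhouette_error_px(radius, segments, distance, vfov_deg, screen_height_px):
    """Largest gap in pixels between a true circular profile and an inscribed
    regular polygon with `segments` sides, seen face-on at `distance`."""
    sagitta = radius * (1.0 - math.cos(math.pi / segments))  # world units
    frustum_height = 2.0 * distance * math.tan(math.radians(vfov_deg) / 2.0)
    return sagitta / frustum_height * screen_height_px


def min_segments(radius, distance, vfov_deg, screen_height_px, max_error_px=1.0):
    """Smallest segment count whose silhouette error stays within max_error_px.
    Past this count, extra segments should no longer change the outline."""
    segments = 3
    while silhouette_error_px(radius, segments, distance, vfov_deg, screen_height_px) > max_error_px:
        segments += 1
    return segments


# A 5 cm radius pipe end on a 1080-pixel-tall screen with a 60 degree vertical FOV:
for d in (0.3, 1.0, 5.0):
    print(d, min_segments(0.05, d, 60.0, 1080))
```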

Texture resolution

This is split into 2 parts.

Highest point: the maximum resolution the eye can perceive, given the device's physical size, viewing distance and DPI.

Highest point: defined by the object's usage, which in turn determines how large the object is drawn (is it a wieldable weapon, an environment prop in the background, etc.?).
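The two parts can be combined in one small calculation: first how many screen pixels the object covers (from its usage), then scale that down if the display packs more pixels per degree than the eye resolves. A sketch in Python, assuming the commonly quoted ~60 pixels per degree for 20/20 vision, and assuming the UV layout spreads the object's largest on-screen extent across the full texture width. The helper names and device numbers are hypothetical.

```python
import math

# Assumption: ~1 arcminute visual acuity (20/20 vision), i.e. about 60 pixels per degree.
EYE_LIMIT_PPD = 60.0

# Hypothetical helpers for illustration.


def display_pixels_per_degree(ppi, viewing_distance_in):
    """Display pixels that fit in one degree of visual angle at the screen centre."""
    inches_per_degree = 2.0 * viewing_distance_in * math.tan(math.radians(0.5))
    return ppi * inches_per_degree


def useful_pixels(coverage_px, ppi, viewing_distance_in):
    """Pixels an object covers, scaled down when the display is denser
    than the eye can resolve at that viewing distance."""
    ppd = display_pixels_per_degree(ppi, viewing_distance_in)
    return coverage_px * min(1.0, EYE_LIMIT_PPD / ppd)


def texture_size_for(coverage_px, ppi, viewing_distance_in, max_size=8192):
    """Smallest power-of-two texture size covering the useful pixel count, clamped.
    Assumes the object's on-screen extent maps across the full texture width."""
    needed = max(1.0, useful_pixels(coverage_px, ppi, viewing_distance_in))
    return min(1 << math.ceil(math.log2(needed)), max_size)


# Object spanning 1500 px: hypothetical phone (460 ppi at 12 in) vs monitor (96 ppi at 24 in).
print(texture_size_for(1500, 460, 12))  # 1024
print(texture_size_for(1500, 96, 24))   # 2048
```

The phone is denser than the eye can resolve at that distance (about 96 pixels per degree), so the same on-screen size needs a smaller texture there than on the monitor.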

End-Goal

The practical goal would be an in-game interface on the asset where the customer can select how the object is used or its distance to the camera. The interface will then automatically select the right level of detail for both mesh density and texture size.

What I need to do to make this possible

- I need to define the most common in-game usages for objects and the minimum and maximum distances that belong to each. These will set benchmarks for how detailed an object needs to be at its minimum and maximum. In some cases the maximum is effectively unbounded, since a player can hold the object right up to his/her face, e.g. wieldable items in VR.

- Create a tool that validates a 3D scene using the data specified above. This is where I am stuck.
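The validation step could start as a lookup: each usage maps to a distance range, the closest distance gives the most detail the asset will ever need, and an asset's texture is checked against that. A sketch in Python; the usage names, distance ranges, 60 degree FOV, 1080-pixel screen and the "2x means over-detailed" rule are all hypothetical placeholders, not measured benchmarks. It also assumes the texture maps across the object's full on-screen extent.

```python
import math

# Hypothetical usage categories and distance ranges in metres (closest, farthest).
# These numbers are placeholders, not measured benchmarks.
USAGES = {
    "wieldable_vr": (0.1, 1.0),
    "hero_prop": (1.0, 10.0),
    "background": (20.0, 200.0),
}


def coverage_px(object_size_m, distance_m, vfov_deg, screen_height_px):
    """Pixels spanned on screen by a camera-facing object of the given size."""
    frustum_height = 2.0 * distance_m * math.tan(math.radians(vfov_deg) / 2.0)
    return object_size_m / frustum_height * screen_height_px


def detail_range(usage, object_size_m, vfov_deg=60.0, screen_height_px=1080):
    """On-screen size at the closest and farthest distance for a usage.
    The closest distance sets the most detail the asset will ever need."""
    near, far = USAGES[usage]
    return (coverage_px(object_size_m, near, vfov_deg, screen_height_px),
            coverage_px(object_size_m, far, vfov_deg, screen_height_px))


def check_texture(usage, object_size_m, texture_size, vfov_deg=60.0, screen_height_px=1080):
    """Compare a texture size against the closest-distance coverage for a usage."""
    most_px, _ = detail_range(usage, object_size_m, vfov_deg, screen_height_px)
    if texture_size < most_px:
        return "under-detailed up close"
    if texture_size >= 2 * most_px:
        return "over-detailed: the top mip level is never needed"
    return "ok"


print(check_texture("background", 2.0, 4096))
print(check_texture("background", 2.0, 128))
print(check_texture("hero_prop", 0.5, 256))
```

For the VR case the closest coverage easily exceeds the screen height, which matches the "effectively unbounded" point above; in practice you would cap it at the eye or display limit.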

**I have an idea of what this should look like. When I have time in the coming days, I will mock up an in-game screenshot in Photoshop.**

- https://developer.nvidia.com/content/dynamic-hardware-tessellation-basics (search for "Choosing the tessellation factor", it's close to what you want)

- http://www.hugi.scene.org/online/coding/hugi 13 - colod.htm
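The tessellation idea boils down to choosing detail from an edge's size on screen. A simplified sketch of that heuristic in Python follows. It approximates the projected length using a single camera distance for the whole edge, and the 8-pixel target edge length is an assumption, so it is similar in spirit to the linked article rather than its exact formula. The 64 cap is the Direct3D 11 tessellator limit. For baked LODs, the same "pixels per triangle edge" target can be used offline instead of at runtime.

```python
import math

MAX_TESS_FACTOR = 64.0  # upper limit of the Direct3D 11 tessellator

# Hypothetical helper for illustration; target_edge_px=8 is an assumed default.


def tess_factor(p0, p1, distance, vfov_deg, screen_height_px, target_edge_px=8.0):
    """Tessellation factor for one patch edge so the resulting sub-edges are
    roughly target_edge_px long on screen.

    p0, p1: edge endpoints in world units.
    distance: camera distance to the edge (one value for the whole edge).
    """
    edge_len = math.dist(p0, p1)
    frustum_height = 2.0 * distance * math.tan(math.radians(vfov_deg) / 2.0)
    edge_px = edge_len / frustum_height * screen_height_px
    return max(1.0, min(MAX_TESS_FACTOR, edge_px / target_edge_px))


for d in (1.0, 10.0, 100.0):
    print(d, tess_factor((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), d, 60.0, 1080))
```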

First of all, thanks for thinking along. I just tested my theory in 3ds Max using your sources, and I found out why what I was trying to do can't be found on the internet: not because it can't be done, but because nobody needs it, and neither do I. Apparently I've been approaching the problem the wrong way.

- To determine mesh density, I only need to know how much of the screen the object will ever fill. So now it's just a matter of determining the common usages and adding cameras at the distances that belong to each usage. I can then use those cameras to render a black-and-white image showing the silhouette. This can be built with a simple rig in 3ds Max.
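Once such a rig renders black-and-white silhouettes, the check itself is a pixel comparison: render the high-poly and a lower-poly version from the same camera and count where they disagree. A sketch in Python; loading the renders from disk is left out, masks are assumed to be nested lists of 0/1 (rendered without anti-aliasing, or thresholded), and the zero-pixel tolerance and helper names are hypothetical.

```python
# Hypothetical helpers for illustration; masks are lists of rows of 0/1 values.


def silhouette_diff(mask_a, mask_b):
    """Count pixels where two same-sized black-and-white renders disagree.
    1 (or any truthy value) = object, 0 = background."""
    if len(mask_a) != len(mask_b) or any(len(a) != len(b) for a, b in zip(mask_a, mask_b)):
        raise ValueError("masks must have the same dimensions")
    return sum(
        1
        for row_a, row_b in zip(mask_a, mask_b)
        for a, b in zip(row_a, row_b)
        if bool(a) != bool(b)
    )


def lower_lod_is_enough(high_mask, low_mask, max_diff_px=0):
    """True if the lower-poly render's silhouette matches the high-poly render
    (from the same camera) within max_diff_px differing pixels."""
    return silhouette_diff(high_mask, low_mask) <= max_diff_px


high = [[0, 1, 1, 0],
        [1, 1, 1, 1],
        [0, 1, 1, 0]]
low = [[0, 1, 1, 0],
       [1, 1, 1, 1],
       [1, 1, 1, 0]]
print(silhouette_diff(high, low))      # 1
print(lower_lod_is_enough(high, low))  # False
```

Allowing a few differing pixels (max_diff_px > 0) may be more practical if the renders have edge noise.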

- For texture density I don't have a solution yet, but it's easy to adjust since all the textures are created in Substance Designer. So even if that problem isn't solved, it's only a minor one.
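One common way to pin texture density down is texel density (texels per world metre), which makes the target independent of object size. A sketch in Python that takes one texel per screen pixel at a usage's closest distance as the target and derives a power-of-two size from it; the 1 m distance, the FOV, screen height and the UV-coverage parameter are hypothetical. The resulting size is the resolution you would then export from Substance Designer.

```python
import math

# Hypothetical helpers for illustration.


def screen_pixels_per_metre(distance_m, vfov_deg, screen_height_px):
    """Screen pixels per world metre on a camera-facing surface at distance_m."""
    return screen_height_px / (2.0 * distance_m * math.tan(math.radians(vfov_deg) / 2.0))


def texture_size_for_density(texels_per_metre, surface_extent_m, uv_extent=1.0, max_size=8192):
    """Smallest power-of-two size giving at least the requested texel density.

    surface_extent_m: world size of the surface along one texture axis.
    uv_extent: fraction (0..1] of that texture axis its UVs occupy (assumed layout).
    """
    needed = max(1.0, texels_per_metre * surface_extent_m / uv_extent)
    return min(1 << math.ceil(math.log2(needed)), max_size)


# Target: one texel per screen pixel at a hypothetical closest distance of 1 m.
target = screen_pixels_per_metre(1.0, 60.0, 1080)
print(round(target), texture_size_for_density(target, 0.5))  # 935 512
```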

I'm not sure if this will be of interest to anyone, but I might share the 3ds Max scene once it's thoroughly tested. Again, thanks for your response!