The Procedural Approach. Halo Wars 2 and Substance Designer

Representatives from the Creative Assembly reached out to us and asked if they could share their experiences with the Substance Designer tool suite with the community. The following article is their breakdown of how and why they use the technology in the art pipelines at their studio.

My name is David Foss. I'm from Norway, and I’ve had the pleasure of working with the fantastic crew at Creative Assembly on the console team in Horsham, UK, for over five years now. It's been a true adventure, shipping Alien: Isolation two years ago, and now releasing Halo Wars 2.

Learning the dynamics and intricacies of large-scale productions like these has been very interesting, and I'm pretty sure no studio has really perfected it yet, and probably never will. Time and resources are lost all the time through iteration, non-synchronised schedules, reversed decisions and scope changes. And that's fine. It's the nature of the creative process, and launching an AAA game is almost like launching a space shuttle. We aren't setting up a factory that produces a million identical consumer products, so there are limitations to how automated the process can be. In fact, with creativity being key to what we do, we wouldn’t want to restrict that through too much process.

That said, every time you find a way to intelligently automate something in a production- and pipeline-friendly way, it's a big win. And we found a big win with Substance Designer.

It was during the start of pre-production for Halo Wars 2 that I started learning Substance Painter and Designer. We had just shipped Alien: Isolation and I had some time for exploring and learning new tools.

I was immediately impressed with Painter, but it wasn't until I started getting comfortable with Designer that the bell rang loudly: this could fit into a production pipeline really well! I’ve played around with Nuke, Fusion and Maya shaders a lot, and I love the node-based workflow, so I immediately got hooked on Designer.

Traditionally, texturing is a time-consuming task done in Photoshop, with many layers and individual workflows for the different texture maps like diffuse, normal, specular etc. There are now other packages that allow for painting directly on the 3D object, of course, but what interested me in Substance Designer was the fully procedural way of working, allowing you to create a material once and apply it to any number of assets.

I was quickly able to produce PBR texture sets for tile-able terrain textures and mesh-driven texture sets for rocks and cliffs, and soon my colleagues and lead were starting to take an interest. It was particularly easy to get the rest of my team on board, because I could make on-the-fly changes and variations to multiple assets in a few seconds.

Adopting this workflow of creating textures in Substance Designer was surprisingly easy in the environment art team, and we soon had quite a few Substance Designer masters making a lot of interesting, complex materials. It certainly helped that the indie licence was priced in such a way that people could learn not only at work but also outside of the studio, trying new things in their own time.

Having made these materials, and wanting to apply them to many assets, we soon found that most of the time was spent on the logistics:

Baking mesh maps

Creating a new substance graph

Instancing the material into it

Connecting the mesh maps to the inputs

Connecting output nodes for the rendered maps to the instanced material

Rendering the textures to the correct place in the folder structure

Working with a colleague, we started brainstorming on the possibilities of automating this by using the existing Substance batch tools. The data needed to do this was all there, it just needed to be scripted somehow.

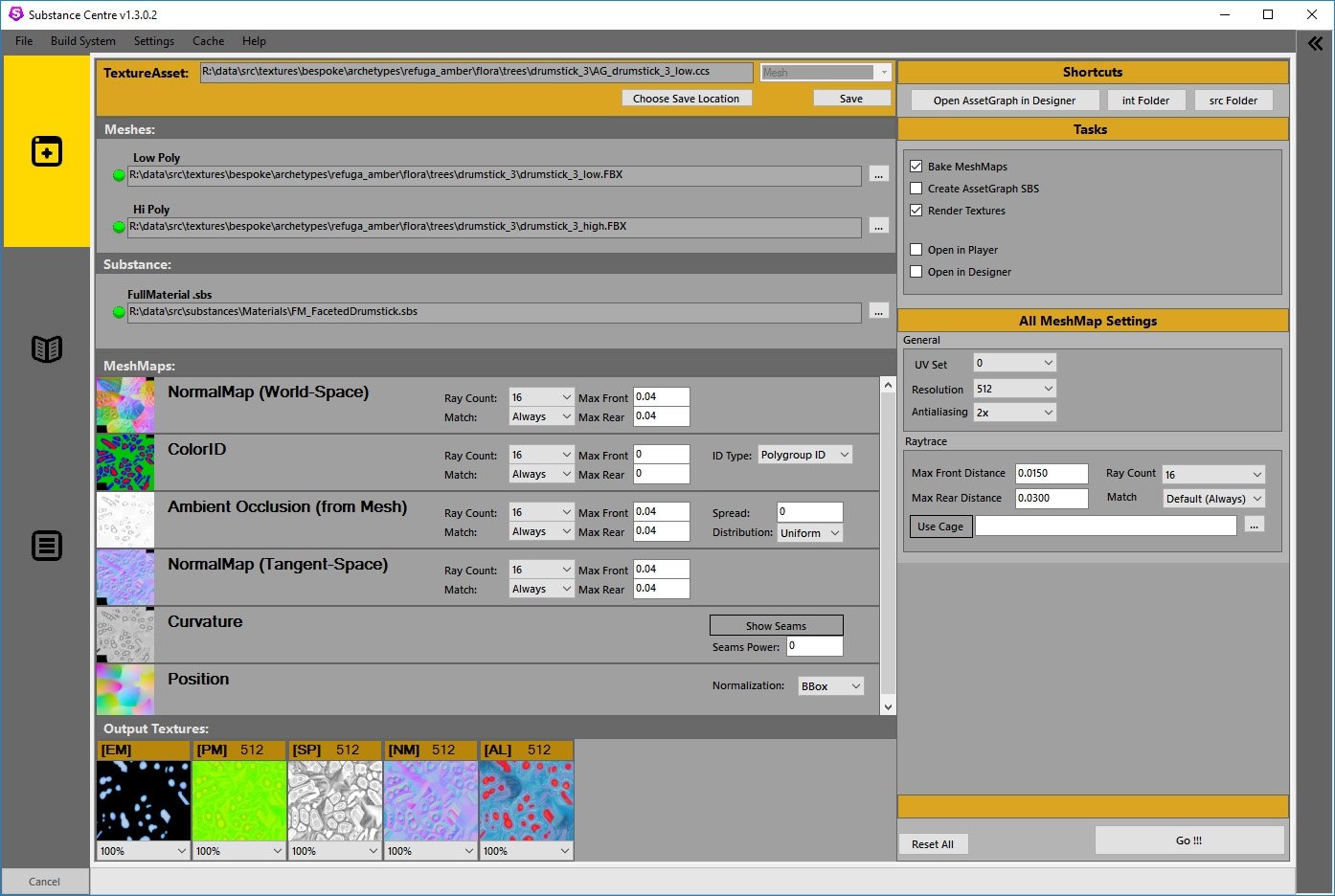

This is how Creative Assembly’s in-house tool, Substance Centre, came to be. We did joke about naming it Substance Abuse, but fortunately, common sense prevailed! The UI has gone through a few iterations, and it will probably get a few more before everyone is satisfied.

We found quite early on that we needed to decide on a system and terminology.

To create a texture asset, all you need to do is drag and drop files for low poly, high poly and your full material into the Substance Centre slots, and it will figure out the rest.

It reads what types of maps it needs to bake and what outputs there are to render, and it hooks it all up in the "asset graph".
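As a rough illustration of that hook-up step, here is a minimal Python sketch. It is not Substance Centre's code and does not call any Substance API; the `Material` and `AssetGraphPlan` classes, the naming scheme, and the map names are all assumptions made up for the example. It only shows the idea of deriving the bake list, the connections, and the render list from what a material declares.

```python
from dataclasses import dataclass, field


@dataclass
class Material:
    """Hypothetical description of a material graph's interface."""
    name: str
    mesh_inputs: list[str]  # mesh maps the material expects as inputs
    outputs: list[str]      # texture maps the material produces


@dataclass
class AssetGraphPlan:
    """Hypothetical plan for one texture asset."""
    bakes: list[str] = field(default_factory=list)
    connections: list[tuple[str, str]] = field(default_factory=list)
    renders: list[str] = field(default_factory=list)


def plan_asset_graph(asset: str, material: Material) -> AssetGraphPlan:
    """Derive what to bake, connect, and render for one asset."""
    plan = AssetGraphPlan()
    for mesh_map in material.mesh_inputs:
        baked = f"{asset}_{mesh_map}"  # assumed naming scheme
        plan.bakes.append(baked)
        # Each baked map feeds the material input of the same name.
        plan.connections.append((baked, f"{material.name}.{mesh_map}"))
    for output in material.outputs:
        plan.renders.append(f"{asset}_{output}")
    return plan


rock = Material("rock", ["normal", "ambient_occlusion"], ["diffuse", "normal"])
plan = plan_asset_graph("cliff_01", rock)
```

The point of the sketch is that nothing asset-specific has to be typed in by hand: the material's declared inputs and outputs are enough to generate the whole plan.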

It allows you to change the resolution and downscale each specific rendered texture for optimisation.

It stores all the information used to create each texture asset in XML files, which in turn are used to maintain a database of materials and texture assets. This also allows us to keep track of dependencies.
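To give a feel for what such a record might hold, here is a small Python sketch using the standard library's `xml.etree.ElementTree`. The element names, attribute names, and file names are purely hypothetical; the actual Substance Centre schema is not shown in this article.

```python
import xml.etree.ElementTree as ET


def build_asset_record(name, low_poly, high_poly, material, outputs):
    """Build a hypothetical XML record for one texture asset.

    `outputs` maps each rendered map name to its target resolution.
    """
    root = ET.Element("texture_asset", name=name)
    ET.SubElement(root, "low_poly").text = low_poly
    ET.SubElement(root, "high_poly").text = high_poly
    ET.SubElement(root, "material").text = material
    outs = ET.SubElement(root, "outputs")
    for map_name, resolution in outputs.items():
        ET.SubElement(outs, "output", name=map_name, resolution=str(resolution))
    return root


def material_of(record_xml):
    """Read back which material a stored record depends on."""
    return ET.fromstring(record_xml).findtext("material")


record = build_asset_record(
    "cliff_01", "cliff_01_low.fbx", "cliff_01_high.fbx", "rock.sbs",
    {"diffuse": 2048, "normal": 2048, "gloss": 1024},
)
record_xml = ET.tostring(record, encoding="unicode")
```

Because every record names its material, scanning the records is enough to answer "which assets use this material?", which is what makes the dependency tracking possible.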

Dependency tracking allows you to find all texture assets using a given material, or even all materials using a substance tool you have instanced. It will populate a batch list for you, ready to re-render on your command.
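The lookup described above can be sketched as a reverse dependency search. This is only an assumed approach in plain Python, not the tool's real implementation; the `uses` mapping, the function names, and the graph names are invented for illustration.

```python
from collections import defaultdict, deque


def build_reverse_index(uses):
    """Invert 'X instances Y' into 'Y is used by X'."""
    used_by = defaultdict(set)
    for item, dependencies in uses.items():
        for dependency in dependencies:
            used_by[dependency].add(item)
    return used_by


def batch_list(changed, uses, texture_assets):
    """Return every texture asset that depends, directly or not, on `changed`."""
    used_by = build_reverse_index(uses)
    seen = {changed}
    queue = deque([changed])
    while queue:  # breadth-first walk up the dependency chain
        current = queue.popleft()
        for dependant in used_by.get(current, ()):
            if dependant not in seen:
                seen.add(dependant)
                queue.append(dependant)
    return sorted(seen & texture_assets)


# Hypothetical data: a tool instanced by a material, which other graphs instance.
uses = {
    "rock_material": ["noise_tool"],
    "sand_dunes_material": ["rock_material"],
    "cliff_01": ["rock_material"],
    "dunes_tile": ["sand_dunes_material"],
    "metal_crate": ["metal_material"],
}
texture_assets = {"cliff_01", "dunes_tile", "metal_crate"}
to_render = batch_list("rock_material", uses, texture_assets)
```

In this made-up data set, changing `rock_material` queues `cliff_01` and `dunes_tile` but leaves `metal_crate` alone, and changing `noise_tool` would queue the same two assets because the walk follows indirect dependencies too.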

Basically, it has become the one-stop place for creating, editing and rendering textures, with full Perforce integration.

Imagine a scenario where you have a material graph that is used for 10 different rock and cliff texture assets, but it's also instanced into a tile-able material graph that creates sand dunes with rock debris in it. It's even used inside a material graph that produces rock decals with alpha for use on top of the terrain. Let us say that this all totals 20 full sets of textures: diffuse, normal, specular, gloss, alpha, etc.

Then… your lead or art director comes along and requests changes to the colour of the rocks in the game.

Imagine what this would mean if you created all of these textures in Photoshop.

You would have to go through all of those Photoshop PSDs individually, make the change in each one, and save out all of the files to the correct paths.

Now imagine a world where all you need to do is edit the original material graph, and hit two buttons inside Substance Centre.

Substance Centre finds all the texture assets that depend on your graph, checks them out in Perforce for you, queues the re-rendering of them all, and puts all the files in the correct folders for you.

You would be able to show the new results in-game in five minutes, and four of those minutes would be spent on doing something else.

That's how you make art directors and producers happy.

I firmly believe the procedural approach is the way to deliver the volume and quality of content the consumer expects while keeping production costs down.

Replies

Thanks for sharing this David!

edit: to clarify, the stuff outside of authoring designer graphs is what I was responsible for, and the art team went way further than I thought they would with implementing awesome procedural tools in the graphs

edit2: I felt really bad all day not remembering who was the 3rd artist who also gave loads of important and useful feedback to build the pipeline and tools. But it was @PogoP

welp back to my real life where I don't have this neat tool. :v

Ben

I have been trying to make Substance Designer my main texture tool for years. It definitely has a lot of advantages, but imo it is still too alien and clunky, even for a regular node-based editor.

Over the years I transferred a lot of my Substance Designer experience and ideas to a Photoshop workflow and found, surprisingly, that I could use pretty much the same approach there too, plus a lot of traditional and much more convenient image editing tools. Even the curve editor and gradient map recoloring have so many nice and convenient options compared to SD, and are so well designed out of the box.

Imo the absence of node bypass and of saved curve/gradient map/etc. presets in SD makes it much harder to tweak the subtle nuances that are so important for true realism. What is a few clicks in Photoshop is a Gordian knot of connection lines in SD.

I am dreaming about a kind of hybrid between PS, vector tools and SD: half nodes, half layers/objects based.

Which coding language did you use to write this tool? PyQt?