Normal map compression and channel packing

This is a cross-post from this thread: http://polycount.com/discussion/184004/normal-map-compression

I decided to post this here as well as in the Unreal Engine sub-forum, since I figured the problem isn't necessarily exclusive to Unreal Engine (and I'm hoping that more people will see this and help out if I spam a little). I'm too stubborn to not resolve this.

I'll give the short version of the other thread here; I'm trying to pack my textures like this:

Base Color in RGB, height in Alpha.

Normal map in RG, AO in B and roughness in Alpha.

I'm doing this to save texture memory, among other things.

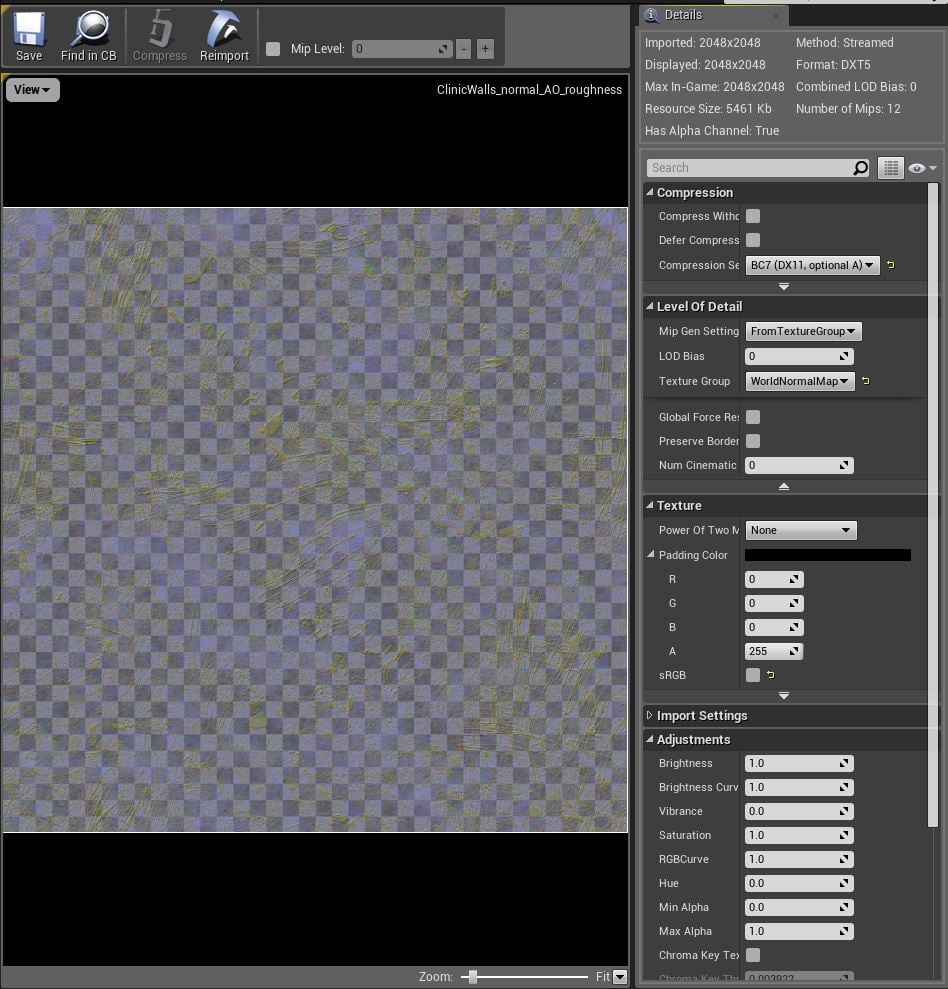

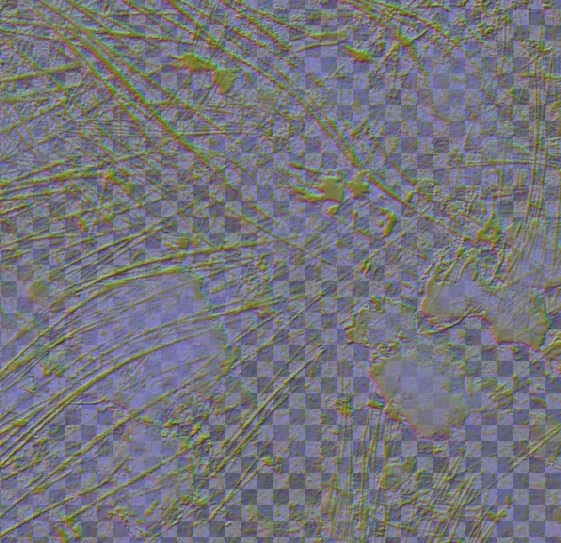

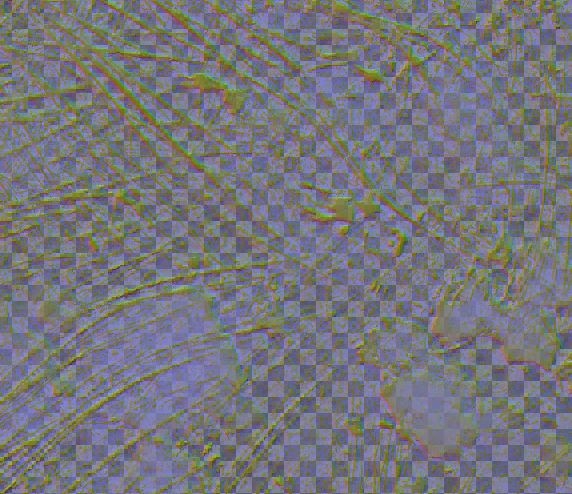

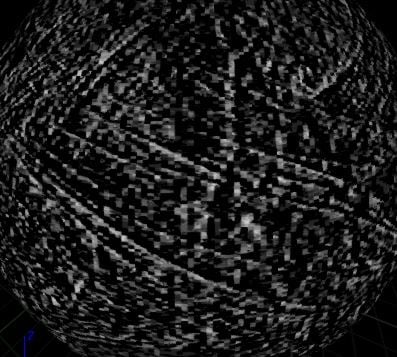

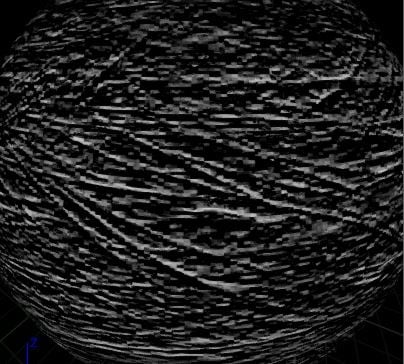

I've seen this done correctly by others in UE4, but I can't seem to get it to work without a major visual downgrade. When I compress my normal map in Unreal Engine 4 using the BC7 compression setting (or the DXT1/5, BC1/3 setting, for that matter), the R and G channels (where the normal map lives) get shafted extremely hard. The first sample here is the uncompressed texture, viewed in UE4, and the second one is the compressed version.

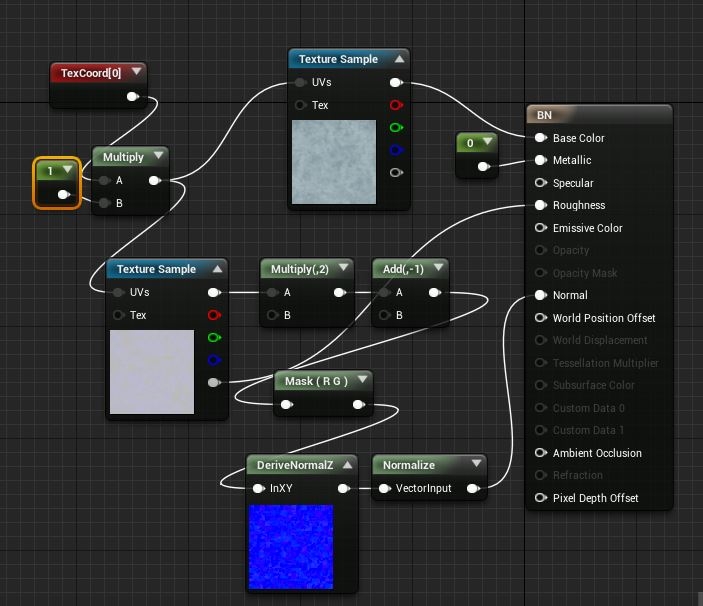

Material:

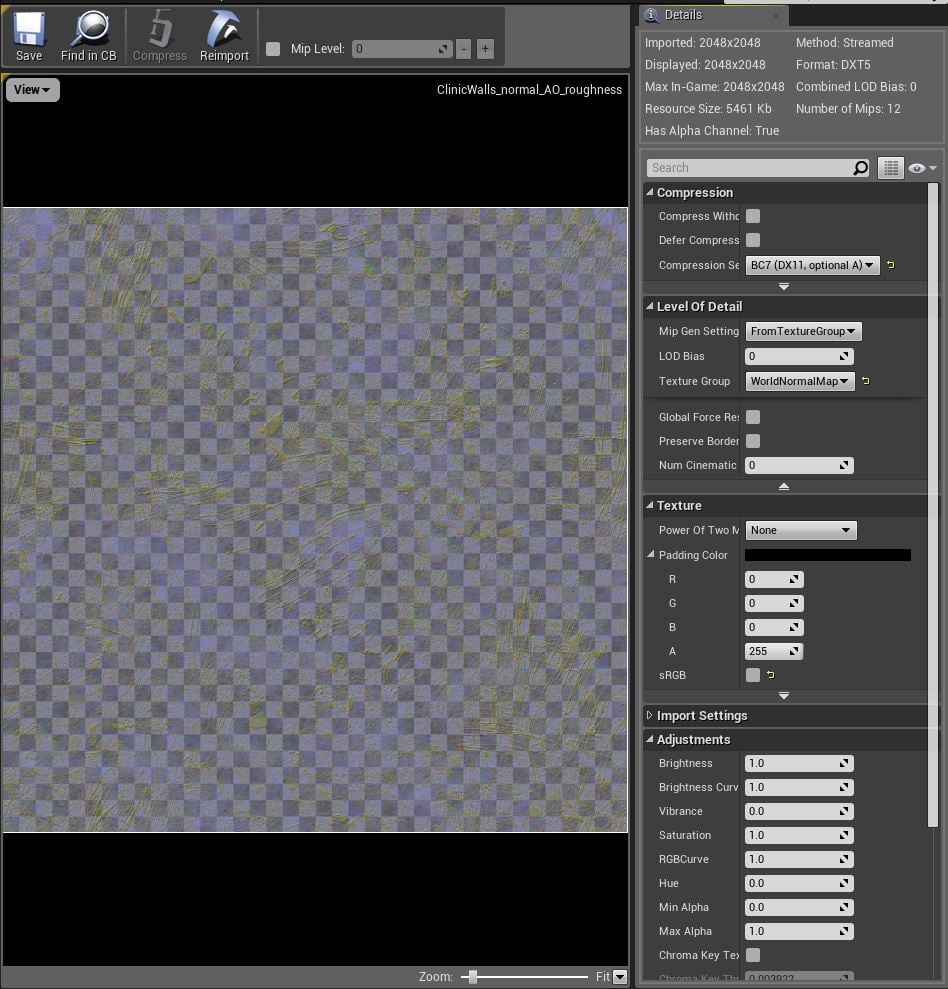

Texture:

For a more extensive explanation of the issue, please visit my other thread in the unreal engine sub-forum! http://polycount.com/discussion/184004/normal-map-compression

EDIT: I'm including the full explanation from the other thread here as well, to avoid misinformation or lack of information:

Intro:

I've already spent 2 days trying to understand this and lost sleep over it. I have read through the relevant (and more) polycount wiki articles, unreal engine documentation, plenty of forum threads both here and on the unreal forum and I've also read up on texture compression in general. But I feel like there's something I'm missing that I just can't figure out by myself. So I'm turning to you guys for help.

Goal:

I'm trying to pack my textures in a smart way, so that I'll only need to load 2 textures for each of my materials:

-Base Color in RGB and height in A.

-Normal map in RG, AO in B and roughness in A.

Background:

I'm sure many of you have seen this done correctly before. I have too (here is an example of someone who has done it correctly: http://polycount.com/discussion/comment/2521188/#Comment_2521188). I just don't know what I'm doing wrong.

I packed the channels in Substance Designer (I've tried exporting with both the default format and DXT5, if that's even relevant) and double-checked in Photoshop, with both png and tga files, that the channel packing is correct. With that said, I don't think there's anything wrong with the packing.

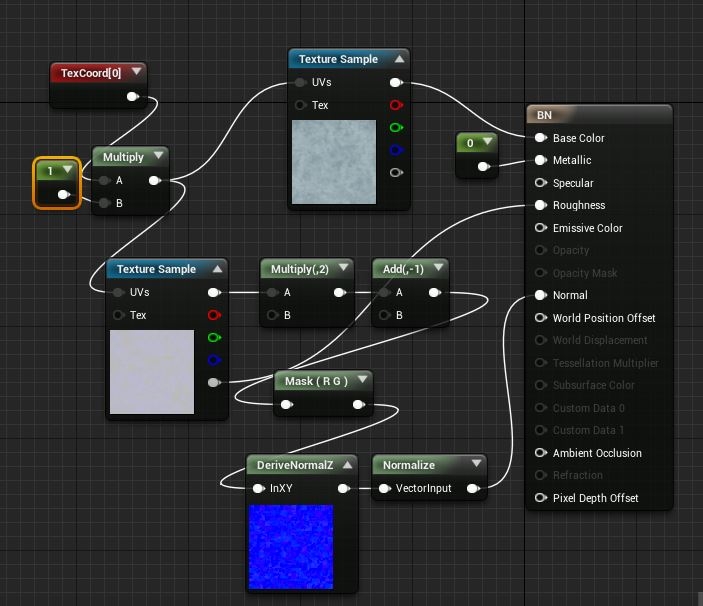

In Unreal, I use this shader and these compression settings:

The normal/AO/roughness texture is set as "Sample Type: Linear Color" (as I get error messages otherwise).

As far as I know, this should be right and should do the trick. Side-note: I don't find there to be any difference between the BC7 (which I've been told to use) and the default setting (DXT1/5,BC1/3). The HDRCompression setting (BC6) renders a better result but also shoots the resource size through the roof.

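The problem is that it looks shit. I was prepared for a visual downgrade and less accuracy within the normal map (since the blue channel needs to be calculated from the RG channels), but the results I'm getting are far below what I was expecting. However, I quickly noticed that this was only the case after I saved/compressed the texture within Unreal (the uncompressed file, right after import, renders great results, almost indistinguishable from how it looks without packed textures):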

The first image shows what the texture should look like (screenshot taken with non-packed textures) and the second shows what the texture actually looks like (screenshot taken with packed textures and the shader given above).

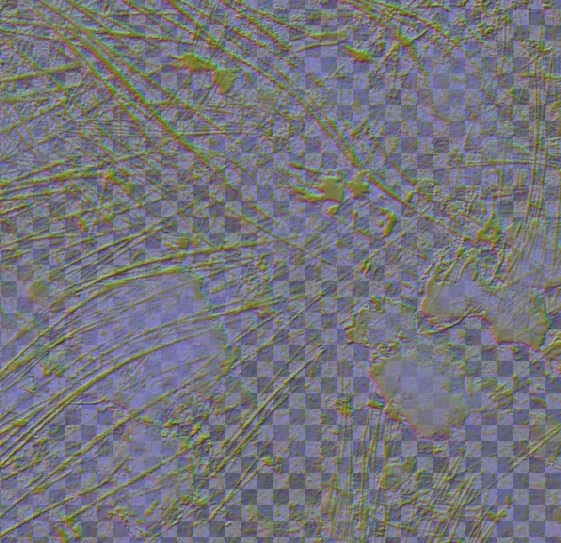

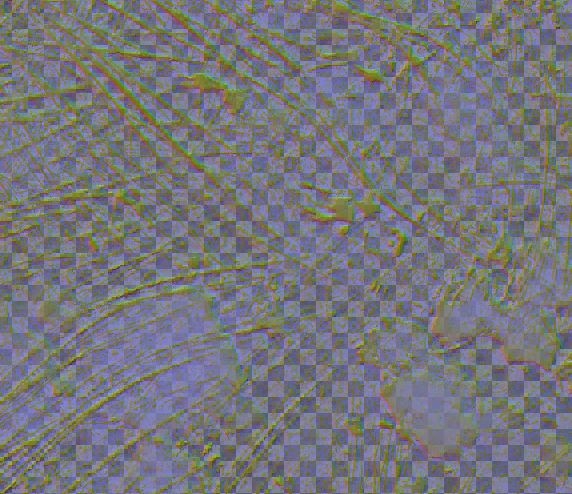

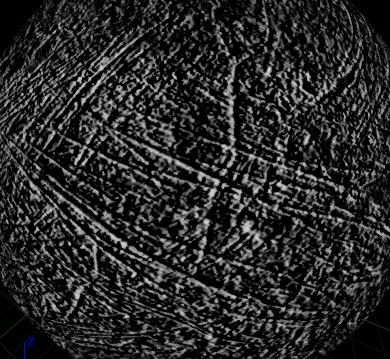

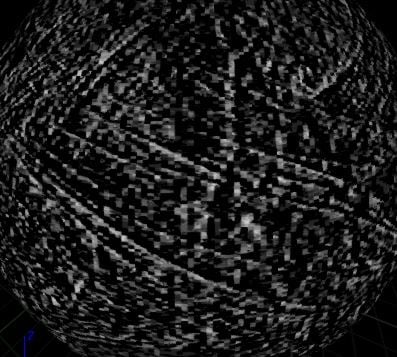

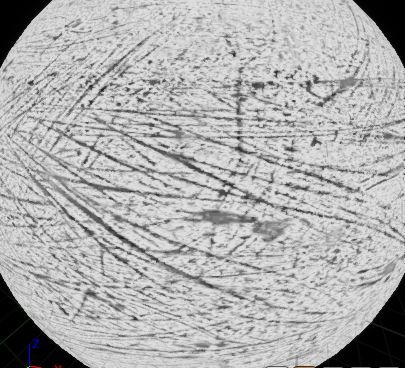

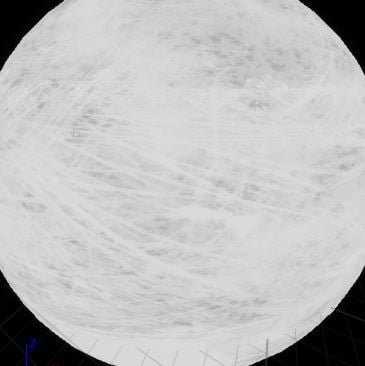

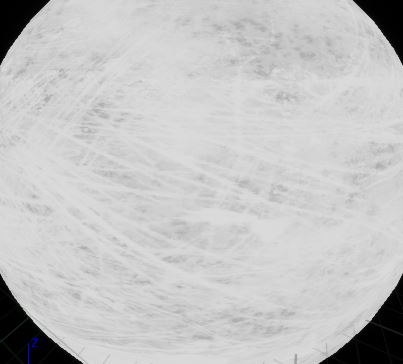

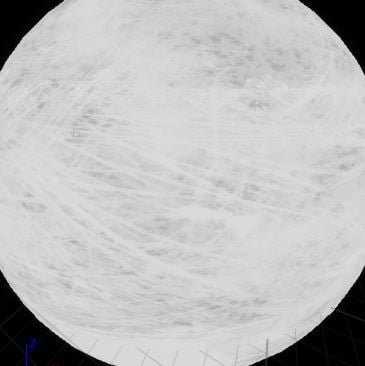

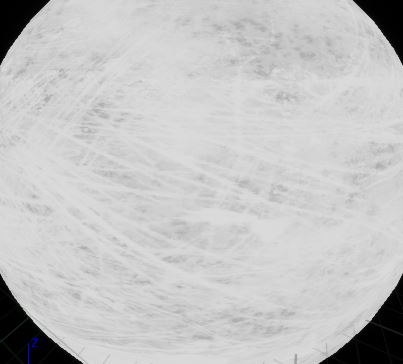

Here are samples of the packed normal map, uncompressed (the first one) and compressed (the second one, using BC7 compression):

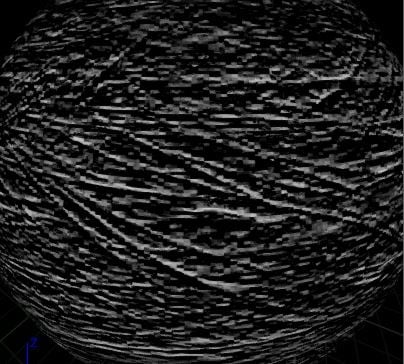

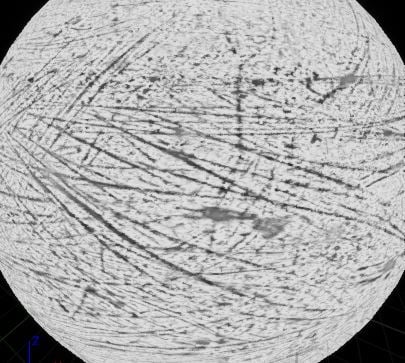

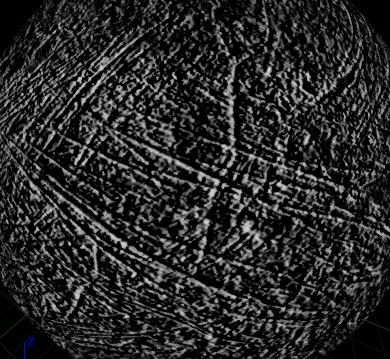

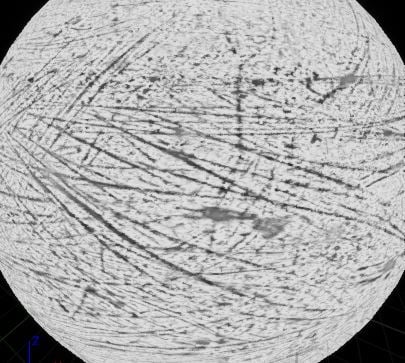

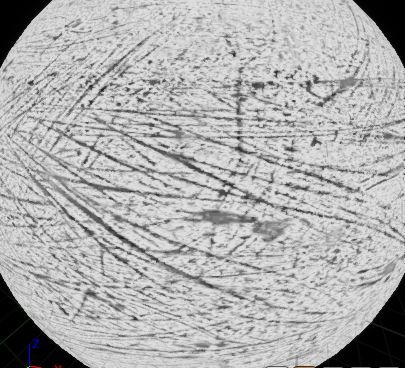

I further tracked this down to the R and G channels being the issue. Here are some samples of each of the four channels. The first one is "how it should look" (what it looks like in the non-packed textures) and the second one is what it actually looks like (in the packed textures). Please note: the image showing the packed channel (the second one) is taken at the point in the shader after I multiply the channel by 2 and add -1.

R:

G:

B:

A:

As we can see, the issue appears in the R and G channels (only in the normal/AO/roughness map, not in the base color + height map, which uses the same compression with the exception that it's checked as sRGB and is set to "Sample Type: Color" instead of "Sample Type: Linear Color" in the shader). I don't know what is happening here: whether the channels are for some reason rendered at a lower resolution than they should be, whether they are sampled in the wrong way, whether they are poorly compressed or whether the moon is 37 degrees too far to the south in relation to mars (I don't find this last reason to be too likely, though).

Or is it supposed to be like this and I'm just overestimating the value of packing textures this tight? Is it supposed to look this shit?

Replies

You can store your roughness or AO in the alpha if you like, but that's all.

This way, you could do RGB base colour, RGB metallic/roughness/AO and a normal map. If you don't need a metallic input, put the heightmap in that channel instead of in an alpha channel, because DXT5 is literally twice as big as DXT1.
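To put rough numbers on the "twice as big" point, here is a minimal sketch of the arithmetic. The block sizes are the standard ones for these formats (8 bytes per 4x4 block for BC1/DXT1, 16 bytes for BC3/DXT5, BC5 and BC7). Which format each texture ends up in is an assumption on my part (BC1 for RGB without alpha, BC3 with alpha, BC5 for a dedicated normal map), mip maps are ignored, and the function names are made up for illustration.

```python
# Bytes used by one 4x4 texel block in each block-compressed format.
BYTES_PER_BLOCK = {"BC1": 8, "BC3": 16, "BC5": 16, "BC7": 16}


def texture_bytes(width, height, fmt):
    """Size of the top mip level only; mip chains are ignored for simplicity."""
    blocks = ((width + 3) // 4) * ((height + 3) // 4)
    return blocks * BYTES_PER_BLOCK[fmt]


def material_bytes(formats, size=2048):
    """Total size of a set of square textures, one format name per texture."""
    return sum(texture_bytes(size, size, fmt) for fmt in formats)


# Two packed RGBA textures (assumed BC3) versus three maps
# (assumed BC1 base colour, BC5 normal, BC1 metallic/roughness/AO).
two_packed = material_bytes(["BC3", "BC3"])
three_maps = material_bytes(["BC1", "BC5", "BC1"])

print(two_packed / 2**20, "MiB vs", three_maps / 2**20, "MiB")
```

Under those assumptions both layouts come out at the same size, so the two-texture version mainly saves texture samples rather than memory.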

@fuzzzzzz : I'm not too sure what you mean. These are my compression settings as of now; do you mean the "Texture Group" should always be "world normal" (because it is) or do you mean something else?

@Millenia : What confuses me, though, is that these artifacts are only present in the R and G channel of my normal+AO+roughness map, not the blue and alpha or in any of the channels in my base color + height map.

Color+Normal+RMA (which, as I've learned over these last two days, should be MRA, like you said) was my usual three-texture setup. But I wanted to go even lower and fit 5 images into 2 textures, since I know that the Blue channel can be rebuilt in the shader.

Again, thank you for your responses. I was convinced that I have been doing something wrong. But if this is as good as it gets, I'll go back to using 3 maps.

EDIT: Added the full explanation from my initial post in my other thread into this thread as well.

http://www.fsdeveloper.com/wiki/index.php?title=DXT_compression_explained

Try putting the normal map in the Green and Alpha channels.

Basically, the normal map compression takes the Red and Green channels of your imported normal map and applies a re-normalisation which determines the blue channel for each pixel.

A normal is a unit vector, which means that x² + y² + z² is always equal to 1 once the channels are remapped from the 0 to 1 range to the -1 to 1 range. So with only R and G you can work out the value of B for each pixel, and this is what the compression relies on.

UE4 does not need the blue channel because it can be determined from Red and Green alone.

Did you notice that your Blue channel after the compression does not reflect the original AO map from the Blue channel before the import?

Also, neither AO nor roughness should be set to "world normal texture" for the texture group.

Last point: depending on the baker you used to bake the normal map, if the algorithm is correct and conventional, the red and green channels should be correct for the color space, and you would not get any issue like the one hazard668 mentioned.

@hazard668 : Thank you! Would packing normal in green and alpha allow me to use the red and blue channel for something else? Or would these other gray-scaled maps be ruined by the normal map compression? (I'm a bit slow, it seems).

@fuzzzzzz : Thanks again for your reply! About the AO: Now that you mention it, it's not as sharp and dark as it should be. Is there a way to split the channels into different texture groups or is this the (/one of the) reason not to pack other textures into normal maps?

EDIT: Should I just stick to 3 textures per material? Seems much easier...

If you're channel packing your normal map, make sure that texture is stored as linear and that, after sampling and before deriving the Blue channel, you bring the values from the 0 to 1 range to the -1 to 1 range. That means: append the normal's R and G, run them through a ConstantBiasScale node with a bias of -0.5 and a scale of 2, and plug that into a DeriveNormalZ node.
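For anyone who prefers to read the math rather than the node graph, here is a rough Python sketch of what those two steps do per pixel. The function names just mirror the node names and are not engine API; it assumes ConstantBiasScale computes (input + bias) * scale and that DeriveNormalZ clamps before taking the square root.

```python
import math


def constant_bias_scale(value, bias=-0.5, scale=2.0):
    """Remap a 0..1 channel value to -1..1 as (value + bias) * scale."""
    return (value + bias) * scale


def derive_normal_z(x, y):
    """Rebuild Z for a unit-length normal, clamping so sqrt never sees a negative."""
    return math.sqrt(max(0.0, min(1.0, 1.0 - (x * x + y * y))))


def unpack_normal(r, g):
    """Turn the packed R and G channel values (0..1) into an (x, y, z) normal."""
    x = constant_bias_scale(r)
    y = constant_bias_scale(g)
    return (x, y, derive_normal_z(x, y))


# A "flat" normal map texel (R = G = 0.5) points straight out of the surface.
print(unpack_normal(0.5, 0.5))
```

The clamp matters with compressed textures, because compression error can push x² + y² slightly above 1.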

If you're looking into reducing texture samples without sacrificing normal quality, you can store the normal map's R and G channels into the A channels of 2 different textures. For example, texture 1's RGB = BaseColor, A = NormalR. Texture 2's RGB = AO, Roughness, Metallic, A = NormalG.
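A rough way to see why the alpha channel is a safer home for normal data, at least under DXT5/BC3: the colour part of each 4x4 block stores two endpoints at 5/6/5 bits and gets 4 palette entries, while the alpha part stores two 8-bit endpoints and gets 8 entries of its own. The sketch below is a simplified single-channel model of that, not a real encoder (real encoders pick endpoints more cleverly, and BC7 is more flexible), and all names are made up for illustration.

```python
def quantize(value, bits):
    """Round a 0..1 value to the nearest value representable with `bits` bits."""
    levels = (1 << bits) - 1
    return round(value * levels) / levels


def encode_block(values, endpoint_bits, steps):
    """Toy block encoder: min/max endpoints, evenly spaced palette, nearest entry."""
    lo = quantize(min(values), endpoint_bits)
    hi = quantize(max(values), endpoint_bits)
    palette = [lo + (hi - lo) * i / (steps - 1) for i in range(steps)]
    return [min(palette, key=lambda entry: abs(entry - v)) for v in values]


def max_error(values, endpoint_bits, steps):
    """Largest absolute difference between the original and decoded values."""
    decoded = encode_block(values, endpoint_bits, steps)
    return max(abs(a - b) for a, b in zip(values, decoded))


# 16 texels of a smooth gradient, like a gently curved surface in a normal map.
block = [0.40 + 0.20 * i / 15 for i in range(16)]

red_error = max_error(block, endpoint_bits=5, steps=4)    # BC3 colour, red channel
alpha_error = max_error(block, endpoint_bits=8, steps=8)  # BC3 alpha channel

print(red_error, alpha_error)
```

On top of this, the colour palette is shared by R, G and B, so unrelated data such as AO in the blue channel competes with the normal's R and G for the same four entries, whereas the alpha channel is encoded on its own.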

It's okay if you sample your BaseColor texture as sRGB, the Alpha channel is always linear if I'm not mistaken.

I haven't solved my problem yet (and I still haven't gotten my head around all this about texture compression), I've just put that entire project on hold for a very long time.

Quick question: Won't adding an alpha channel to a texture double the file size? (or at least when compressing it to keep all the information in all RGB+A channels?) If so, wouldn't BaseColor+MRA with alphas take up more texture memory than three RGB textures? (and in that case, why am I even trying to do this...? o_o)

Check this out, might help: http://www.reedbeta.com/blog/understanding-bcn-texture-compression-formats/

It wasn't until about an hour after the interview was over that I realized that I should probably have talked about this specific problem.

It just so happens that I, very recently, returned to this project after about two years of a break. So I figured that I'd give it another shot.

Thanks to all of you who helped me out in this thread!