vertex colors vs bitmap texture - cost/performance considerations?

just wondering if there's any general rule when it comes to the performance/memory overhead for using vertex colors over a bitmap in materials.

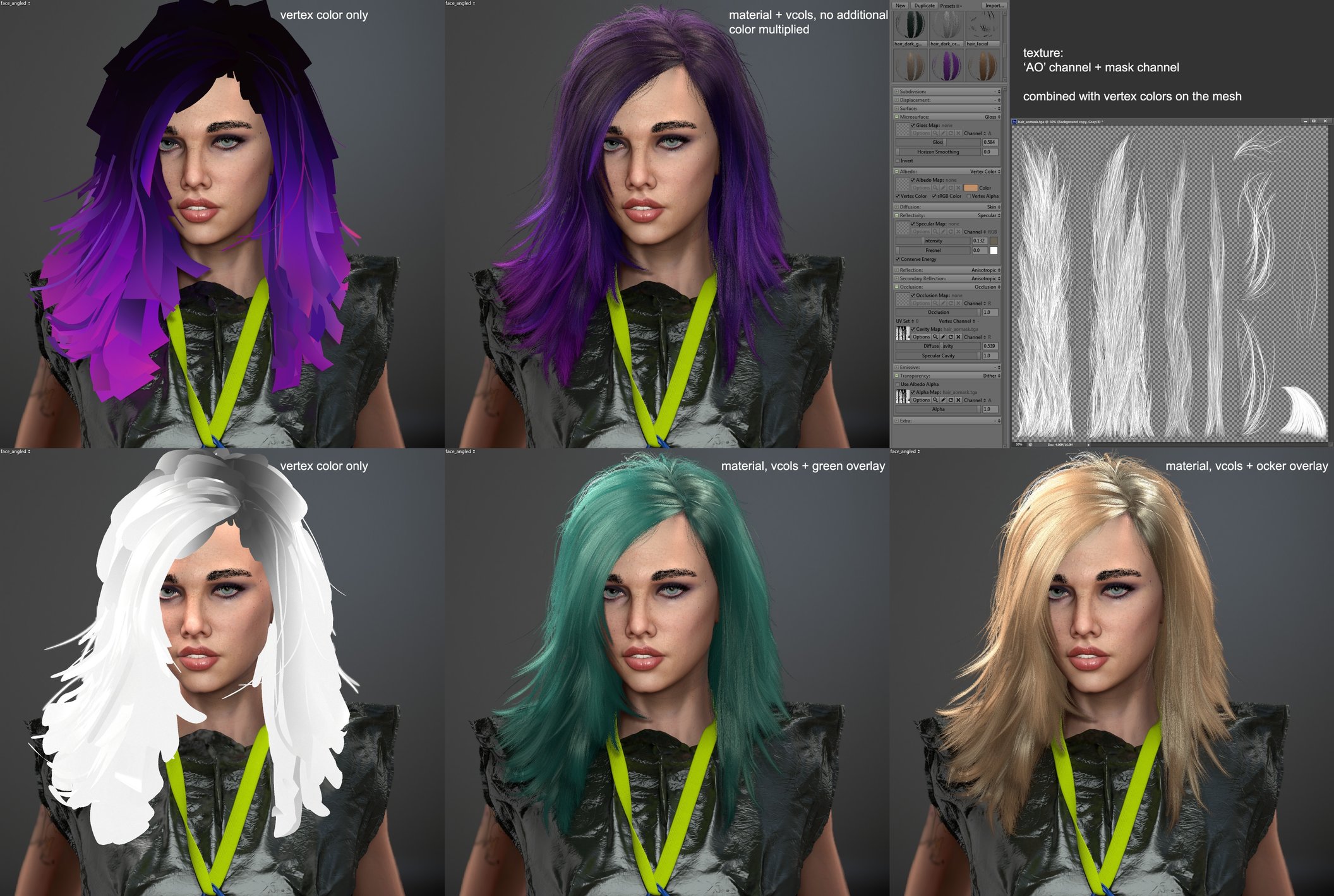

there's currently a single 2048 texture that i'm using in a hair material. it contains two 8bit/greyscale channels, one for a painted texture overlay that supplies strand detail, the other is the alpha mask. i combine that with vertex colors stored in the mesh (around 9500 vertices) and am able to multiply a color value on top of this in the material itself.
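a minimal sketch of the material math described above, assuming all values are normalized to 0..1 and that the combination is a plain per-channel multiply. the names (`shade_strand`, `detail`, `tint`) are made up for illustration and do not come from unreal or marmoset.

```python
def shade_strand(detail, vertex_color, tint):
    """Combine the inputs described in the post for one shaded point.

    detail       -- greyscale strand detail sampled from the texture (0..1)
    vertex_color -- (r, g, b) color interpolated from the mesh vertices (0..1)
    tint         -- (r, g, b) constant color set in the material (0..1)
    """
    # Assumption: everything is simply multiplied together per channel.
    return tuple(detail * v * t for v, t in zip(vertex_color, tint))


# A mid-grey strand texel over an orange vertex color with a neutral tint.
color = shade_strand(0.5, (1.0, 0.5, 0.0), (1.0, 1.0, 1.0))
print(color)
```

the alpha mask from the second channel is left out here because it only controls coverage, not color.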

the approach seems pretty handy to me because when modeling hair you always end up reusing geometry and a color texture on the same UV set just makes that very obvious. vertex colors on the other hand help to give the strands a unique look and are more quickly doodled onto the mesh than creating a texture.

like so:

in my mind using vertex colors must be a lot cheaper than loading in another bitmap to define the strand color but is there a way (preferably in unreal) to profile this or do we have a general rule of thumb for 2016-era engines/hardware? everything i can find regarding vertex color seems to be pretty old and possibly outdated.
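as a rough back-of-the-envelope comparison of storage only, the sketch below puts the numbers from this post side by side. the assumptions are: one 8-bit RGBA color per vertex (4 bytes), a square 2048 texture, and a full mip chain adding about one third. the two texture formats are examples, not what any particular engine will pick. this says nothing about shader cost or bandwidth, which would need actual profiling.

```python
def vertex_color_bytes(vertex_count, bytes_per_vertex=4):
    """Storage for one RGBA8 color per vertex (assumed 4 bytes each)."""
    return vertex_count * bytes_per_vertex


def texture_bytes(size, bits_per_pixel, with_mips=True):
    """Storage for a square texture; a full mip chain adds roughly 1/3."""
    base = size * size * bits_per_pixel // 8
    return base * 4 // 3 if with_mips else base


vc = vertex_color_bytes(9500)        # the hair mesh from the post
dxt1 = texture_bytes(2048, 4)        # block-compressed, 4 bits per pixel
rgba8 = texture_bytes(2048, 32)      # uncompressed, 32 bits per pixel

print(vc)  # 38000
print(dxt1, rgba8)
print(round(dxt1 / vc), "x the vertex color data for the compressed case")
```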

in my case the mesh would obviously have to be animated, whichever way, if that changes things. also, in this example it's broken up into an object that casts shadows and a much smaller one sharing the same material that does not.

needless to say - i've looked at a fair few assets from various games and nobody seems to be using vertex color this way.

Replies

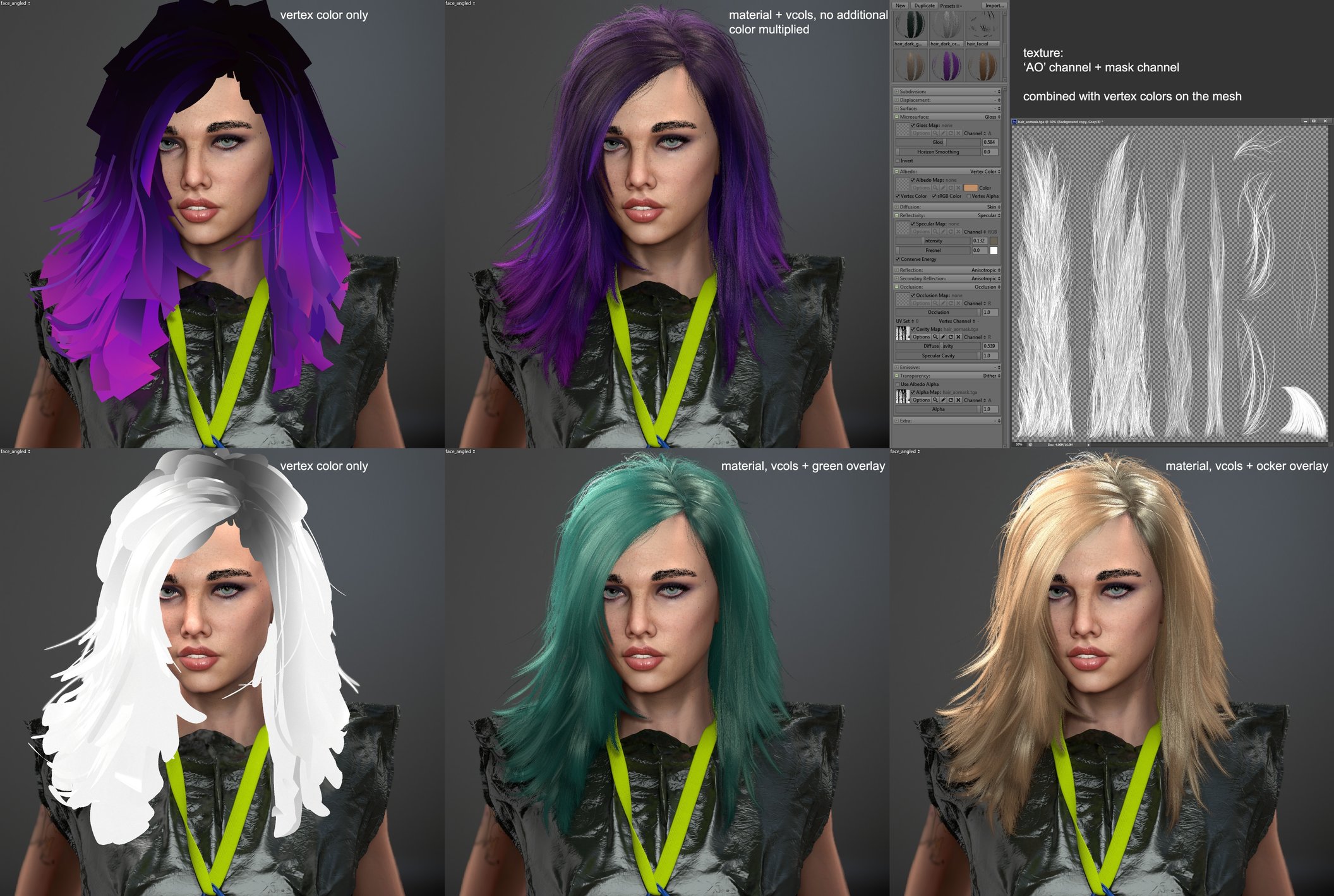

still, it would seem preferable for unique assets: time and texture space saved. this one was literally a 5 minute quickie - 9200 vertices, a 256 texture for AO, normals and mask -

But there are some limitations, which you already pointed out.

What can be a little more expensive is how you use the vertex color data, for example for alpha. It's still faster than textures, though.

But yeah, sometimes vertex color is used for other stuff in the shader, like offsetting the verts, AO, etc.

Packing your textures into RGBA can give you some bad compression artifacts though, depending on the platform. Especially if trying to pack a normal map and other data together. DXT5 for example is pretty ugly with normal maps.

seems i need to come up with a name for my method quickly so it can be credited properly in some soon (...) to be shipped game.

http://wiki.polycount.com/wiki/Normal_Map_Compression

I call it Channel Packing. Nobody's got a final term though, lots of variants out there.

http://wiki.polycount.com/wiki/ChannelPacking

I've heard some artists call it Swizzling, but that's a bit of a different thing... basically re-ordering the color channels, red into alpha, green into blue, etc.
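To make the distinction concrete, here is a small sketch of a swizzle on a single pixel. The function name `swizzle` and the order-string convention are assumptions chosen for illustration, not any tool's API: each letter in `order` names the source channel that feeds the output channel in that position.

```python
CHANNEL_INDEX = {"r": 0, "g": 1, "b": 2, "a": 3}


def swizzle(pixel, order):
    """Reorder the channels of one (r, g, b, a) pixel.

    order -- four letters from 'rgba'; output channel i is read from
             the input channel named by order[i].
    """
    return tuple(pixel[CHANNEL_INDEX[name]] for name in order.lower())


pixel = (10, 20, 30, 40)
print(swizzle(pixel, "bgra"))  # (30, 20, 10, 40): red and blue swapped
print(swizzle(pixel, "argb"))  # (40, 10, 20, 30): alpha moved to the front
```

No data is combined here, only moved around, which is why it is a different thing from packing several maps into one file.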

Here are some questions I have

- How did you get your vertex colors onto your hair planes? I read that they're stored in the mesh, so does that mean you painted the hair cards in ZBrush?

- You mentioned in your ArtStation post that it was based on FiberMesh and then refined manually. Could you explain that a bit more, @thomasp?

@Eric Chadwick

The way I understand everything above, Thomas used Channel Packing to get his results: he put the detail in one greyscale channel and the alpha in the only other channel, then pointed to those channels in the shader in Marmoset. And Channel Packing saves memory, at the expense of draw calls?

Also, is there any way I could get my hands on some of the files used to make this project? I have an easier time understanding what's going on if I can "reverse-engineer" it. If not, is there another source I can look at or practice on?

Greyscale isn't a channel, at least in game art usage. You can store a texture in two channels (grey + alpha) but the graphics card will always convert a runtime texture to RGBA, so you're only saving space on disk not in-game.

Yes, Toolbag can extract individual channels.

Channel packing saves memory because you can pack 3 or 4 grey textures into one RGB or RGBA file.
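A minimal sketch of the idea in plain Python, assuming each greyscale map is a list of rows of 0..255 values and all maps share the same size. The helper names `pack_channels` and `unpack_channel` are hypothetical; in practice this is done in an image editor or a texture tool.

```python
def pack_channels(*grey_maps):
    """Pack 3 or 4 equally sized greyscale maps into one RGB(A) image."""
    if len(grey_maps) not in (3, 4):
        raise ValueError("expected 3 (RGB) or 4 (RGBA) greyscale maps")
    sizes = {(len(m), len(m[0])) for m in grey_maps}
    if len(sizes) != 1:
        raise ValueError("all maps must have the same dimensions")
    # Take matching rows from every map, then matching pixels from every row.
    return [[tuple(px) for px in zip(*rows)] for rows in zip(*grey_maps)]


def unpack_channel(image, index):
    """Read one greyscale map back out, as a shader does per channel."""
    return [[px[index] for px in row] for row in image]


# Three tiny 2x2 example maps (values are made up).
ao = [[0, 255], [128, 64]]
detail = [[10, 20], [30, 40]]
mask = [[255, 255], [0, 0]]

packed = pack_channels(ao, detail, mask)
print(packed[0][0])  # (0, 10, 255)
```

One file and one texture sample then serve three maps; reading `packed` back with `unpack_channel(packed, 1)` returns the detail map unchanged.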

RGBA uses more memory than RGB, depending on the game texture format (lots of different compression options out there). In the game I'm currently working on, I'm using RGB instead of RGBA to pack my greyscale terrain tiles because it's about 1/2 the texture memory, and multiplied by a bunch of terrains in our game that ends up a big savings.
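The "about 1/2" figure matches what you would get with common block-compressed formats. The sketch below assumes DXT1 for RGB (4 bits per pixel) and DXT5 for RGBA (8 bits per pixel); those formats, the 1024 tile size and the count of 20 tiles are illustrative assumptions, not details of the game mentioned above.

```python
# Bits per pixel for two common block-compressed formats.
BITS_PER_PIXEL = {"DXT1": 4, "DXT5": 8}


def compressed_bytes(size, fmt):
    """Top-level size in bytes of a square block-compressed texture."""
    return size * size * BITS_PER_PIXEL[fmt] // 8


rgb = compressed_bytes(1024, "DXT1")   # packed RGB tile
rgba = compressed_bytes(1024, "DXT5")  # same tile with an alpha channel

tiles = 20  # hypothetical number of terrain tiles
print(rgb / rgba)             # 0.5
print((rgba - rgb) * tiles)   # bytes saved across all tiles
```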

Draw calls depend on a lot of things... how the shader is setup (how many different effects there are, are you using alpha blending, etc.), how the game rendering is setup (forward vs. deferred, etc.), how many different materials you have in a scene, etc. I think in general, channel packing doesn't change the number of draw calls. I haven't looked into that tbh. The memory savings can be huge though.

I show some more examples of channel packing in my sketchbook. If you don't mind looking at shader graphs.

As far as the two channels go: I guess I have to convert my image to greyscale to see the grey channel, because right now I'm only seeing the red, green and blue channels and the alpha. Am I understanding this correctly?

re: vertex colors - you could use zbrush for that, yes. however, trying to alter colors or layering is a bit of a challenge in there IMO. but you can transfer the mesh with polypaint intact into other applications (e.g. i recall a script for max for doing that).

personally i used a custom blender compile with a few extra features/fixes for vertex color painting that someone over at blenderartists was kind enough to provide (default blender did not paint 'through' the mesh). i had to alter the colors a couple of times because they looked completely different in marmoset. with zbrush handling the painting and then transferring to a rigged model prior to re-export, this would have been nasty if done with pixologic's "advanced polypainting".

re: fiber mesh - i've experimented with that, with the aim of just transferring over geometry or curves and having it almost 1-click, without the endless tweaking. not successful though. what i settled on instead was to export fibermesh as curves, bring that into the 3d app and use it as a source for creating some of the geometry and, for the main strands, as guides to conform prepared alpha cards along. then tweak vertices manually, excessively.

it saves time early on over doing everything manually to get volume and silhouette right, and you can use some of it to generate geometry from splines, but the bulk of the work is still all manual if you want this to look tight and not like a generated thing. i still mainly use this technique.

perhaps in a few years when we are able to throw six-figure polycounts just at hairstrips (without a programmer chasing us around the office with a baseball bat) can the whole thing finally be handled by styling in fibermesh or a hairsystem and then more or less just converting that.

As for the vertex colors, it took me a second, but I learned how to do it in Modo, the program I use.

This is the result I got.

There's some weird stuff going on at the top of the hairs; I'm not sure why it's appearing.