We Got Tired of “Final” Renders Breaking — So We Built a Gate Instead

If you’ve shipped enough work, you’ve seen this moment:

You approve a render.

Someone changes one small thing.

The final output doesn’t match what you signed off on.

No one did anything “wrong.”

But now you’re explaining differences to a client.

That’s the problem we wanted to solve.

The Real Issue Isn’t Quality — It’s Trust

Most render problems aren’t obvious failures.

They’re subtle mismatches:

- lighting feels different
- reflections behave oddly
- materials don’t respond the way they did yesterday

And the worst part?

You often don’t know when the trust broke.

“Final” becomes a feeling instead of a guarantee.

What If Approval Actually Meant Something?

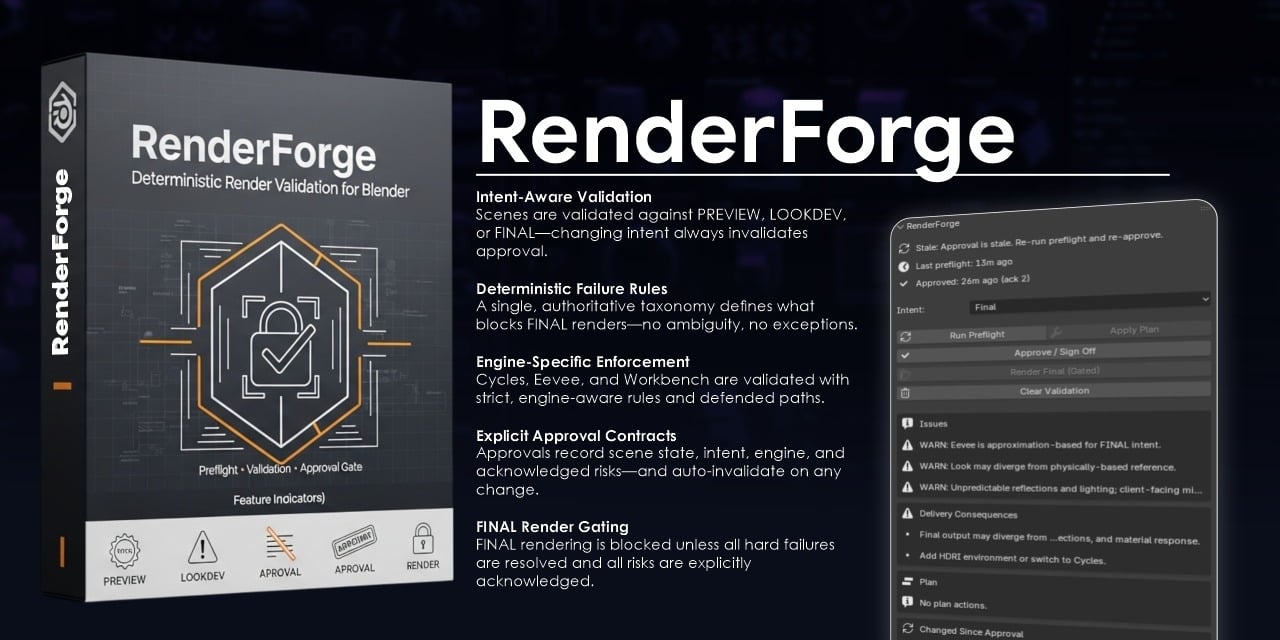

RenderForge is an attempt to make approval explicit.

Instead of just saying “looks good,” the system asks:

- What engine are you using?
- What’s the intent of this render?
- What assumptions does that engine make?
- What risks are you accepting?

If the scene changes after approval, RenderForge marks that approval as stale.

Not to punish — to protect.
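To make the idea concrete, here is a minimal sketch of how an approval could be tied to the exact scene state it was given for. This is not RenderForge's actual code: the names `Approval`, `scene_fingerprint`, `approve`, and `is_stale` are hypothetical, and the scene is assumed to be a plain dictionary of the settings that affect the render.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Tuple


def scene_fingerprint(scene_settings: dict) -> str:
    """Hash a snapshot of the render-relevant scene settings (hypothetical helper)."""
    # sort_keys keeps the hash independent of dictionary insertion order.
    canonical = json.dumps(scene_settings, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class Approval:
    """The four answers from the list above, plus the scene state they apply to."""
    engine: str
    intent: str
    assumptions: Tuple[str, ...]
    accepted_risks: Tuple[str, ...]
    fingerprint: str


def approve(scene_settings, engine, intent, assumptions, accepted_risks) -> Approval:
    """Record an approval against the current scene fingerprint."""
    return Approval(
        engine=engine,
        intent=intent,
        assumptions=tuple(assumptions),
        accepted_risks=tuple(accepted_risks),
        fingerprint=scene_fingerprint(scene_settings),
    )


def is_stale(approval: Approval, scene_settings: dict) -> bool:
    """An approval is stale once the scene no longer matches what was approved."""
    return approval.fingerprint != scene_fingerprint(scene_settings)


# Illustrative values only.
scene = {"engine": "EEVEE", "samples": 64, "sun_strength": 3.0}
approval = approve(
    scene,
    engine="EEVEE",
    intent="client preview",
    assumptions=["reflections are approximated"],
    accepted_risks=["reflections may differ in a later render"],
)
print(is_stale(approval, scene))  # False

scene["sun_strength"] = 3.5  # one small change after sign-off
print(is_stale(approval, scene))  # True
```

Comparing a hash rather than the full settings keeps the approval record small, at the cost of only telling you that something changed, not what.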

Eevee Isn’t the Enemy (Silence Is)

One of the biggest pain points is Eevee.

Eevee is fast. Eevee is useful.

Eevee is also approximate.

Instead of pretending that doesn’t matter, RenderForge makes it visible:

- Eevee FINAL is allowed under clear constraints
- Remaining risk is shown in plain language
- Approval requires acknowledging that risk

No surprise differences. No blame game.
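The three points above can be sketched as a small gate. Everything here is an assumption for illustration: the `ENGINE_RISKS` table, the risk wording, and the `approve_final` function are hypothetical and not RenderForge's real constraints or API.

```python
from typing import Dict, List, Set

# Hypothetical plain-language risk notes, keyed by engine and risk id.
ENGINE_RISKS: Dict[str, Dict[str, str]] = {
    "EEVEE": {
        "reflections": "Reflections are approximated and may look different in another engine.",
        "lighting": "Indirect lighting is approximated and may shift between renders.",
    },
    "CYCLES": {},
}


class ApprovalRefused(Exception):
    """Raised when a FINAL approval is requested without acknowledging every risk."""


def remaining_risks(engine: str) -> Dict[str, str]:
    """Return the plain-language risks for an engine; unknown engines are rejected."""
    key = engine.upper()
    if key not in ENGINE_RISKS:
        raise ValueError(f"No risk profile for engine: {engine}")
    return dict(ENGINE_RISKS[key])


def approve_final(engine: str, acknowledged: Set[str]) -> List[str]:
    """Allow FINAL only if every remaining risk has been explicitly acknowledged."""
    risks = remaining_risks(engine)
    missing = [text for risk_id, text in risks.items() if risk_id not in acknowledged]
    if missing:
        raise ApprovalRefused("Unacknowledged risks: " + " | ".join(missing))
    # Return the accepted risk text so it can be stored with the approval.
    return list(risks.values())


# Illustrative usage: the first attempt is refused, the second goes through.
try:
    approve_final("EEVEE", acknowledged={"reflections"})
except ApprovalRefused as err:
    print(err)

accepted = approve_final("EEVEE", acknowledged={"reflections", "lighting"})
print(len(accepted))  # 2
```

Refusing an unknown engine, instead of treating it as risk-free, keeps the gate from silently approving something nobody has described.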

Why This Helps Artists, Not Just TDs

The goal isn’t to slow anyone down.

It’s to:

- reduce rework
- make approvals stick
- stop late-stage “how did this change?” conversations
- give artists cover when something was approved correctly

When a render goes out, everyone knows what it represents.

Not a Silver Bullet — Just Fewer Surprises

RenderForge doesn’t fix art.

It doesn’t auto-correct scenes.

It doesn’t replace taste or experience.

It just makes “final” mean what everyone thinks it means.

If you’ve ever had a “but it looked fine yesterday” moment, you’re not alone. We’re curious how other teams handle approval and late-stage changes.
